Spatial data in R: Operations on continuous fields

SCII205: Introductory Geospatial Analysis / Practical No. 4

Alexandru T. Codilean

Last modified: 2025-06-14

Introduction

This practical is divided into two distinct parts. In the first section, the focus is on spatial interpolation, learning how to convert a discrete POINT geometry sf object into a continuous terra SpatRaster. This transformation is essential for analysing spatial patterns and gradients across a landscape, and the section offers a detailed, step-by-step guide to understanding and applying interpolation techniques to generate continuous surfaces from point data. In the second section, the emphasis shifts to analysing continuous fields through the use of an existing digital elevation model (DEM). This part of the practical demonstrates a range of geospatial operations, including convolution, viewshed analysis, and terrain analysis. The techniques covered here are crucial for applications such as hydrological modelling and landform analysis.

R packages

The practical will utilise R functions from packages explored in previous sessions:

The sf package: provides a standardised framework for handling spatial vector data in R based on the simple features standard (OGC). It enables users to easily work with points, lines, and polygons by integrating robust geospatial libraries such as GDAL, GEOS, and PROJ.

The terra package: is designed for efficient spatial data analysis, with a particular emphasis on raster data processing and vector data support. As a modern successor to the raster package, it utilises improved performance through C++ implementations to manage large datasets and offers a suite of functions for data import, manipulation, and analysis.

The tmap package: provides a flexible and powerful system for creating thematic maps in R, drawing on a layered grammar of graphics approach similar to that of ggplot2. It supports both static and interactive map visualizations, making it easy to tailor maps to various presentation and analysis needs.

The tidyverse: is a collection of R packages designed for data science, providing a cohesive set of tools for data manipulation, visualization, and analysis. It includes core packages such as ggplot2 for visualization, dplyr for data manipulation, tidyr for data tidying, and readr for data import, all following a consistent and intuitive syntax.

The practical also introduces these new packages:

The gstat package: provides a comprehensive toolkit for geostatistical modelling, prediction, and simulation. It offers robust functions for variogram estimation, multiple kriging techniques (including ordinary, universal, and indicator kriging), and conditional simulation, making it a cornerstone for spatial data analysis in R.

The whitebox package: is an R frontend for WhiteboxTools, an advanced geospatial data analysis platform developed at the University of Guelph. It provides an interface for performing a wide range of GIS, remote sensing, hydrological, terrain, and LiDAR data processing operations directly from R.

The plotly package: is an open-source library that enables the creation of interactive, web-based data visualizations directly from R by leveraging the capabilities of the

plotly.jsJavaScript library. It seamlessly integrates with other R packages like ggplot2, allowing users to convert static plots into dynamic, interactive charts with features such as zooming, hovering, and clickable elements.

Resources

- The Geocomputation with R book.

- The tidyverse library of packages documentation.

- The sf package documentation.

- The terra package documentation.

- The tmap package documentation.

- The gstat package documentation.

- The WhiteboxTools library documentation.

- The plotly package documentation.

The gstat package

The gstat R package is a comprehensive tool for spatial and geostatistical analysis. It includes functions for variogram estimation, various kriging methods (such as ordinary, universal, indicator, and co-kriging), and both conditional and unconditional stochastic simulation to generate multiple realisations of spatial fields. Designed for multivariable geostatistics, gstat is ideal for modeling spatial phenomena and analyzing relationships among different spatially distributed variables.

Key characteristics include:

Versatile modeling: Offers tools for variogram estimation, multiple kriging methods, and spatial simulation, making it suitable for diverse spatial prediction tasks.

Multivariable capabilities: Supports the joint analysis of multiple spatially correlated variables, essential for complex environmental and geochemical studies.

Integration with the R spatial ecosystem: Works seamlessly with packages like sf and terra, facilitating data handling, visualization, and advanced spatial analysis.

User-friendly interface: Features clear syntax for model specification, cross-validation, and diagnostics, accommodating both beginners and experts.

Robust documentation and community support: Extensive resources, tutorials, and a supportive community ensure effective use in both academic research and practical applications.

The whitebox package

The whitebox package in R serves as an interface to the open‐source WhiteboxTools library, which offers high-performance geospatial analysis capabilities. Here are its key characteristics and advantages:

High-performance processing: WhiteboxTools is developed in Rust, offering fast and efficient execution of computationally intensive geospatial operations. The whitebox package lets users leverage this performance directly from R.

Comprehensive geospatial functionality: It provides access to a broad array of tools for terrain analysis, hydrological modeling, remote sensing, and image processing. This versatility makes it suitable for diverse spatial analyses and environmental modeling tasks.

Seamless integration with R workflows: the package allows you to execute WhiteboxTools commands within R, enabling smooth integration with other R spatial packages for data manipulation, visualization, and further analysis.

User-friendly command interface: By wrapping the command-line operations of WhiteboxTools, the whitebox package provides a straightforward and accessible interface, which is beneficial for users who prefer R’s scripting environment over direct command-line interaction.

Open-source and actively developed: Both WhiteboxTools and its R interface are open-source, fostering community contributions, continuous improvements, and transparency in geospatial research.

Install and load packages

Packages such as sf, terra, tmap, and tidyverse should already be installed. Should this not be the case, execute the following chunk of code:

# Main spatial packages

install.packages("sf")

install.packages("terra")

# tmap dev version and leaflet for dynamic maps

install.packages("tmap",

repos = c("https://r-tmap.r-universe.dev", "https://cloud.r-project.org"))

install.packages("leaflet")

# tidyverse for additional data wrangling

install.packages("tidyverse")Next install gstat, plotly:

Load packages:

library(sf)

library(terra)

library(tmap)

library(leaflet)

library(tidyverse)

library(gstat)

library(plotly)

# Set tmap to static maps

tmap_mode("plot")The following code block installs both the whitebox package and the WhiteboxTools library. Since this is a two-step process, it is executed separately from the installation of the other packages:

# Install the CRAN version of whitebox

install.packages("whitebox")

# For the development version run this:

# if (!require("remotes")) install.packages('remotes')

# remotes::install_github("opengeos/whiteboxR", build = FALSE)

# Install the WhiteboxTools library

whitebox::install_whitebox()Load and initialise the whitebox package:

Load and prepare data

This practical is divided into two parts. The first section focuses on spatial interpolation and explains how to convert a POINT geometry sf object into a continuous terra SpatRaster. The second section uses an existing digital elevation model (DEM) to demonstrate various geospatial operations on continuous fields. Each section employs a different dataset, as detailed below.

Dataset for interpolation

The dataset for the first part of this practical comprises the following layers:

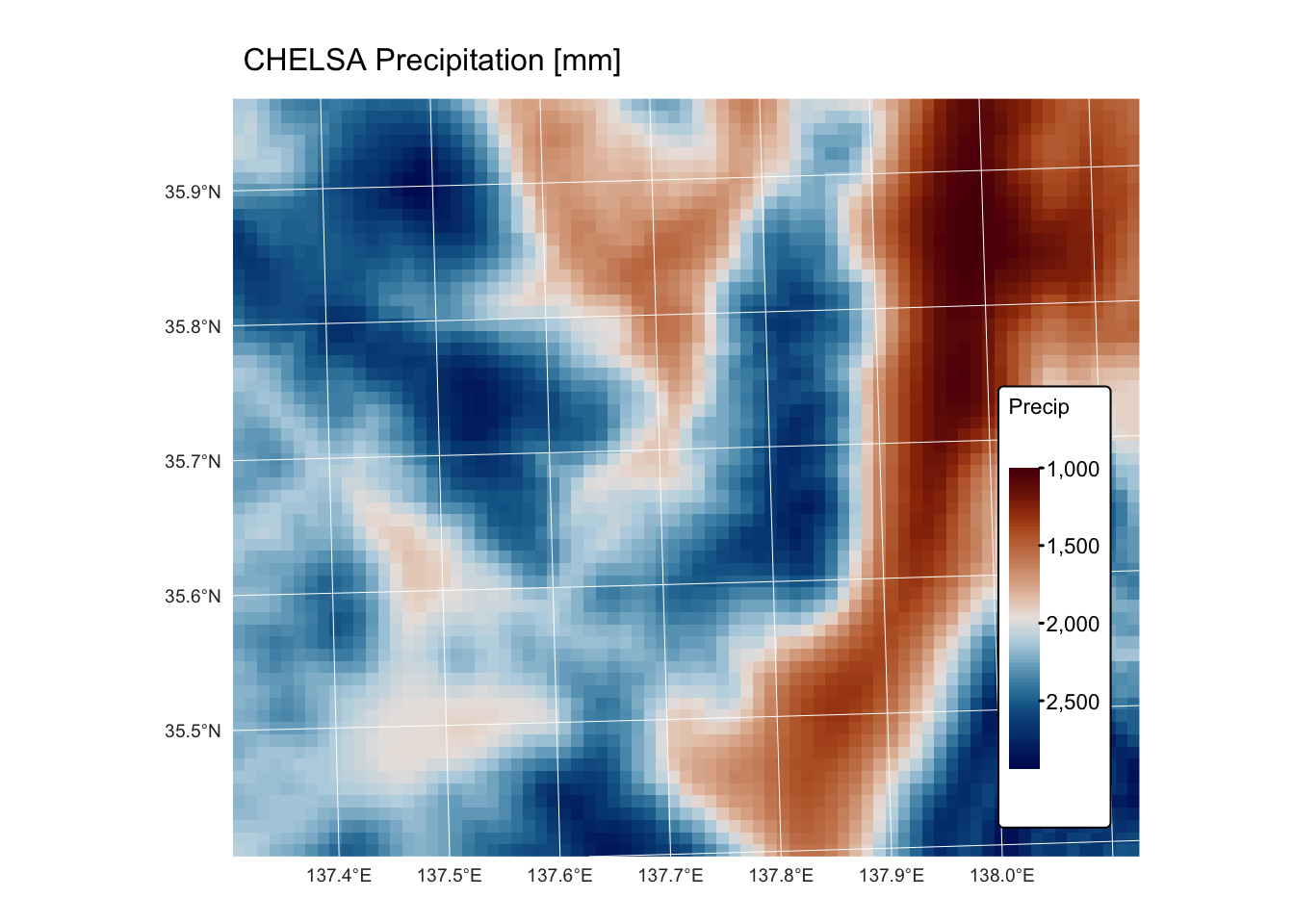

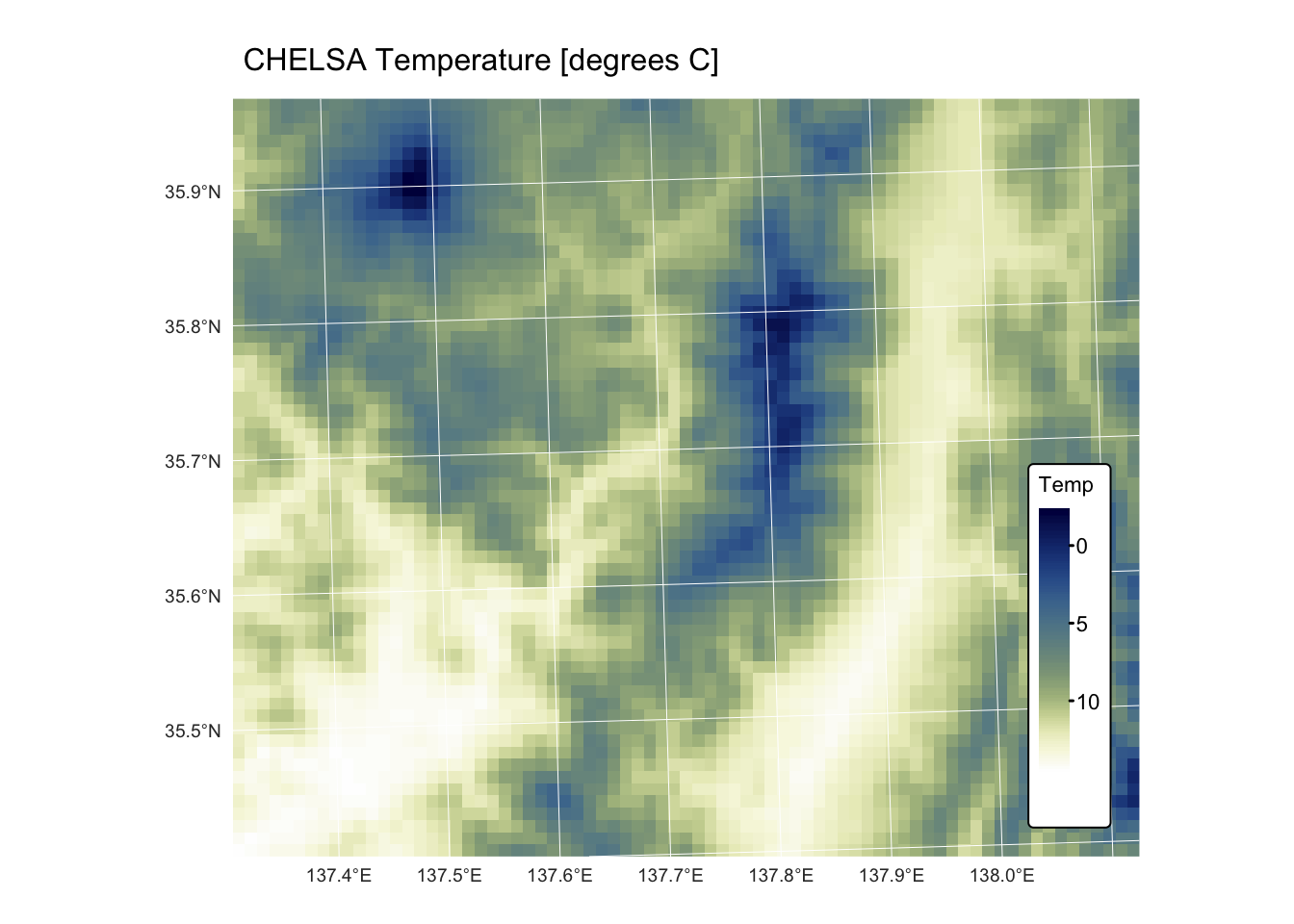

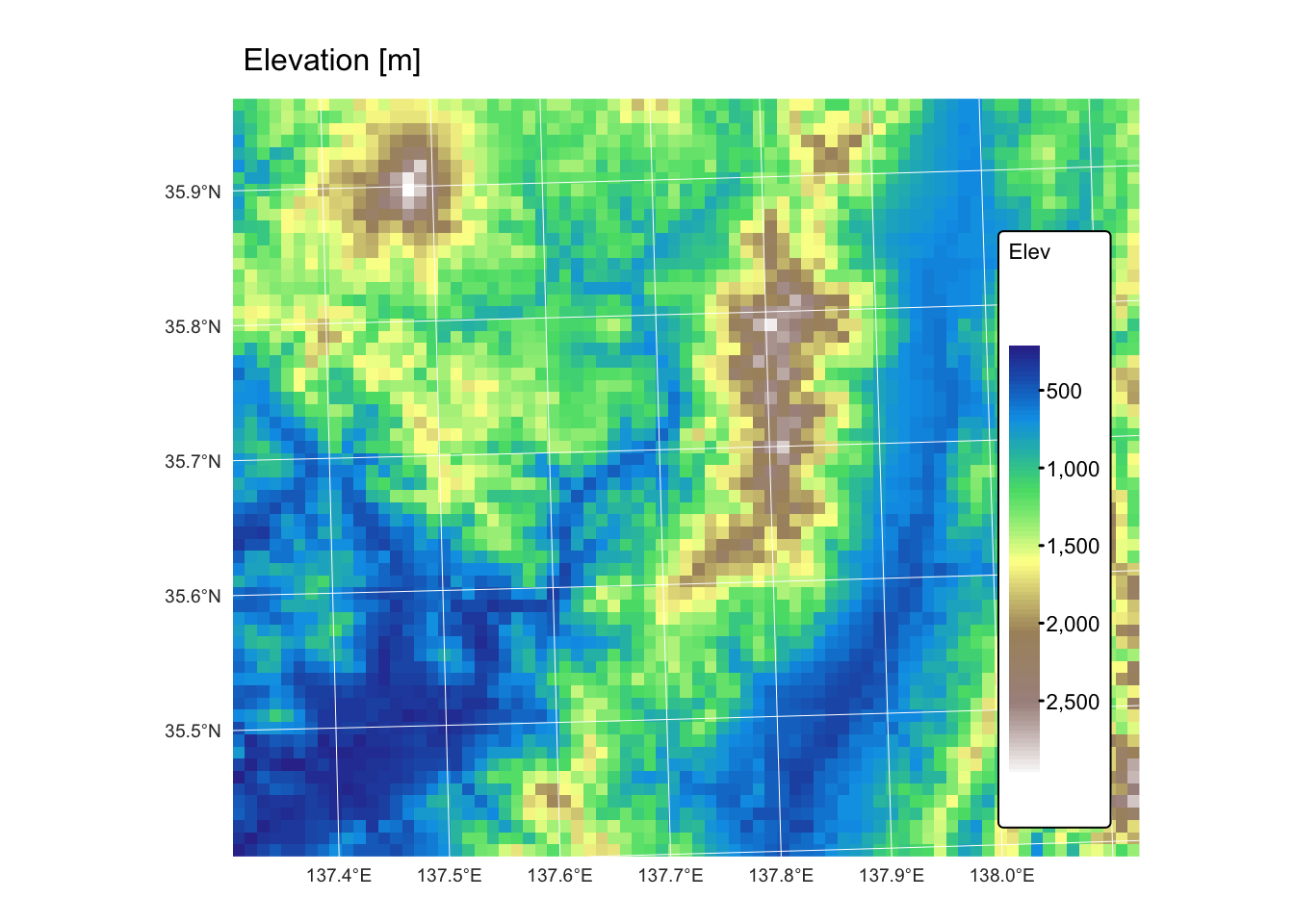

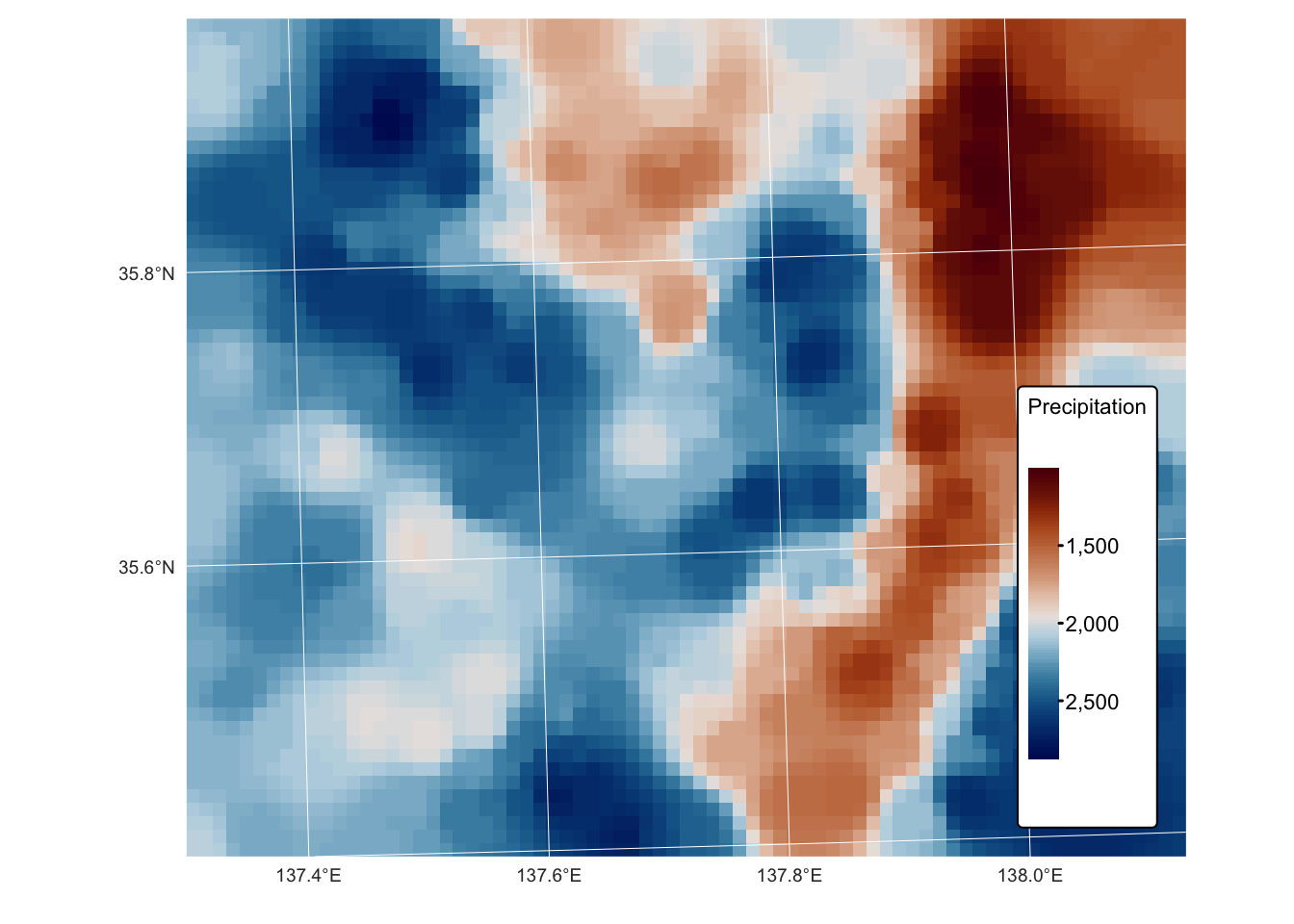

chelsa_map_1km: this GeoTIFF raster represents a subset of the global mean annual precipitation (BIO12) dataset for the period 1981 to 2010, derived from the CHELSA (Climatologies at high resolution for the Earth’s land surface areas) project. The raster features a 1 km resolution and is projected using the EPSG:32653 (WGS 84 / UTM zone 53N) coordinate reference system.chelsa_mat_1km: this GeoTIFF raster represents a subset of the global mean annual temperature dataset (BIO01) for the period 1981 to 2010, derived from the CHELSA project. The raster features a 1 km resolution and is projected using the EPSG:32653 (WGS 84 / UTM zone 53N) coordinate reference system.dem_1km: this GeoTIFF raster represents a 1 km resolution digital elevation model (DEM) for the area covered by the CHELSA precipitation and temperature rasters. The DEM was created by resampling a 10 m resolution DEM of the same region, sourced from the Geospatial Information Authority of Japan. It is projected using the EPSG:32653 (WGS 84 / UTM zone 53N) coordinate reference system.bnd.gpkg: This Geopackage contains a POLYGON geometry layer representing the bounding box of the aforementioned raster datasets. The vector layer is projected using the EPSG:32653 (WGS 84 / UTM zone 53N) coordinate reference system.

Load raster data

Read the three GeoTIFF files as SpatRaster objects and

verify that the extents match:

# Read in the 3 GeoTIFF files as SpatRaster objects

r_map <- rast("./data/data_spat3/raster/chelsa_map_1km.tif")

r_mat <- rast("./data/data_spat3/raster/chelsa_mat_1km.tif")

r_dem <- rast("./data/data_spat3/raster/dem_1km.tif")

names(r_map) <- "Precip"

names(r_mat) <- "Temp"

names(r_dem) <- "Elev"

# Verify that the extents match:

# (1) Extract numeric extent values for each raster

ext_map <- c(xmin(r_map), xmax(r_map), ymin(r_map), ymax(r_map))

ext_mat <- c(xmin(r_mat), xmax(r_mat), ymin(r_mat), ymax(r_mat))

ext_dem <- c(xmin(r_dem), xmax(r_dem), ymin(r_dem), ymax(r_dem))

# (2) Compare the extents pairwise

if (!isTRUE(all.equal(ext_map, ext_mat, tolerance = .Machine$double.eps^0.5)) ||

!isTRUE(all.equal(ext_map, ext_dem, tolerance = .Machine$double.eps^0.5))) {

stop("The raster extents do not match!")

}Plot the three rasters with tmap:

tm_shape(r_map) +

tm_raster("Precip",

col.scale = tm_scale_continuous(values = "-scico.vik")

) +

tm_layout(frame = FALSE,

legend.text.size = 0.7,

legend.title.size = 0.7,

legend.position = c("right", "bottom"),

legend.bg.color = "white") +

tm_title("CHELSA Precipitation [mm]", size = 1) +

tm_graticules(col = "white", lwd = 0.5)

tm_shape(r_mat) +

tm_raster("Temp",

col.scale = tm_scale_continuous(values = "scico.davos")

) +

tm_layout(frame = FALSE,

legend.text.size = 0.7,

legend.title.size = 0.7,

legend.position = c("right", "bottom"),

legend.bg.color = "white") +

tm_title("CHELSA Temperature [degrees C]", size = 1) +

tm_graticules(col = "white", lwd = 0.5)

tm_shape(r_dem) +

tm_raster("Elev",

col.scale = tm_scale_continuous(values = "matplotlib.terrain")

) +

tm_layout(frame = FALSE,

legend.text.size = 0.7,

legend.title.size = 0.7,

legend.position = c("right", "bottom"),

legend.bg.color = "white") +

tm_title("Elevation [m]", size = 1) +

tm_graticules(col = "white", lwd = 0.5)

Create points for interpolation

To illustrate the various interpolation methods available in the gstat package, we will:

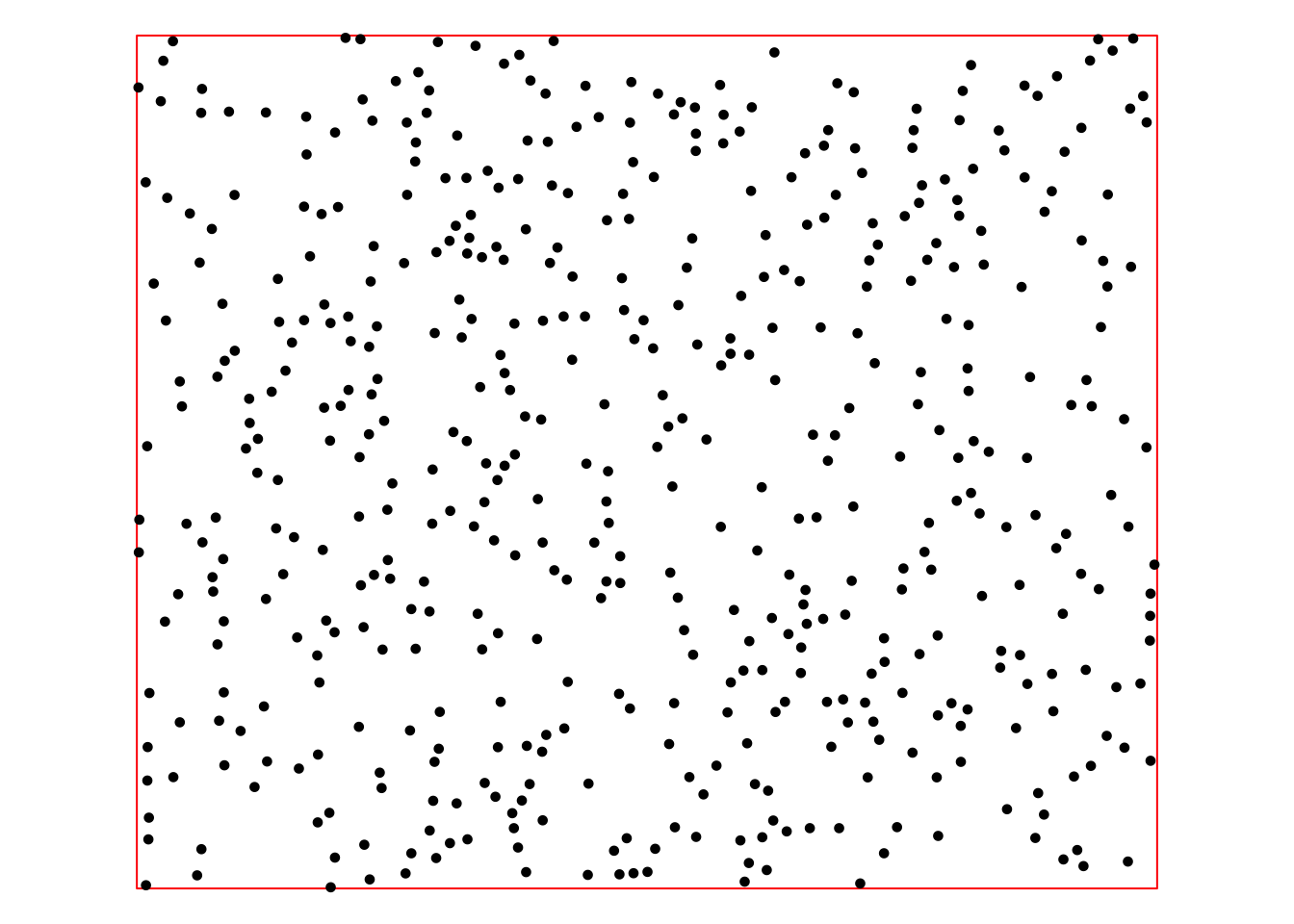

Create an sf object containing 500 POINT geometries randomly distributed within the

bndbounding box, assigning each point values from the precipitation, temperature, and elevation rasters.Randomly split this sf object into two equal datasets — a training set for performing the interpolation and a testing set for evaluating the performance of each method. Additionally, the interpolated rasters can be directly compared with the original GeoTIFFs.

The next R code block generates the 500 random points and saves them as an sf object. Given that the resolution of the provided rasters is 1 km, the random points are ensured to be at least 1 km apart.

# Create a bounding box from the bnd layer provided

bnd <- st_read("./data/data_spat3/vector/bnd.gpkg")## Reading layer `Bounding Box' from data source

## `/Volumes/Work/Dropbox/Transfer/SCII206/R-Resource/data/data_spat3/vector/bnd.gpkg'

## using driver `GPKG'

## Simple feature collection with 1 feature and 20 fields

## Geometry type: MULTIPOLYGON

## Dimension: XY

## Bounding box: xmin: 709205 ymin: 3920571 xmax: 783782.5 ymax: 3982934

## Projected CRS: WGS 84 / UTM zone 53Nbbox <- st_as_sfc(st_bbox(bnd))

# Initialize variables for the greedy sampling algorithm

accepted_points <- list()

n_points <- 500 # Number of points

min_dist <- 1000 # Minimum distance in meters

attempt <- 0

max_attempts <- 100000 # To avoid infinite loops

# Note: This method assumes that the CRS units are in meters.

# If not, you may need to transform the coordinate system appropriately.

# Greedily sample points until 500 valid ones are collected

while (length(accepted_points) < n_points && attempt < max_attempts) {

attempt <- attempt + 1

# Generate a random point within the bounding box

bbox_coords <- st_bbox(bbox)

x <- runif(1, bbox_coords["xmin"], bbox_coords["xmax"])

y <- runif(1, bbox_coords["ymin"], bbox_coords["ymax"])

pt <- st_sfc(st_point(c(x, y)), crs = st_crs(bnd))

# If this is the first point, add it immediately

if (length(accepted_points) == 0) {

accepted_points[[1]] <- pt

} else {

# Combine already accepted points into one sfc object

accepted_sfc <- do.call(c, accepted_points)

# Calculate distances from the new point to all accepted points

dists <- st_distance(pt, accepted_sfc)

# If the new point is at least 1000 m from all others, accept it

if (all(as.numeric(dists) >= min_dist)) {

accepted_points[[length(accepted_points) + 1]] <- pt

}

}

}

# Check that enough points were generated

if (length(accepted_points) < n_points) {

stop("Could not generate 500 points with the specified minimum distance.")

}

# Combine accepted points into a single sf object

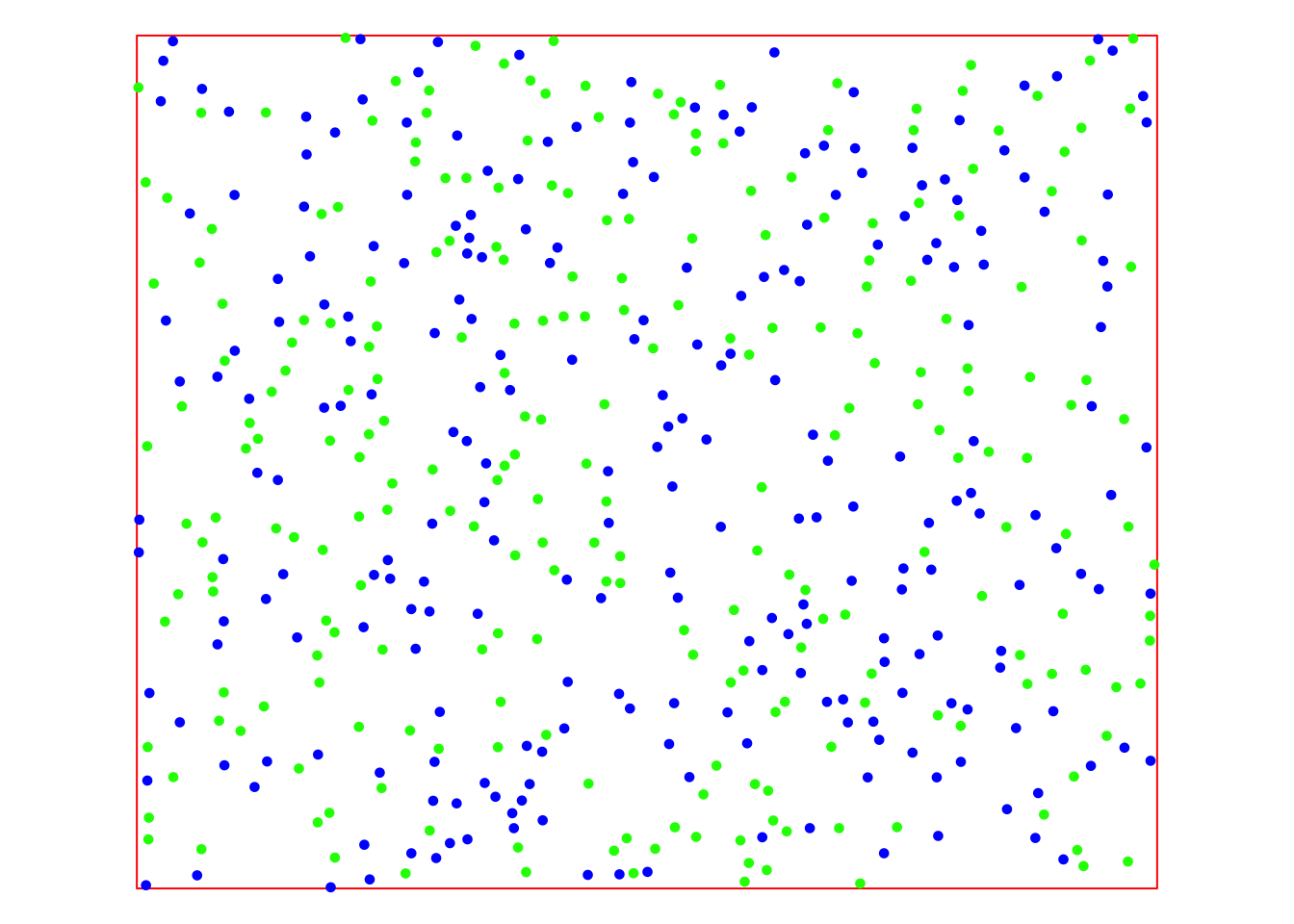

points_sf <- st_sf(geometry = do.call(c, accepted_points))Plot random points with tmap to inspect spatial distribution:

# Plot random points with bbox

bnd_map <- tm_shape(bnd) + tm_borders("red")

bnd_map + tm_shape(points_sf) + tm_dots(size = 0.3) +

tm_layout(frame = FALSE) +

tm_legend(show = FALSE)

The above point sf object (points_sf)

looks satisfactory: there is no obvious clustering of points and there

is good coverage of the area of interest. The following chunk of R code

extracts the values from the raster layers and assigns them as attribute

columns of points_sf.

# Stack the three rasters together

r_stack <- c(r_map, r_mat, r_dem)

# Convert the sf object to a SpatVector for extraction

points_vect <- vect(points_sf)

# Extract values from the raster stack at point locations

extracted_vals <- terra::extract(r_stack, points_vect)

# Remove the ID column produced by extract()

extracted_vals <- extracted_vals[, -1]

# Set the column names for the extracted values explicitly.

colnames(extracted_vals) <- c("Precipitation", "Temperature", "Elevation")

# Bind the extracted raster values to the sf object.

points_sf <- cbind(points_sf, extracted_vals)Finally, the random points are divided into two subsets — a training set and a testing set — each comprising 250 randomly selected points.

# Add a unique ID column

points_sf <- points_sf %>% mutate(ID = row_number())

# Set a seed for reproducibility and randomly split the data into two groups

set.seed(123) # Adjust or remove the seed if necessary

training_indices <- sample(nrow(points_sf), 250)

training_sf <- points_sf[training_indices, ]

testing_sf <- points_sf[-training_indices, ]

#Inspect the final objects by printing the first few rows

head(training_sf)We can see from the above that each new sf object includes the three columns with data from the precipitation, temperature, and elevation rasters, plus one ID column holding unique identifiers for each row.

Plot the training and testing sets with tmap:

# Plot random points with bbox

bnd_map <- tm_shape(bnd) +

tm_borders("red")

training_map <- bnd_map +

tm_shape(training_sf) + tm_dots(size = 0.3, "blue") +

tm_layout(frame = FALSE) +

tm_legend(show = FALSE)

training_map +

tm_shape(testing_sf) + tm_dots(size = 0.3, "green") +

tm_layout(frame = FALSE) +

tm_legend(show = FALSE)

The map displays the training data in blue and the testing data in green. With these sets of points, we can now apply and evaluate the various interpolation methods offered by gstat.

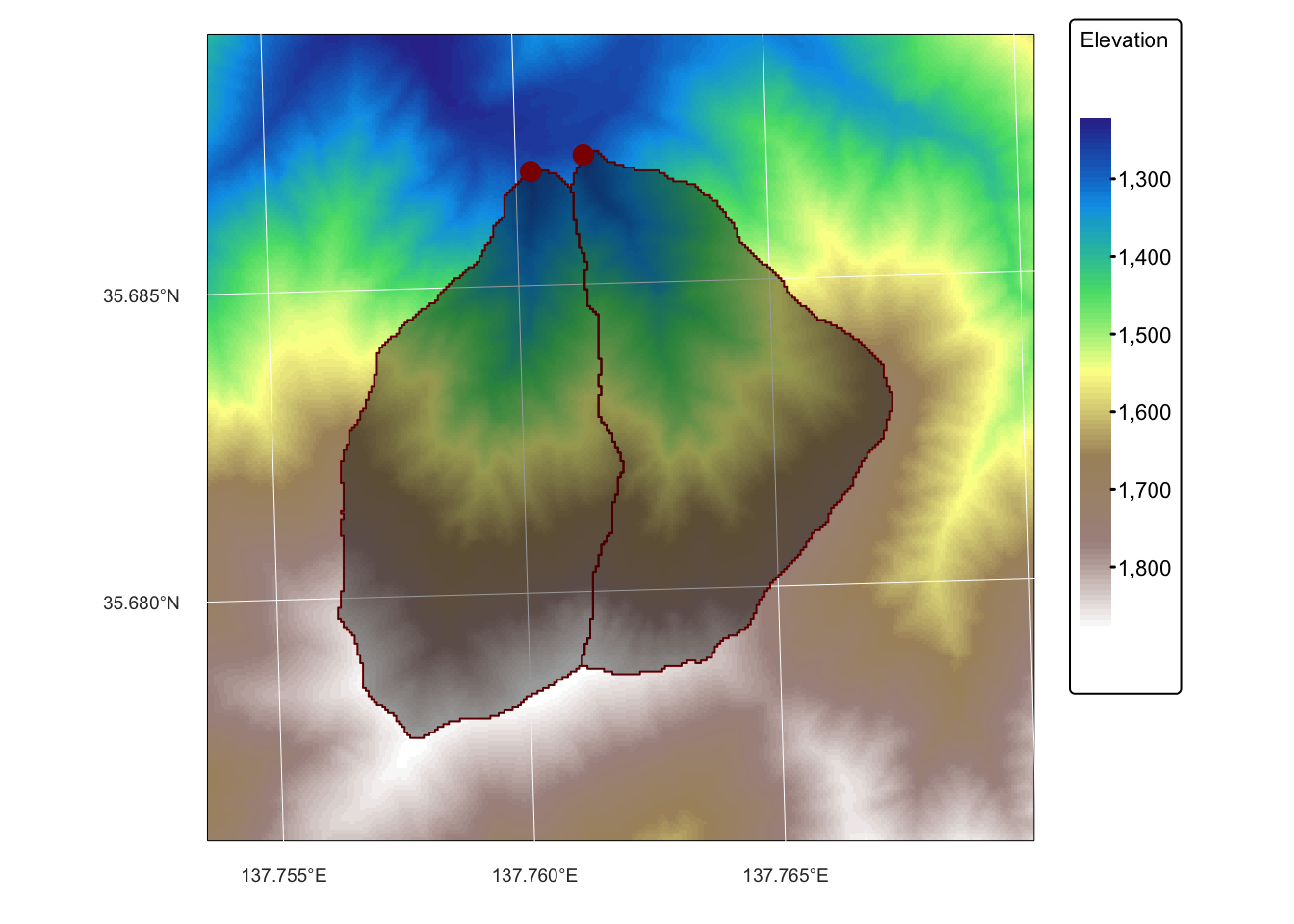

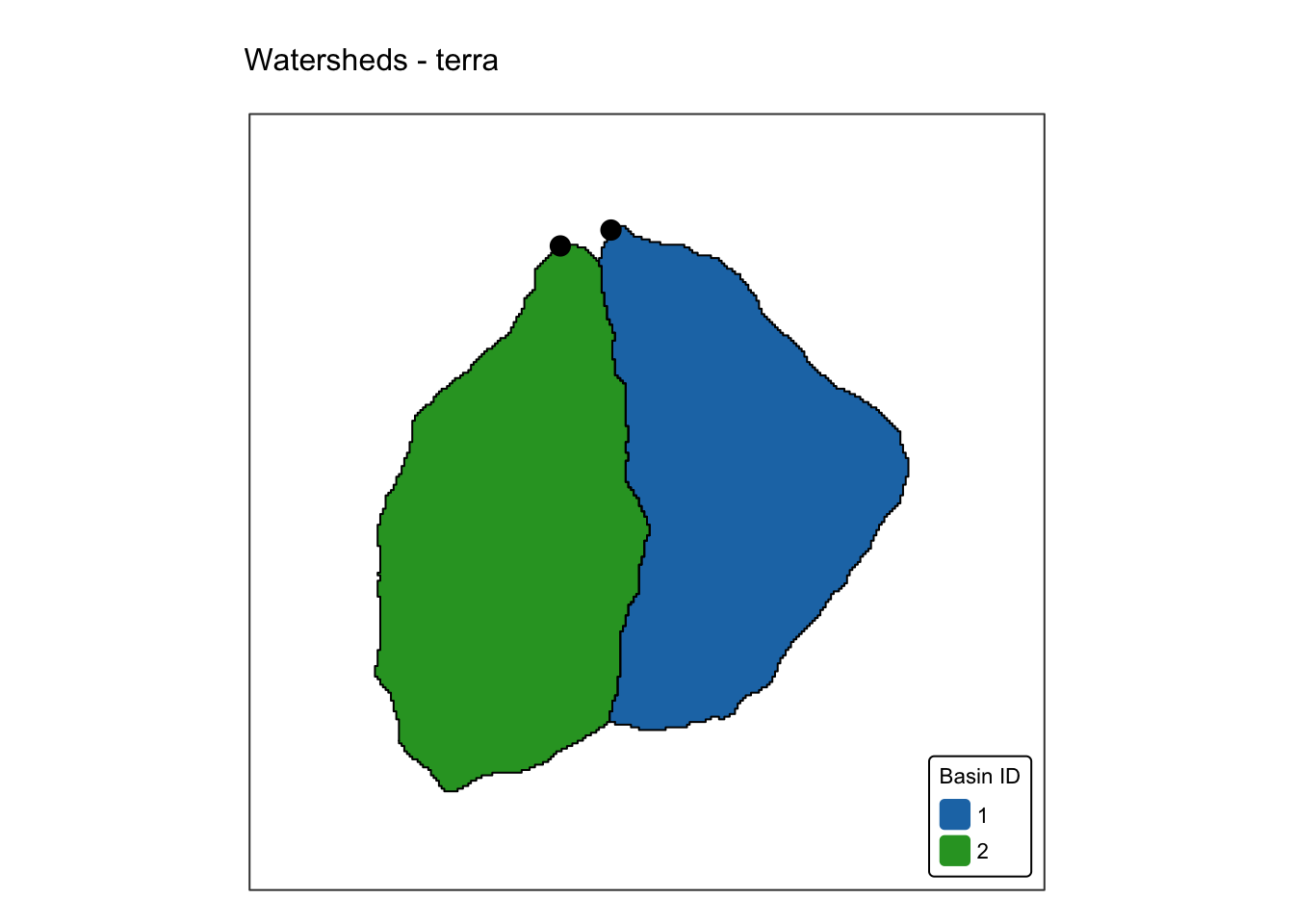

Dataset for operations

The dataset for the second part of this practical comprises the following layers:

dem_5m: A GeoTIFF raster representing a 5 m resolution digital elevation model (DEM) obtained from the Geospatial Information Authority of Japan.basin_outlets.gpkg: A Geopackage containing a POINT geometry layer that represents the outlets of two small watersheds.basin_poly.gpkg: A Geopackage with a POLYGON geometry layer representing the watersheds draining into basin_outlets, delineated fromdem_5m.bnd_small.gpkg: A Geopackage containing a POLYGON geometry layer representing the extent ofdem_5m.

All layers are projected using the EPSG:32653 (WGS 84 / UTM zone 53N) coordinate reference system.

The next R code block reads in the data and plots it on a map using tmap:

## Reading layer `Bounding Box Small' from data source

## `/Volumes/Work/Dropbox/Transfer/SCII206/R-Resource/data/data_spat3/vector/bnd_small.gpkg'

## using driver `GPKG'

## Simple feature collection with 1 feature and 1 field

## Geometry type: POLYGON

## Dimension: XY

## Bounding box: xmin: 749207.5 ymin: 3951521 xmax: 750697.5 ymax: 3952976

## Projected CRS: WGS 84 / UTM zone 53N## Reading layer `JP Basins' from data source

## `/Volumes/Work/Dropbox/Transfer/SCII206/R-Resource/data/data_spat3/vector/basins_poly.gpkg'

## using driver `GPKG'

## Simple feature collection with 2 features and 2 fields

## Geometry type: MULTIPOLYGON

## Dimension: XY

## Bounding box: xmin: 749442.5 ymin: 3951706 xmax: 750442.5 ymax: 3952766

## Projected CRS: WGS 84 / UTM zone 53N## Reading layer `JP basin outlets' from data source

## `/Volumes/Work/Dropbox/Transfer/SCII206/R-Resource/data/data_spat3/vector/basin_outlets.gpkg'

## using driver `GPKG'

## Simple feature collection with 2 features and 3 fields

## Geometry type: POINT

## Dimension: XY

## Bounding box: xmin: 749790 ymin: 3952729 xmax: 749884.9 ymax: 3952758

## Projected CRS: WGS 84 / UTM zone 53N# Read in DEM as SpatRast object

jp_dem <- rast("./data/data_spat3/raster/dem_5m.tif")

names(jp_dem) <- "Elevation"

# Plot bnd + Add DEM, basins, and outlets

bnd_map <- tm_shape(bnd_small) + tm_borders("black") +

tm_layout(frame = FALSE)

dem_map <- bnd_map +

tm_shape(jp_dem) + tm_raster("Elevation",

col.scale = tm_scale_continuous(values = "matplotlib.terrain")) +

tm_layout(frame = FALSE,

legend.text.size = 0.7,

legend.title.size = 0.7,

legend.bg.color = "white") +

tm_graticules(col = "white", lwd = 0.5, n.x = 3, n.y = 3)

basin_map <-dem_map +

tm_shape(jp_basins) + tm_borders("darkred") + tm_fill("black", fill_alpha = 0.4)

tm_layout(frame = FALSE)

basin_map +

tm_shape(jp_outlets) + tm_dots(size = 0.7, "darkred") +

tm_layout(frame = FALSE)

Spatial interpolation

Data models

In geospatial analysis the natural world is captured using two fundamental data models:

Discrete entities: are individual, well-defined objects represented as points, lines, or polygons with clear boundaries, such as buildings or roads. They focus on the exact location and shape of each object, making them ideal for analyses that require detailed spatial relationships.

Continuous fields: represent spatial phenomena as grids of cells where each cell holds a value, such as temperature or elevation, that changes gradually across space. They assume that the measured attribute exists continuously over the area, allowing for smooth interpolation and analysis of natural gradients.

The primary difference between the two data models, namely that discrete entities capture distinct, bounded features, while continuous fields describe phenomena that vary seamlessly across a region, influences the choice of data formats (vector for discrete and raster for continuous) and the analytical methods used in geospatial analysis.

Continuous fields are typically obtained by gathering data from a variety of sources—such as field measurements, remote sensing imagery, or sensor networks — and then using interpolation techniques to estimate values at unmeasured locations.

Spatial interpolation methods

Spatial interpolation is a set of methods used to estimate unknown values at unsampled locations based on known data points. It leverages the principle of spatial autocorrelation — the concept that nearby locations are more likely to have similar values than those farther apart (often referred to as Tobler’s First Law of Geography).

The choice of interpolation method depends on the nature of the data, the spatial structure of the phenomenon being studied, and the specific requirements of the analysis. All interpolation methods require careful consideration of data quality and density, as these factors significantly affect the accuracy of the interpolated surfaces.

Spatial interpolation methods are typically classified as either deterministic or stochastic, and further categorized as either exact or inexact. The differences between these categories are briefly discussed below.

Deterministic/stochastic methods

Deterministic Methods:

- Operate under the assumption that spatial relationships follow fixed mathematical rules.

- Examples include inverse distance weighting (IDW) and spline interpolation.

- Rely solely on the geometric configuration of sample points, assigning weights that decrease with distance.

- Produce a single “best guess” value for each unsampled location without modeling statistical variability.

Stochastic Methods:

- Also known as geostatistical methods.

- Treat spatial data as a realisation of an underlying random process.

- Use statistical models (often encapsulated in variograms) to describe spatial autocorrelation.

- Provide not only predicted values but also measures of prediction uncertainty.

- Particularly advantageous when data exhibit inherent randomness or when quantifying uncertainty is crucial.

Exact/inexact interpolators

Exact interpolators:

- Reproduce the original data values exactly at the sample locations.

- Common in many deterministic methods and some stochastic methods (e.g., kriging with a zero nugget effect).

- Best used when data are highly accurate and it’s important to honor the original measurements.

Inexact (smoothing) interpolators:

- Balance fidelity to the data with the smoothness of the resulting surface.

- Do not necessarily pass through every sample point.

- Examples include smoothing splines and trend surface analysis.

- Help to smooth out measurement noise and local variability.

- Preferable when reducing noise and generalising the data is more important than reproducing every original value.

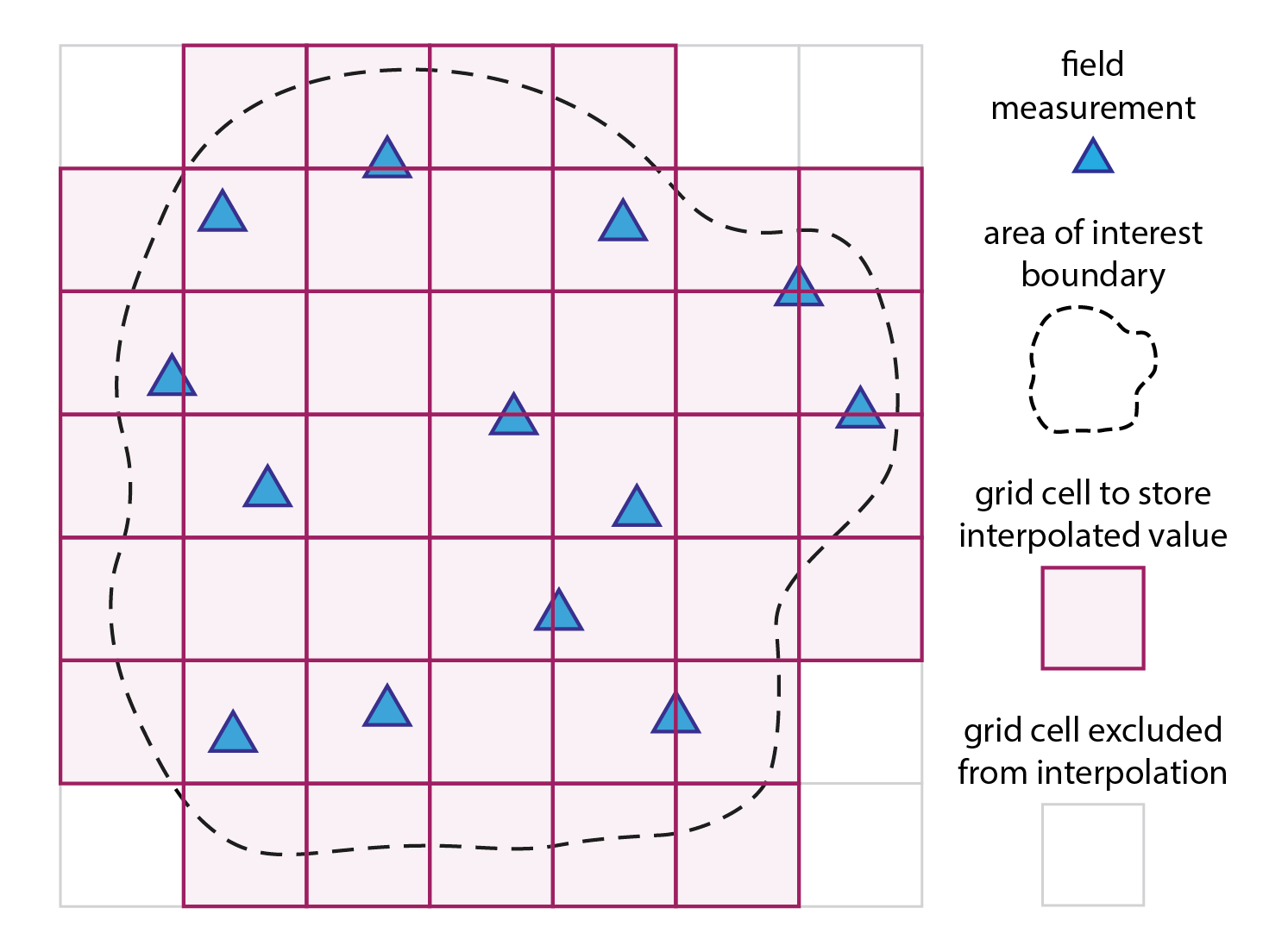

Spatial interpolation with gstat

As noted above, spatial interpolation is a method used to estimate unknown values at specific locations based on measurements taken at known locations. In geospatial analysis, these specific locations usually refer to a regular grid of cells (magenta squares in the Fig. 1 below). The method applies a mathematical model or function to compute a new value for each grid cell (magenta squares) based on the measured values from the proximity of that grid cell (blue triangles). The grid does not need to have a rectangular shape, and can be cropped to the boundary of the area of interest.

Fig. 1: Spatial interpolation in a nutshell: a mathematical model/function is used to compute a new value for each grid cell (magenta squares) based on the measured values from the proximity of that grid cell (blue triangles).

The next block of R code produces a regular grid for interpolation

and crops this to the bnd sf polygon

object.

# Create a grid based on the bounding box of the polygon

# We set the spatial resolution to 1 km (75 x 62 cells)

bbox <- st_bbox(bnd)

x.range <- seq(bbox["xmin"], bbox["xmax"], length.out = 75)

y.range <- seq(bbox["ymin"], bbox["ymax"], length.out = 62)

grid <- expand.grid(x = x.range, y = y.range)

# Convert the grid to an sf object and assign the same CRS as the polygon

grid_sf <- st_as_sf(grid, coords = c("x", "y"), crs = st_crs(bnd))

# Crop the grid to the polygon area using an intersection

grid_sf_cropped <- st_intersection(grid_sf, bnd)

# Convert the cropped grid to a Spatial object (gstat requires Spatial objects)

grid_sp <- as(grid_sf_cropped, "Spatial")Fitting a first order polynomial

The simplest mathematical model we can fit to a dataset is a linear model. For two dimensional data, the first order polynomial has the following equation:

\[f(x,y) = ax + by + c\] where: \(a\), \(b\), and \(c\) are constants and \(x\) and \(y\) are the x and y coordinates of the data, respectively.

The next example fits a first order polynomial (i.e., a plane) to the

cloud of points defined by our training dataset

(training_sf). We create two surfaces: one for temperature

and one for precipitation.

# Extract coordinates from points and prepare data for modeling

points_coords <- st_coordinates(training_sf)

temp_df <- data.frame(x = points_coords[,1], y = points_coords[,2], # Temp.

value_temp = points_sf$Temperature)

precip_df <- data.frame(x = points_coords[,1], y = points_coords[,2], # Precip.

value_precip = points_sf$Precipitation)

# Fit a first order polynomial model (linear trend surface)

lm_model_temp <- lm(value_temp ~ x + y, data = temp_df)

lm_model_precip <- lm(value_precip ~ x + y, data = precip_df)

# Prepare grid data for prediction by extracting coordinates

grid_coords <- st_coordinates(grid_sf_cropped)

grid_df_temp <- data.frame(x = grid_coords[,1], y = grid_coords[,2]) # Temp.

grid_df_precip <- data.frame(x = grid_coords[,1], y = grid_coords[,2]) # Precip.

# Predict trend surface

grid_df_temp$pred <- predict(lm_model_temp, newdata = grid_df_temp) # Temp.

grid_df_precip$pred <- predict(lm_model_precip, newdata = grid_df_precip) # Precip.

# Convert the prediction grid to a terra SpatRaster using type = "xyz"

rast_temp <- rast(grid_df_temp, type = "xyz")

rast_precip <- rast(grid_df_precip, type = "xyz")

# Assign a CRS and set variable names

crs(rast_temp) <- st_crs(bnd)$wkt

crs(rast_precip) <- st_crs(bnd)$wkt

names(rast_temp) <- "Temperature"

names(rast_precip) <- "Precipitation"To visualize the resulting first order polynomial surfaces and how well they approximate the data, the next blocks of R code will produce some interactive 3D plots. We start with the temperature data:

# Data for plotting

t <- rast_temp

sf_points <- training_sf

# Prepare Data for 3D Plotting

# Convert the raster to a matrix for plotly.

t_mat <- terra::as.matrix(t, wide = TRUE)

# Flip the matrix vertically so that row order matches the y sequence (ymin to ymax).

t_mat <- t_mat[nrow(t_mat):1, ]

# Define x and y sequences based on the raster extent and resolution.

x_seq_t <- seq(terra::xmin(t), terra::xmax(t), length.out = ncol(t_mat))

y_seq_t <- seq(terra::ymin(t), terra::ymax(t), length.out = nrow(t_mat))

# Extract coordinates from the sf object.

sf_coords <- st_coordinates(sf_points)

# Combine coordinates with the z attribute

sf_df_t <- data.frame(X = sf_coords[, "X"], Y = sf_coords[, "Y"],

Z = sf_points$Temperature)

# Create an Interactive 3D Plot with plotly

# Initialize a plotly surface plot for the raster.

p_t <- plot_ly(x = ~x_seq_t, y = ~y_seq_t, z = ~t_mat) %>%

add_surface(showscale = TRUE, colorscale = "Portland", opacity = 0.8)

# Add the sf object to plot.

p_t <- p_t %>% add_trace(x = sf_df_t$X, y = sf_df_t$Y, z = sf_df_t$Z,

type = 'scatter3d', mode = 'markers',

marker = list(size = 3, color = 'blue'))

# Modify the layout to control the z-axis range and adjust the vertical exaggeration.

p_t <- p_t %>% layout(scene = list(

zaxis = list(title = "Temperature (oC)",

# Alternatively, specify a custom range, e.g., range = c(0, 20)

range = c(min(sf_df_t$Z, na.rm = TRUE), max(sf_df_t$Z, na.rm = TRUE))),

aspectmode = "manual", aspectratio = list(x = 1, y = 1, z = 0.3)

))

# Display the temperature plot

p_tAnd now, plot precipitation:

# Data for plotting

r <- rast_precip

sf_points <- training_sf

# Prepare Data for 3D Plotting

# Convert the raster to a matrix for plotly.

r_mat <- terra::as.matrix(r, wide = TRUE)

# Flip the matrix vertically so that row order matches the y sequence (ymin to ymax).

r_mat <- r_mat[nrow(r_mat):1, ]

# Define x and y sequences based on the raster extent and resolution.

x_seq_r <- seq(terra::xmin(r), terra::xmax(r), length.out = ncol(r_mat))

y_seq_r <- seq(terra::ymin(r), terra::ymax(r), length.out = nrow(r_mat))

# Extract coordinates from the sf object.

sf_coords <- st_coordinates(sf_points)

# Combine coordinates with the z attribute

sf_df_r <- data.frame(X = sf_coords[, "X"], Y = sf_coords[, "Y"],

Z = sf_points$Precipitation)

# Create an Interactive 3D Plot with plotly

# Initialize a plotly surface plot for the raster.

p_r <- plot_ly(x = ~x_seq_r, y = ~y_seq_r, z = ~r_mat) %>%

add_surface(showscale = TRUE, colorscale = "Portland", opacity = 0.8)

# Add the sf object to plot.

p_r <- p_r %>% add_trace(x = sf_df_r$X, y = sf_df_r$Y, z = sf_df_r$Z,

type = 'scatter3d', mode = 'markers',

marker = list(size = 3, color = 'blue'))

# Modify the layout to control the z-axis range and adjust the vertical exaggeration.

p_r <- p_r %>% layout(scene = list(

zaxis = list(title = "Precipitation [mm]",

# Alternatively, specify a custom range, e.g., range = c(0, 20)

range = c(min(sf_df_r$Z, na.rm = TRUE), max(sf_df_r$Z, na.rm = TRUE))),

aspectmode = "manual", aspectratio = list(x = 1, y = 1, z = 0.3)

))

# Display the precipitation plot

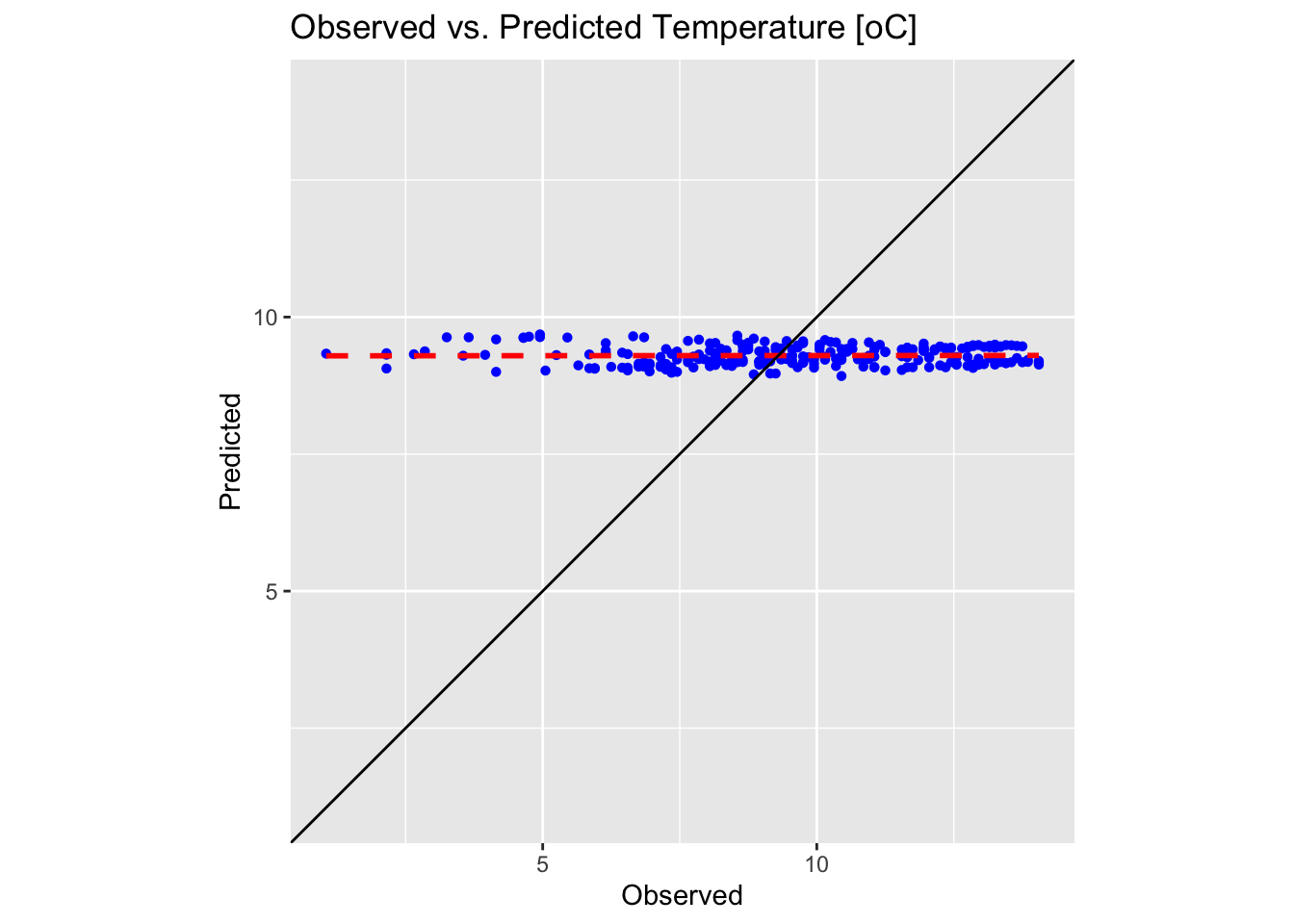

p_rAs you can see, a first order polynomial is doing a bad job at

approximating the temperature and precipitation data. Another, more

formal, way of evaluating the performance of the interpolation is by

using the testing dataset testing_sf.

In the next block of R code, we assign the predicted temperature and precipitation values to the testing dataset and then compare these values with the actual ones:

# Create copy of training set so we do not overwrite the original

testing_sf_new <- testing_sf

# Extract predicted values

r_stack <- c(rast_precip, rast_temp) # Stack the two rasters together

points_vect <- vect(testing_sf_new) # Convert to a SpatVector for extraction

extracted_vals <- terra::extract(r_stack, points_vect) # Extract values

extracted_vals <- extracted_vals[, -1] # Remove the ID column produced by extract()

colnames(extracted_vals) <- c("Precip_predict", "Temp_predict") # Set the column names

testing_sf_new <- cbind(testing_sf_new, extracted_vals) # Bind the extracted raster values

# Compute the common limits for both axes from the data

lims_temp <- range(c(testing_sf_new$Temperature, testing_sf_new$Temp_predict))

# First plot: Observed vs. Predicted for Temperature

plot1 <- ggplot(testing_sf_new, aes(x = Temperature, y = Temp_predict)) +

geom_point(shape = 16, colour = "blue") +

geom_smooth(method = "lm", se = FALSE, linetype = "dashed", colour = "red", size = 1) +

geom_abline(intercept = 0, slope = 1, colour = "black") +

labs(title = "Observed vs. Predicted Temperature [oC]",

x = "Observed", y = "Predicted") +

coord_fixed(ratio = 1) +

theme(aspect.ratio = 1) +

scale_x_continuous(limits = lims_temp) +

scale_y_continuous(limits = lims_temp)

# Compute the common limits for both axes from the data

lims_precip <- range(c(testing_sf_new$Precipitation, testing_sf_new$Precip_predict))

# Second plot: Observed vs. Predicted for Precipitation

plot2 <- ggplot(testing_sf_new, aes(x = Precipitation, y = Precip_predict)) +

geom_point(shape = 16, colour = "blue") +

geom_smooth(method = "lm", se = FALSE, linetype = "dashed", colour = "red", size = 1) +

geom_abline(intercept = 0, slope = 1, colour = "black") +

labs(title = "Observed vs. Predicted Precipitation [mm]",

x = "Observed", y = "Predicted") +

coord_fixed(ratio = 1) +

theme(aspect.ratio = 1) +

scale_x_continuous(limits = lims_precip) +

scale_y_continuous(limits = lims_precip)

# Make plots

plot1

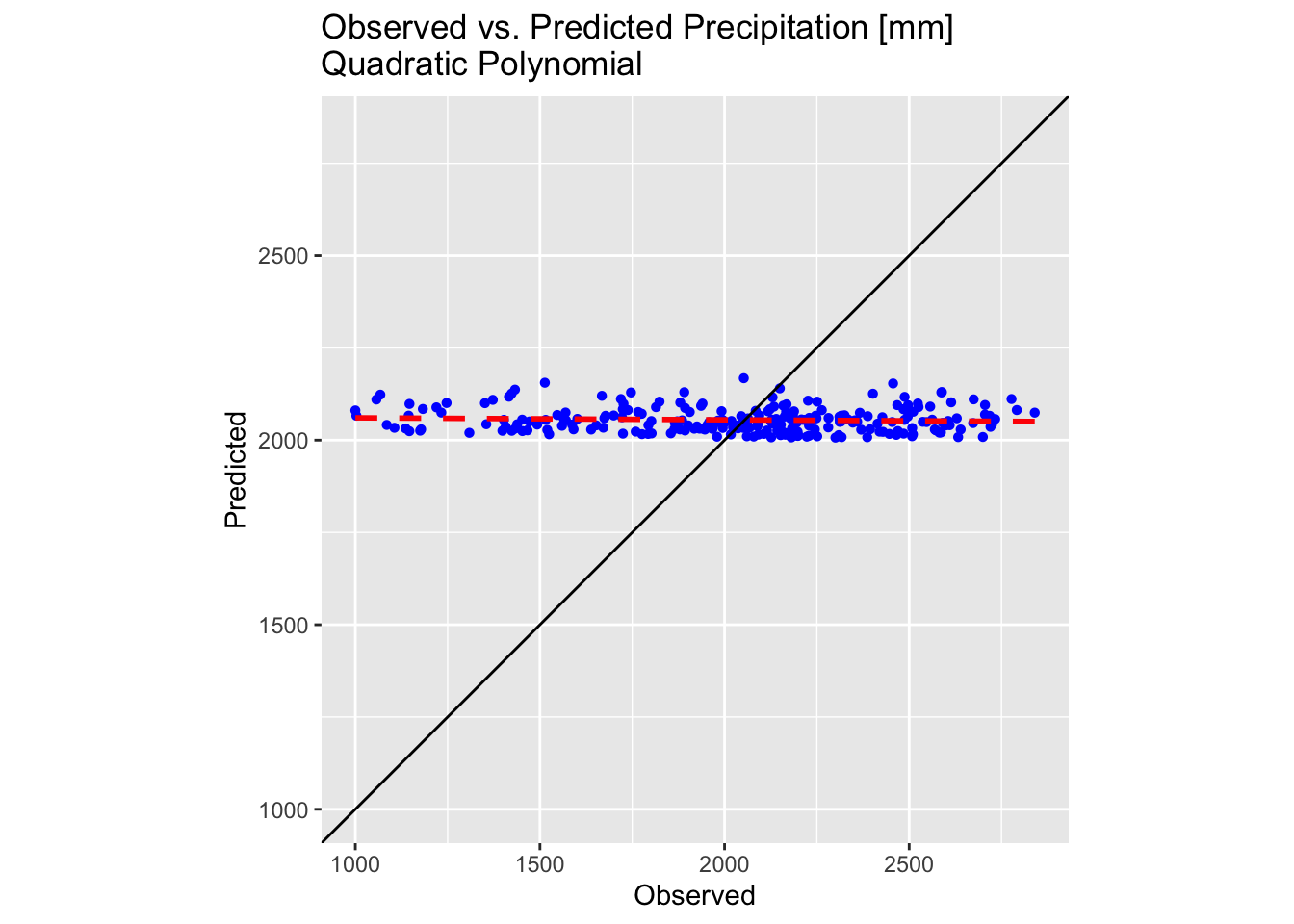

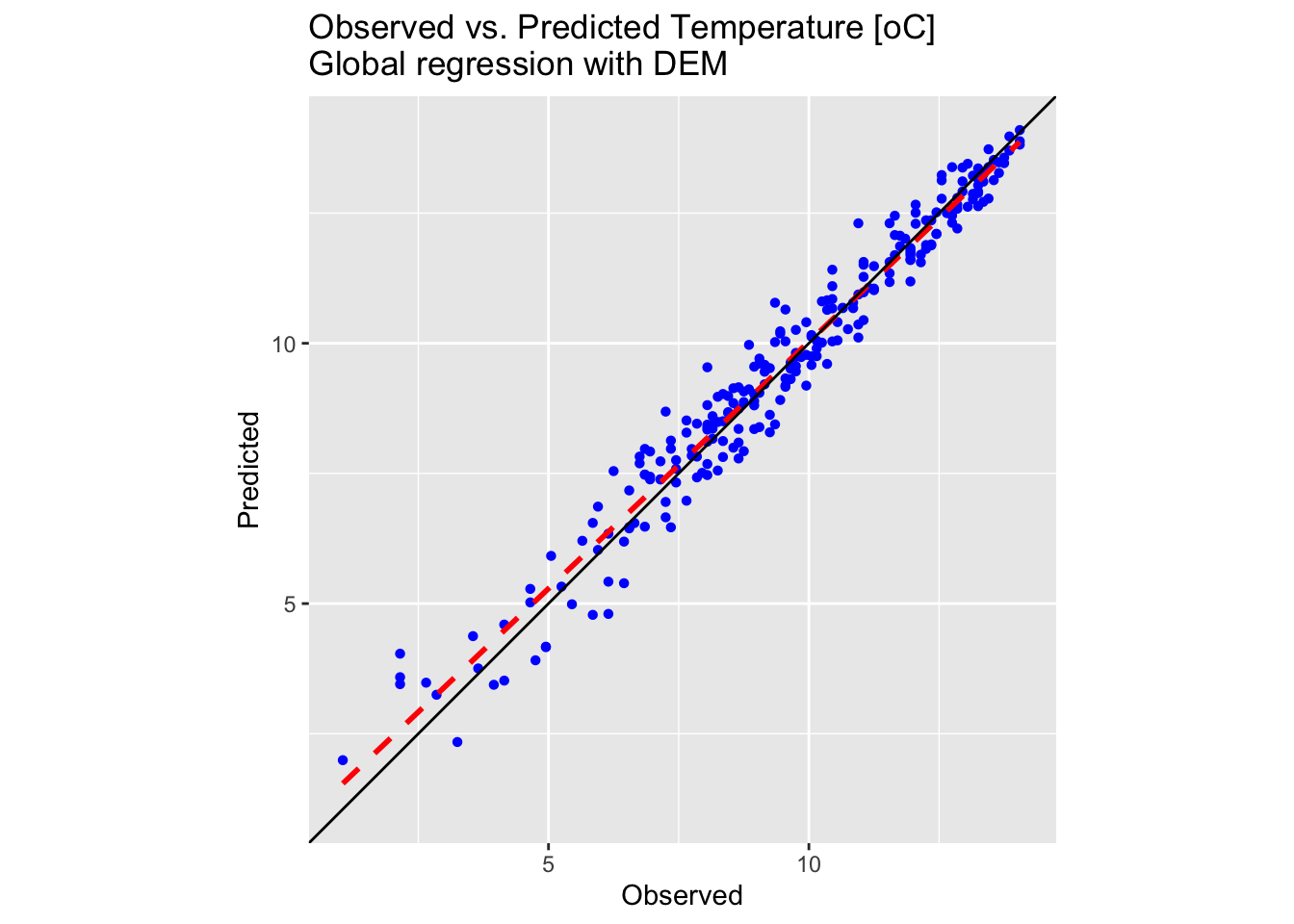

These plots confirm the poor performance of first-order polynomial interpolation in approximating our precipitation and temperature values. In each plot, the black solid line represents the ideal 1:1 correspondence, while the red dotted line shows the linear fit to the predicted versus observed data. The closer the red dotted line is to the black line, the more accurate the interpolation; in a perfect scenario, the two would overlap exactly.

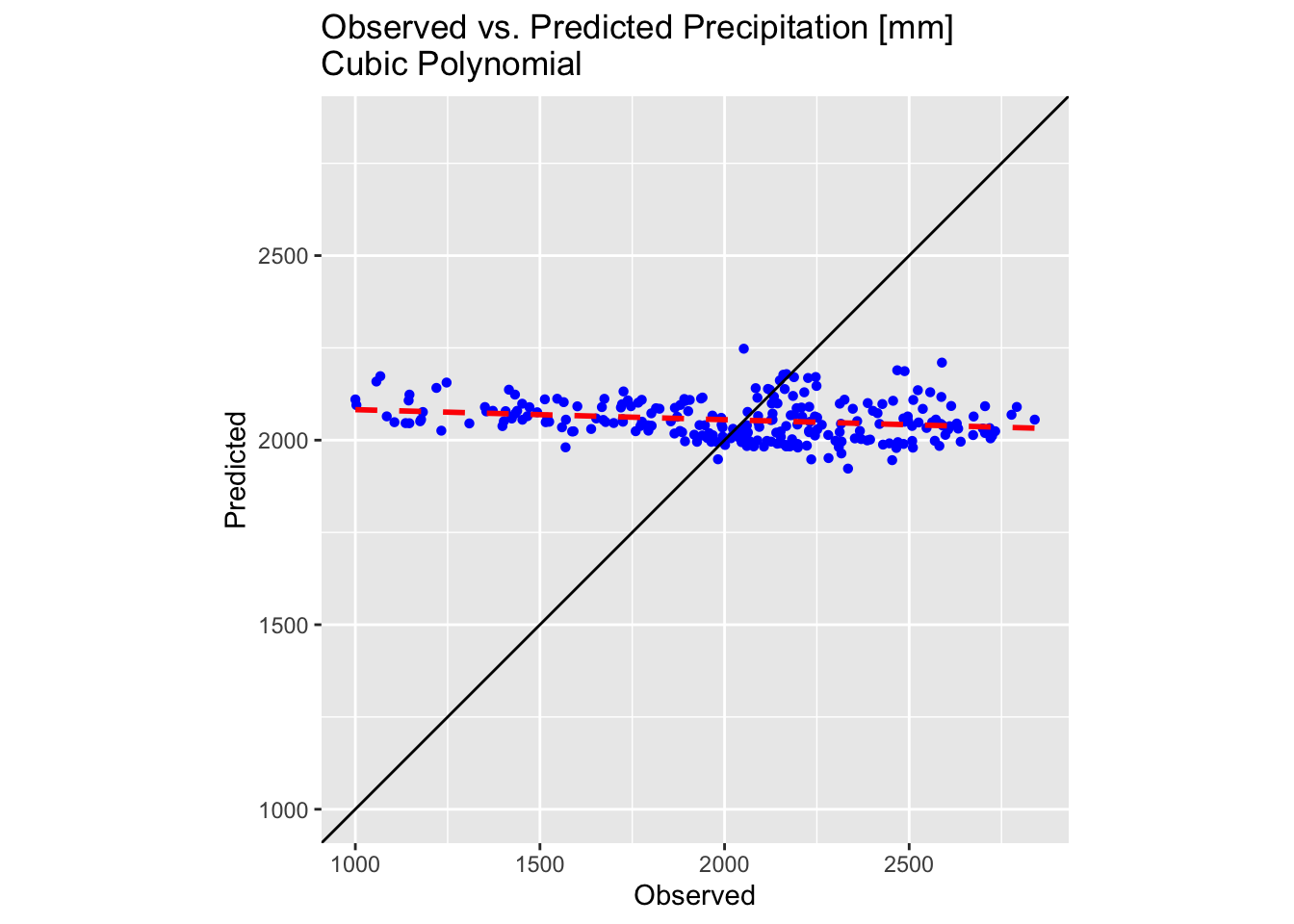

Fitting higher order polynomials

In the following examples, we repeat the above steps while fitting second- and third-order polynomial models to the precipitation data. For brevity, we omit the temperature data.

A second order (quadratic) polynomial in two dimensions is generally written as:

\[f(x,y) = ax^{2} + bxy + cy^{2} + dx + ey + f\] A third order (cubic) polynomial in two dimensions is generally written as:

\[f(x,y) = ax^{3} + bx^{2}y + cxy^{2} + dy^{3} + ex^{2} + fxy + gy^{2} + hx + iy +j\] Here, the coefficients \(a,b,c,…,j\) are constants and \(x\) and \(y\) are the x and y coordinates of the data, respectively.

The next code block fits a quadratic polynomial to the precipitation

data in training_sf:

# Extract coordinates from points and prepare data for modeling

points_coords <- st_coordinates(training_sf)

precip_df <- data.frame(x = points_coords[,1], y = points_coords[,2],

value_precip = points_sf$Precipitation)

# Fit a quadratic polynomial model (linear trend surface)

lm_model_precip <- lm(value_precip ~ x + y + I(x^2) + I(y^2) + I(x*y), data = precip_df)

# Prepare grid data for prediction by extracting coordinates

grid_coords <- st_coordinates(grid_sf_cropped)

grid_df_precip <- data.frame(x = grid_coords[,1], y = grid_coords[,2])

# Predict trend surface

grid_df_precip$pred <- predict(lm_model_precip, newdata = grid_df_precip)

# Convert the prediction grid to a terra SpatRaster using type = "xyz"

rast_precip_2 <- rast(grid_df_precip, type = "xyz")

# Assign a CRS and set variable names

crs(rast_precip_2) <- st_crs(bnd)$wkt

names(rast_precip_2) <- "Precipitation"The above code block is identical to the one used for fitting a first

order polynomial, except that the lm() function parameters

have been changed to reflect the terms of the quadratic polynomial:

To fit a cubic polynomial we modify the lm() function

parameters as follows:

lm_model_precip <- lm(value_precip ~ x + y +

I(x^2) + I(y^2) + I(x*y) +

I(x^3) + I(y^3) + I(x^2*y) + I(x*y^2),

data = precip_df)The complete code block for fitting a cubic polynomial is thus:

# Extract coordinates from points and prepare data for modeling

points_coords <- st_coordinates(training_sf)

precip_df <- data.frame(x = points_coords[,1], y = points_coords[,2],

value_precip = points_sf$Precipitation)

# Fit a quadratic polynomial model (linear trend surface)

lm_model_precip <- lm(value_precip ~ x + y +

I(x^2) + I(y^2) + I(x*y) +

I(x^3) + I(y^3) + I(x^2*y) + I(x*y^2),

data = precip_df)

# Prepare grid data for prediction by extracting coordinates

grid_coords <- st_coordinates(grid_sf_cropped)

grid_df_precip <- data.frame(x = grid_coords[,1], y = grid_coords[,2])

# Predict trend surface

grid_df_precip$pred <- predict(lm_model_precip, newdata = grid_df_precip)

# Convert the prediction grid to a terra SpatRaster using type = "xyz"

rast_precip_3 <- rast(grid_df_precip, type = "xyz")

# Assign a CRS and set variable names

crs(rast_precip_3) <- st_crs(bnd)$wkt

names(rast_precip_3) <- "Precipitation"To create the 3D plots, we repurpose the code block originally used for plotting the first-order polynomial trend surface of the precipitation data, omitting the sections that remain unchanged.

# Data for plotting

r <- rast_precip_2

# Convert the raster to a matrix for plotly.

r_mat <- terra::as.matrix(r, wide = TRUE)

# Flip the matrix vertically so that row order matches the y sequence (ymin to ymax).

r_mat <- r_mat[nrow(r_mat):1, ]

# Create an Interactive 3D Plot with plotly

# Initialize a plotly surface plot for the raster.

p_r2 <- plot_ly(x = ~x_seq_r, y = ~y_seq_r, z = ~r_mat) %>%

add_surface(showscale = TRUE, colorscale = "Portland", opacity = 0.8)

# Add the sf object to plot.

p_r2 <- p_r2 %>% add_trace(x = sf_df_r$X, y = sf_df_r$Y, z = sf_df_r$Z,

type = 'scatter3d', mode = 'markers',

marker = list(size = 3, color = 'blue'))

# Modify the layout to control the z-axis range and adjust the vertical exaggeration.

p_r2 <- p_r2 %>% layout(scene = list(

zaxis = list(title = "Precipitation [mm]",

# Alternatively, specify a custom range, e.g., range = c(0, 20)

range = c(min(sf_df_r$Z, na.rm = TRUE), max(sf_df_r$Z, na.rm = TRUE))),

aspectmode = "manual", aspectratio = list(x = 1, y = 1, z = 0.8)

))

# Display the precipitation plot

p_r2# Data for plotting

r <- rast_precip_3

# Convert the raster to a matrix for plotly.

r_mat <- terra::as.matrix(r, wide = TRUE)

# Flip the matrix vertically so that row order matches the y sequence (ymin to ymax).

r_mat <- r_mat[nrow(r_mat):1, ]

# Create an Interactive 3D Plot with plotly

# Initialize a plotly surface plot for the raster.

p_r3 <- plot_ly(x = ~x_seq_r, y = ~y_seq_r, z = ~r_mat) %>%

add_surface(showscale = TRUE, colorscale = "Portland", opacity = 0.8)

# Add the sf object to plot.

p_r3 <- p_r3 %>% add_trace(x = sf_df_r$X, y = sf_df_r$Y, z = sf_df_r$Z,

type = 'scatter3d', mode = 'markers',

marker = list(size = 3, color = 'blue'))

# Modify the layout to control the z-axis range and adjust the vertical exaggeration.

p_r3 <- p_r3 %>% layout(scene = list(

zaxis = list(title = "Precipitation [mm]",

# Alternatively, specify a custom range, e.g., range = c(0, 20)

range = c(min(sf_df_r$Z, na.rm = TRUE), max(sf_df_r$Z, na.rm = TRUE))),

aspectmode = "manual", aspectratio = list(x = 1, y = 1, z = 0.8)

))

# Display the precipitation plot

p_r3Next, we plot the testing dataset:

# Create a copy of training set so we do not overwrite the original

testing_sf_new2 <- testing_sf

# Extract predicted values from quadratic surface

r_stack <- c(rast_precip_2, rast_precip_3) # Stack the two rasters together

points_vect <- vect(testing_sf_new2) # Convert to a SpatVector for extraction

extracted_vals <- terra::extract(r_stack, points_vect) # Extract values

extracted_vals <- extracted_vals[, -1] # Remove the ID column produced by extract()

colnames(extracted_vals) <- c("Precip_quadratic", "Precip_cubic") # Set the column names

testing_sf_new2 <- cbind(testing_sf_new2, extracted_vals) # Bind the extracted raster values

# Compute the common limits for both axes from the data

lims_precip <- range(c(testing_sf_new2$Precipitation, testing_sf_new2$Precip_quadratic))

# First plot: Observed vs. Predicted for Precipitation

plot1 <- ggplot(testing_sf_new2, aes(x = Precipitation, y = Precip_quadratic)) +

geom_point(shape = 16, colour = "blue") +

geom_smooth(method = "lm", se = FALSE, linetype = "dashed", colour = "red", size = 1) +

geom_abline(intercept = 0, slope = 1, colour = "black") +

labs(title = "Observed vs. Predicted Precipitation [mm] \nQuadratic Polynomial",

x = "Observed", y = "Predicted") +

coord_fixed(ratio = 1) +

theme(aspect.ratio = 1) +

scale_x_continuous(limits = lims_precip) +

scale_y_continuous(limits = lims_precip)

# Compute the common limits for both axes from the data

lims_precip <- range(c(testing_sf_new2$Precipitation, testing_sf_new2$Precip_cubic))

# Second plot: Observed vs. Predicted for Precipitation

plot2 <- ggplot(testing_sf_new2, aes(x = Precipitation, y = Precip_cubic)) +

geom_point(shape = 16, colour = "blue") +

geom_smooth(method = "lm", se = FALSE, linetype = "dashed", colour = "red", size = 1) +

geom_abline(intercept = 0, slope = 1, colour = "black") +

labs(title = "Observed vs. Predicted Precipitation [mm] \nCubic Polynomial",

x = "Observed", y = "Predicted") +

coord_fixed(ratio = 1) +

theme(aspect.ratio = 1) +

scale_x_continuous(limits = lims_precip) +

scale_y_continuous(limits = lims_precip)

# Make plots

plot1

The results are slightly improved but still sub-optimal. While fitting even higher order polynomials might offer further improvements, several key issues must be considered:

Overfitting: high-order models often capture not only the underlying trend but also the noise in the data. This results in a model that fits the training data extremely well yet performs poorly on unseen data.

Numerical instability: as the polynomial order increases, the model can become highly sensitive to small changes in the data. This can lead to large and erratic coefficient estimates, making the model unstable.

Extrapolation problems: high-order polynomials tend to exhibit extreme oscillations, especially near the boundaries of the data range. This behaviour can result in highly unreliable predictions when extrapolating beyond the observed data.

Interpretability: with higher-order terms, it becomes challenging to interpret the influence of individual predictors. The resulting model is often more of a black box, making it difficult to explain or understand the relationship between the variables.

Increased variance: more complex models typically have higher variance, meaning they can vary widely with different training data samples. This variability can undermine the robustness of the predictions.

Multicollinearity: when using powers of the same variable as predictors, there is often a high degree of correlation among these terms. This multicollinearity can make the model’s coefficient estimates unstable and harder to interpret.

Global regression

Spatial prediction using global regression on cheap-to-measure attributes involves building a single regression model over the entire study area to predict a target variable — typically one that is expensive or difficult to measure directly — by utilising ancillary data that are inexpensive and readily available. These ancillary or predictor variables might include remote sensing products, digital elevation models, land cover classifications, or other environmental covariates.

Underlying principles of the method

A global regression model is developed by relating the target variable to the cheap-to-measure attributes. The model is termed “global” because it assumes that the relationship between the target variable and the predictors is consistent across the entire study area. For example, a linear regression model might be specified as:

\[Z(i,j) = a_{0} + a_{1}X_{1}(i,j) + \dotsm + a_{n}X_{n}(i,j)+ \epsilon(i,j)\] where \(Z(i,j)\) is the target variable at location \((i,j)\), \(X_{1}\dotsc X_{n}\) are cheap-to-measure covariates, \(a_{0},a_{1},\dotsc a_{n}\) are regression coefficients, and \(\epsilon(i,j)\) is the error term.

Once the model is calibrated using observed data from locations where both the target and predictor variables are measured, it is then applied to predict the target variable at new locations based solely on the predictor variables. Because these predictors are available for the whole study region, spatial prediction becomes possible even in areas with sparse or no direct measurements of the target variable.

Assumptions and Limitations

Global Assumption: the method assumes a spatially invariant relationship between the predictor variables and the target variable. This can be a limitation in regions where local conditions vary significantly.

Residual Variability: while the global regression captures the main trend, local deviations or spatial autocorrelation in the residuals may remain unmodelled. Often, these residuals are further modelled using geostatistical interpolation methods like kriging (a process known as regression kriging) to capture local spatial structure.

Benefits in geospatial analysis

Cost-efficiency: by relying on cheap-to-measure attributes that are often available from remote sensing or other large-scale data sources, the approach can reduce the need for extensive and expensive field measurements.

Broad coverage: the global regression approach allows for predictions over extensive areas, providing a consistent and continuous map of the target variable.

Integration with other methods: it can be integrated with spatial interpolation methods to enhance prediction accuracy, balancing the broad-scale trend with local spatial detail.

Global regression of temperature data

In the following example, we apply the global regression method to

interpolate temperature data from training_sf using the

digital elevation model r_dem.

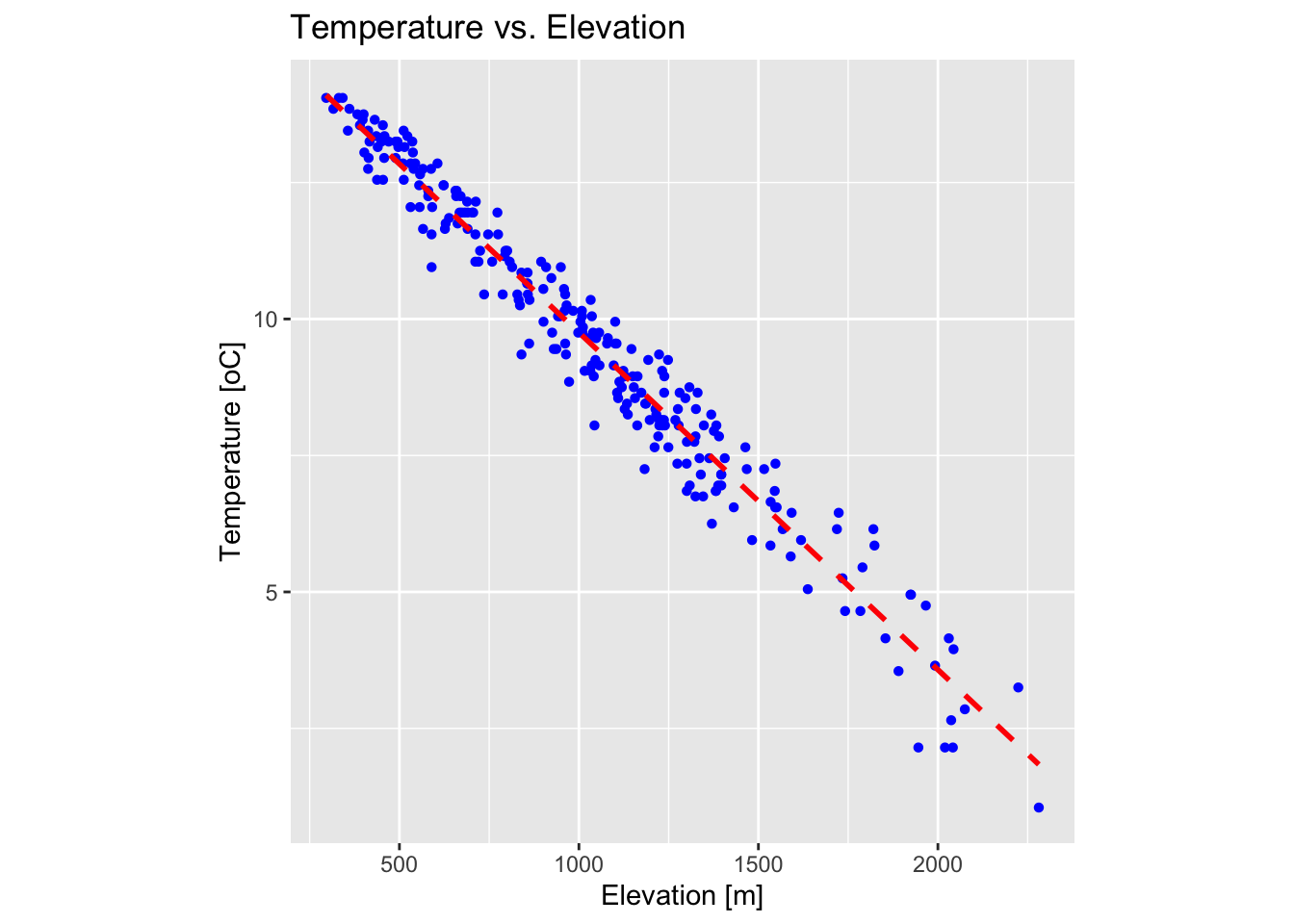

There is a strong dependence between temperature and elevation: temperature decreases with increasing elevation due to the decrease in atmospheric pressure, which leads to adiabatic cooling of rising air. The average rate of temperature decrease, known as the environmental lapse rate, is approximately 6.5°C per kilometre in the troposphere, though this can vary with local conditions such as humidity and weather patterns.

training_sf includes both temperature and elevation

data, and so we can easily verify the relationship between these two

parameters:

# Plot temperature vs. elevation

plot_temp_elev <- ggplot(testing_sf, aes(x = Elevation, y = Temperature)) +

geom_point(shape = 16, colour = "blue") +

# add linear fit to the data

geom_smooth(method = "lm", se = FALSE, linetype = "dashed", colour = "red", size = 1) +

labs(title = "Temperature vs. Elevation",

x = "Elevation [m]", y = "Temperature [oC]") +

theme(aspect.ratio = 1)

# Make plot

plot_temp_elev

The above plot confirms that there is a strong relationship between temperature and elevation in our dataset.

The next code block performs a linear regression with elevation as

the independent (predictor) variable and temperature as the dependent

(response) variable. It then uses map algebra to predict temperature

values using the digital elevation model r_dem.

# Perform linear regression

lm_model <- lm(Temperature ~ Elevation, data = training_sf)

# Extract model parameters

intercept <- coef(lm_model)[1]

slope <- coef(lm_model)[2]

# Predict temperature values using map algebra

r_temp_predicted <- r_dem * slope + intercept

# Rename variable in SpatRaster

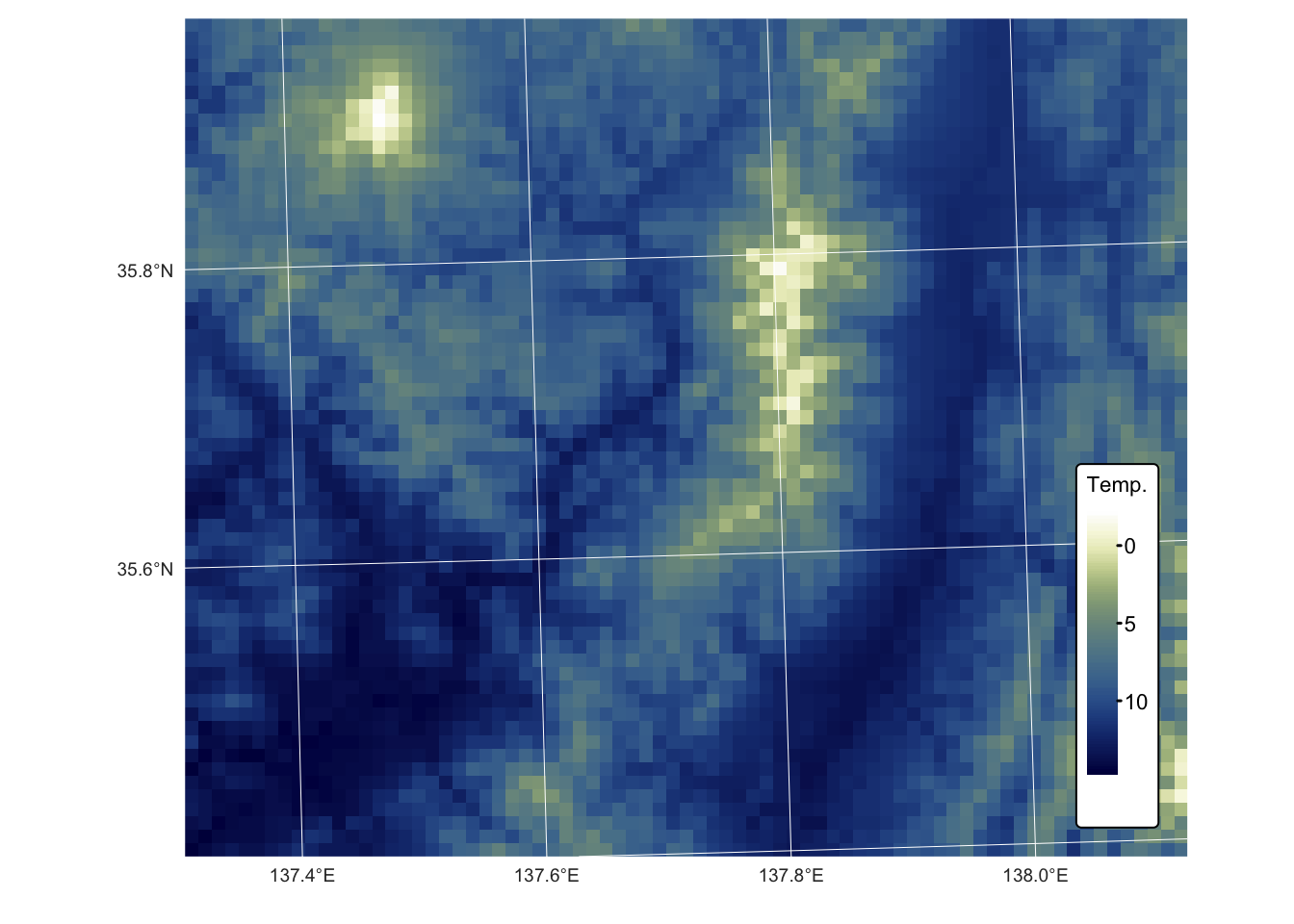

names(r_temp_predicted) <- "Temp."Plot the resulting temperature raster on a map using tmap:

tm_shape(r_temp_predicted) + tm_raster("Temp.",

col.scale = tm_scale_continuous(values = "-scico.davos")) +

tm_layout(frame = FALSE,

legend.text.size = 0.7,

legend.title.size = 0.7,

legend.position = c("right", "bottom"),

legend.bg.color = "white") +

tm_graticules(col = "white", lwd = 0.5, n.x = 5, n.y = 4) Compare with testing dataset:

Compare with testing dataset:

# Create a copy of training set so we do not overwrite the original

testing_sf_temp <- testing_sf

# Convert testing_sf_idw to a SpatVector

points_vect <- vect(testing_sf_temp)

# Extract raster values at point locations

extracted_vals <- terra::extract(r_temp_predicted, points_vect)

# Remove the ID column produced by extract()

extracted_vals <- extracted_vals[, -1, drop = FALSE]

# Rename extracted column

colnames(extracted_vals) <- "Temp_regress"

# Convert extracted values to an sf-compatible format

extracted_vals_sf <- as.data.frame(extracted_vals)

# Ensure row counts match for binding

testing_sf_temp <- cbind(testing_sf_temp, extracted_vals_sf)

# Compute the common limits for both axes from the data

lims_precip <- range(c(testing_sf_temp$Temperature, testing_sf_temp$Temp_regress))

# First plot: Observed vs. Predicted for Temperature

plot_regress <- ggplot(testing_sf_temp, aes(x = Temperature, y = Temp_regress)) +

geom_point(shape = 16, colour = "blue") +

geom_smooth(method = "lm", se = FALSE, linetype = "dashed", colour = "red", size = 1) +

geom_abline(intercept = 0, slope = 1, colour = "black") +

labs(title = "Observed vs. Predicted Temperature [oC] \nGlobal regression with DEM",

x = "Observed", y = "Predicted") +

coord_fixed(ratio = 1) +

theme(aspect.ratio = 1) +

scale_x_continuous(limits = lims_precip) +

scale_y_continuous(limits = lims_precip)

# Make plot

plot_regress

Both the map and the plot above confirm a satisfactory outcome, with observed and predicted values differing by only a few degrees Celsius.

IDW interpolation

Inverse Distance Weighted (IDW) interpolation is a deterministic spatial interpolation method used to estimate values at unmeasured locations based on the values of nearby measured points. The underlying principle is that points closer in space are more alike than those further away.

Weighting by Distance

Each known data point is assigned a weight that is inversely proportional to its distance from the target location. Typically, the weight is calculated using the formula:

\[w_{i} = \frac{1}{d^{p}_{i}}\] where \(d_{i}\) is the distance from the known point to the estimation point, and \(p\) is a power parameter that controls how quickly the influence of a point decreases with distance. A higher \(p\) emphasises nearer points even more.

The estimated value at an unknown location is computed as a weighted average of the known values:

\[z(x,y) = \frac{\sum_{i=1}^{n}w_{i}z_{i}}{\sum_{i=1}^{n}w_{i}}\]

Here, \(z_{i}\) represents the known values at different points and \(w_{i}\) is the weight. The summation is taken over all the points used in the interpolation.

Advantages and limitations:

The method is relatively simple to implement and understand, and it works well when the spatial distribution of known data points is fairly uniform. However, it can sometimes lead to artefacts (e.g., bullseye effects) if data points are clustered or if there are abrupt spatial changes that the method cannot account for.

The next R code block performs IDW interpolation on the precipitation

values from training_sf:

# Extract coordinates from training points and prepare data for IDW interpolation

points_coords <- st_coordinates(training_sf)

precip_df <- data.frame(x = points_coords[,1], y = points_coords[,2],

value_precip = training_sf$Precipitation)

# Convert to sf spatial object

precip_sf <- st_as_sf(precip_df, coords = c("x", "y"), crs = st_crs(training_sf))

# Prepare grid data for interpolation

grid_coords <- st_coordinates(grid_sf_cropped)

grid_df_precip <- data.frame(x = grid_coords[,1], y = grid_coords[,2])

# Convert grid to sf spatial object

grid_sf <-

st_as_sf(grid_df_precip, coords = c("x", "y"), crs = st_crs(grid_sf_cropped))

# Perform IDW interpolation

idw_result <-

idw(formula = value_precip ~ 1, locations = precip_sf, newdata = grid_sf, idp = 2)## [inverse distance weighted interpolation]# Extract predicted values

grid_df_precip$pred <- idw_result$var1.pred

# Convert the prediction grid to a terra SpatRaster using type = "xyz"

rast_precip_idw <- rast(grid_df_precip, type = "xyz")

# Assign a CRS and set variable names

crs(rast_precip_idw) <- st_crs(bnd)$wkt

names(rast_precip_idw) <- "Precipitation"Plot the resulting surface on an interactive 3D plot:

# Data for plotting

r <- rast_precip_idw

# Convert the raster to a matrix for plotly.

r_mat <- terra::as.matrix(r, wide = TRUE)

# Flip the matrix vertically so that row order matches the y sequence (ymin to ymax).

r_mat <- r_mat[nrow(r_mat):1, ]

# Create an Interactive 3D Plot with plotly

# Initialize a plotly surface plot for the raster.

p_idw <- plot_ly(x = ~x_seq_r, y = ~y_seq_r, z = ~r_mat) %>%

add_surface(showscale = TRUE, colorscale = "Portland", opacity = 0.8)

# Add the sf object to plot.

p_idw <- p_idw %>% add_trace(x = sf_df_r$X, y = sf_df_r$Y, z = sf_df_r$Z,

type = 'scatter3d', mode = 'markers',

marker = list(size = 3, color = 'blue'))

# Modify the layout to control the z-axis range and adjust the vertical exaggeration.

p_idw <- p_idw %>% layout(scene = list(

zaxis = list(title = "Precipitation [mm]",

# Alternatively, specify a custom range, e.g., range = c(0, 20)

range = c(min(sf_df_r$Z, na.rm = TRUE), max(sf_df_r$Z, na.rm = TRUE))),

aspectmode = "manual", aspectratio = list(x = 1, y = 1, z = 0.5)

))

# Display the precipitation plot

p_idwNext, plot the testing dataset:

# Create a copy of training set so we do not overwrite the original

testing_sf_idw <- testing_sf

# Convert testing_sf_idw to a SpatVector

points_vect <- vect(testing_sf_idw)

# Extract raster values at point locations

extracted_vals <- terra::extract(rast_precip_idw, points_vect)

# Remove the ID column produced by extract()

extracted_vals <- extracted_vals[, -1, drop = FALSE]

# Rename extracted column

colnames(extracted_vals) <- "Precip_idw"

# Convert extracted values to an sf-compatible format

extracted_vals_sf <- as.data.frame(extracted_vals)

# Ensure row counts match for binding

testing_sf_idw <- cbind(testing_sf_idw, extracted_vals_sf)

# Compute the common limits for both axes from the data

lims_precip <- range(c(testing_sf_idw$Precipitation, testing_sf_idw$Precip_idw))

# First plot: Observed vs. Predicted for Precipitation

plot_idw <- ggplot(testing_sf_idw, aes(x = Precipitation, y = Precip_idw)) +

geom_point(shape = 16, colour = "blue") +

geom_smooth(method = "lm", se = FALSE, linetype = "dashed", colour = "red", size = 1) +

geom_abline(intercept = 0, slope = 1, colour = "black") +

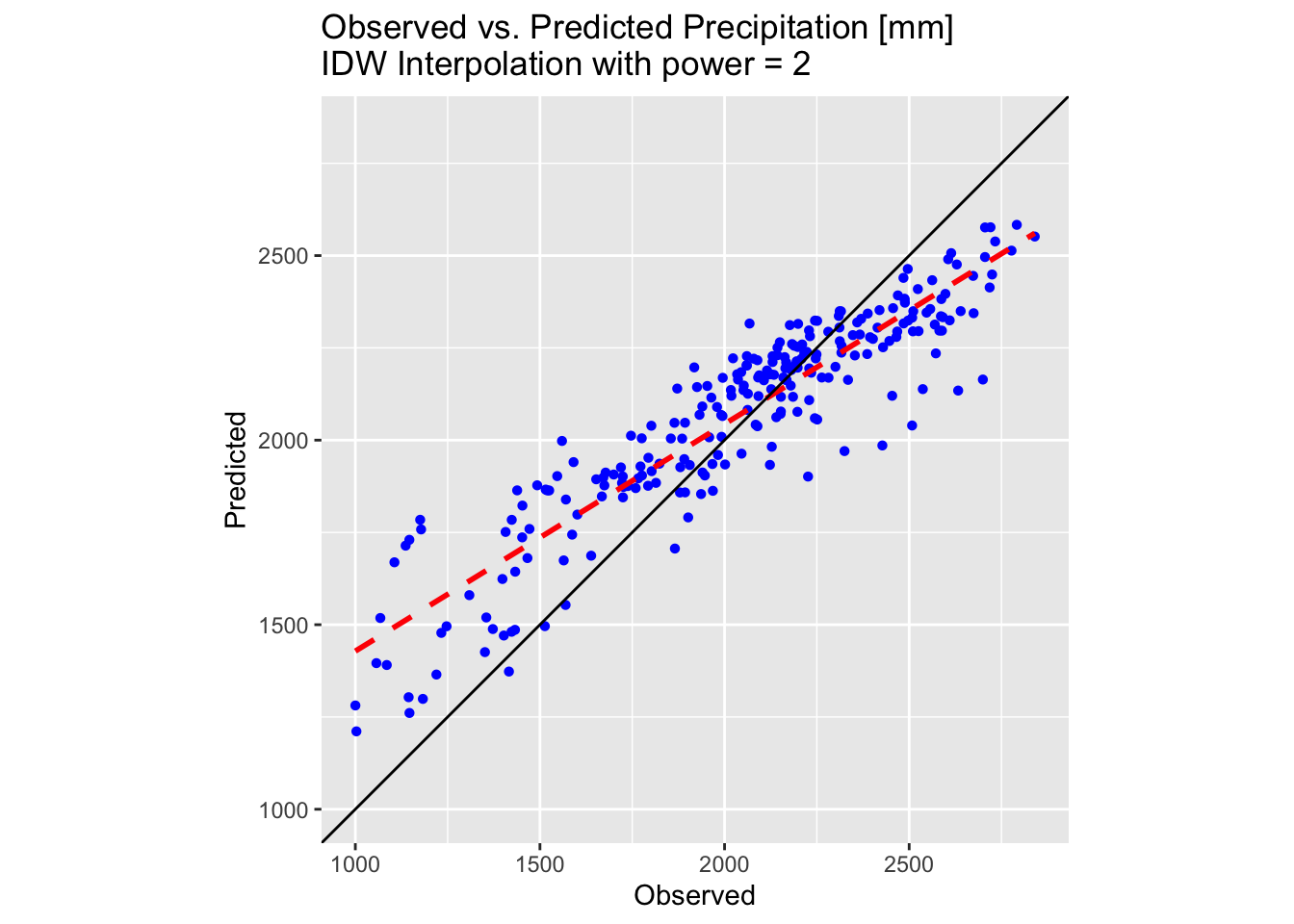

labs(title = "Observed vs. Predicted Precipitation [mm] \nIDW Interpolation with power = 2",

x = "Observed", y = "Predicted") +

coord_fixed(ratio = 1) +

theme(aspect.ratio = 1) +

scale_x_continuous(limits = lims_precip) +

scale_y_continuous(limits = lims_precip)

# Make plot

plot_idw

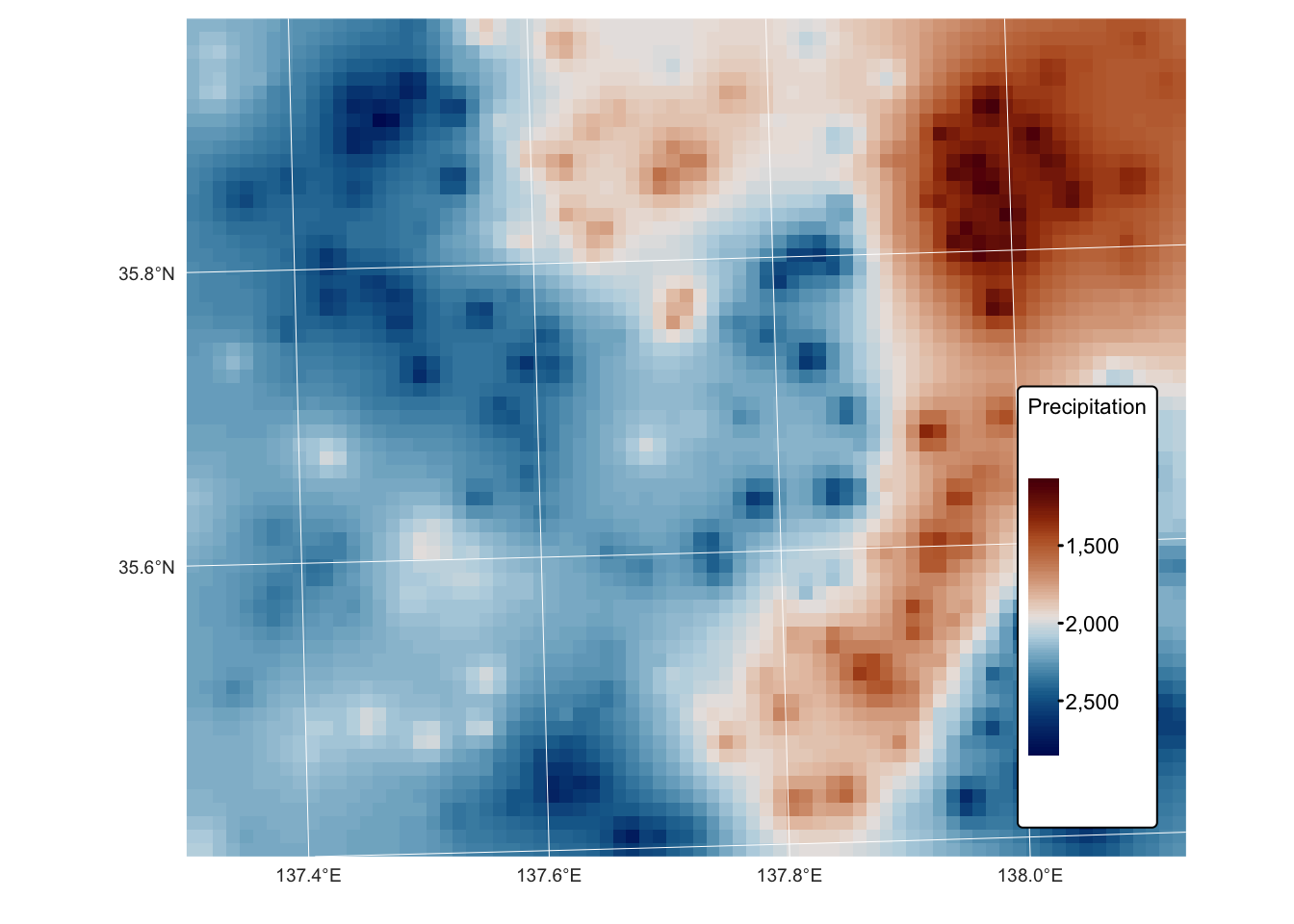

Examining the above 3D plot alongside the scatter plot of the testing dataset reveals that the IDW interpolation performs significantly better than the polynomial methods attempted earlier. However, the predicted surface appears rather “pointy”, displaying the bullseye effect noted previously.

The latter is most clear when the surface is plotted on a map:

tm_shape(rast_precip_idw) + tm_raster("Precipitation",

col.scale = tm_scale_continuous(values = "-scico.vik")) +

tm_layout(frame = FALSE,

legend.text.size = 0.7,

legend.title.size = 0.7,

legend.position = c("right", "bottom"),

legend.bg.color = "white") +

tm_graticules(col = "white", lwd = 0.5, n.x = 5, n.y = 4)

The default value for \(p\) (the

power parameter) in the idw() function is 2 (set using

idp = 2). The value of this parameter can be modified, and

in general:

- values of

idp < 2will produce a smoother interpolation with distant points having more influence, and - values of

idp > 2will produce a sharper local influence, with nearby points dominating more.

The idw() function in gstat also allows

further customisation by limiting the size of the search radius. This is

achieved by using the nmax and maxdist

parameters:

nmax: the maximum number of nearest neighbours to consider for interpolation.maxdist: the maximum search radius (distance in CRS units) within which points are considered.

The following block of code illustrates how to use these parameters together:

# Perform IDW interpolation with a limited search window

idw_result <- idw(formula = value_precip ~ 1,

locations = precip_sf,

newdata = grid_sf,

idp = 2, # Power parameter (changeable)

nmax = 10, # Consider only the 10 nearest points

maxdist = 50000) # Maximum search distance (e.g., 50 km)In the next example, we will set the idp = 4 to reduce

the bullseye effect and produce a smoother, yet more accurate,

surface:

# Perform IDW interpolation with power = 4

idw_result4 <-

idw(formula = value_precip ~ 1, locations = precip_sf, newdata = grid_sf, idp = 4)## [inverse distance weighted interpolation]# Extract predicted values

grid_df_precip$pred <- idw_result4$var1.pred

# Convert the prediction grid to a terra SpatRaster using type = "xyz"

rast_precip_idw4 <- rast(grid_df_precip, type = "xyz")

# Assign a CRS and set variable names

crs(rast_precip_idw4) <- st_crs(bnd)$wkt

names(rast_precip_idw4) <- "Precipitation"Plot in 3D:

# Data for plotting

r <- rast_precip_idw4

# Convert the raster to a matrix for plotly.

r_mat <- terra::as.matrix(r, wide = TRUE)

# Flip the matrix vertically so that row order matches the y sequence (ymin to ymax).

r_mat <- r_mat[nrow(r_mat):1, ]

# Create an Interactive 3D Plot with plotly

# Initialize a plotly surface plot for the raster.

p_idw <- plot_ly(x = ~x_seq_r, y = ~y_seq_r, z = ~r_mat) %>%

add_surface(showscale = TRUE, colorscale = "Portland", opacity = 0.8)

# Add the sf object to plot.

p_idw <- p_idw %>% add_trace(x = sf_df_r$X, y = sf_df_r$Y, z = sf_df_r$Z,

type = 'scatter3d', mode = 'markers',

marker = list(size = 3, color = 'blue'))

# Modify the layout to control the z-axis range and adjust the vertical exaggeration.

p_idw <- p_idw %>% layout(scene = list(

zaxis = list(title = "Precipitation [mm]",

# Alternatively, specify a custom range, e.g., range = c(0, 20)

range = c(min(sf_df_r$Z, na.rm = TRUE), max(sf_df_r$Z, na.rm = TRUE))),

aspectmode = "manual", aspectratio = list(x = 1, y = 1, z = 0.5)

))

# Display the precipitation plot

p_idwPlot testing dataset:

# Create a copy of training set so we do not overwrite the original

testing_sf_idw4 <- testing_sf

# Convert testing_sf_idw to a SpatVector

points_vect <- vect(testing_sf_idw4)

# Extract raster values at point locations

extracted_vals <- terra::extract(rast_precip_idw4, points_vect)

# Remove the ID column produced by extract()

extracted_vals <- extracted_vals[, -1, drop = FALSE]

# Rename extracted column

colnames(extracted_vals) <- "Precip_idw"

# Convert extracted values to an sf-compatible format

extracted_vals_sf <- as.data.frame(extracted_vals)

# Ensure row counts match for binding

testing_sf_idw4 <- cbind(testing_sf_idw4, extracted_vals_sf)

# Compute the common limits for both axes from the data

lims_precip <- range(c(testing_sf_idw4$Precipitation, testing_sf_idw4$Precip_idw))

# First plot: Observed vs. Predicted for Precipitation

plot_idw <- ggplot(testing_sf_idw4, aes(x = Precipitation, y = Precip_idw)) +

geom_point(shape = 16, colour = "blue") +

geom_smooth(method = "lm", se = FALSE, linetype = "dashed", colour = "red", size = 1) +

geom_abline(intercept = 0, slope = 1, colour = "black") +

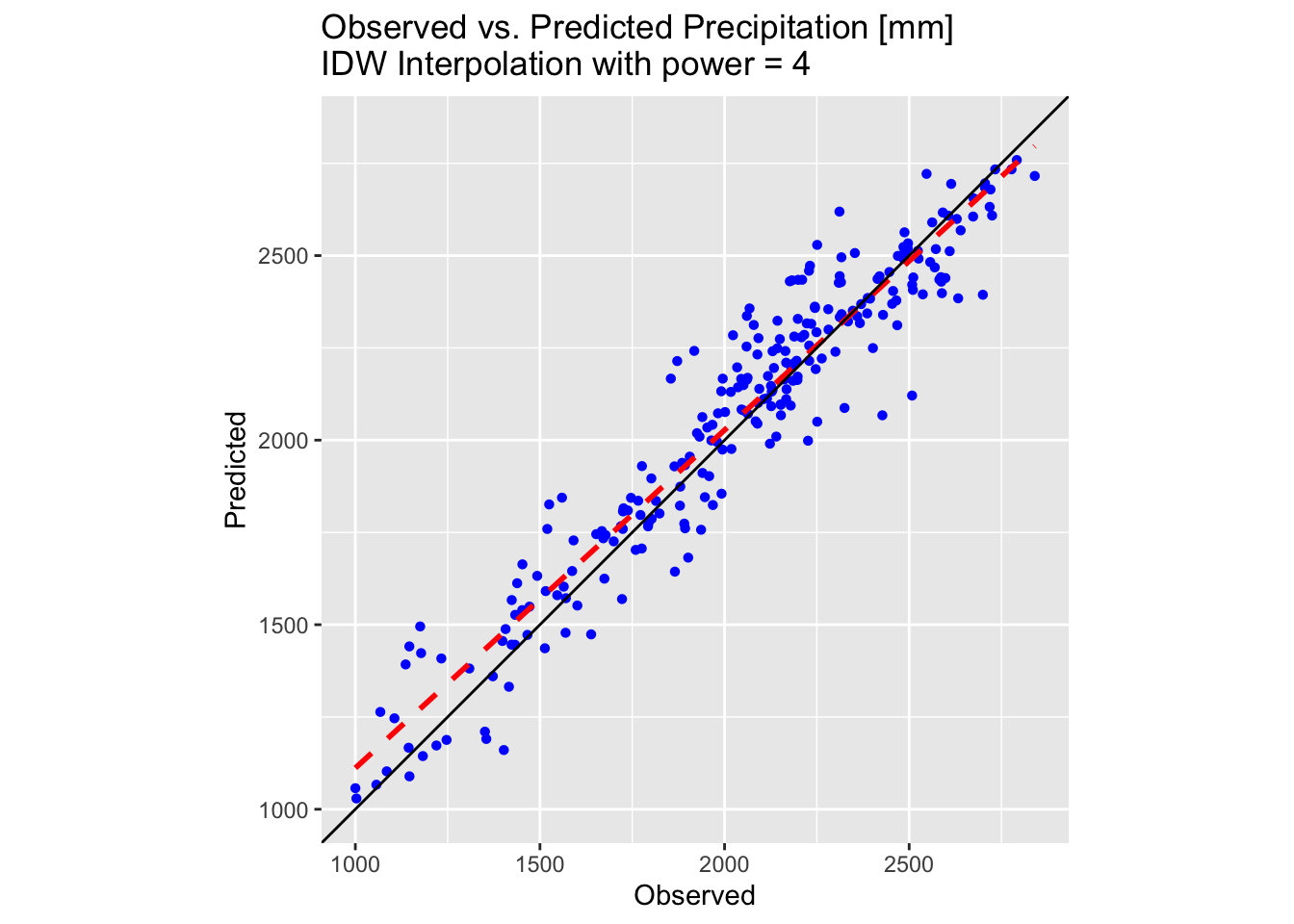

labs(title = "Observed vs. Predicted Precipitation [mm] \nIDW Interpolation with power = 4",

x = "Observed", y = "Predicted") +

coord_fixed(ratio = 1) +

theme(aspect.ratio = 1) +

scale_x_continuous(limits = lims_precip) +

scale_y_continuous(limits = lims_precip)

# Make plot

plot_idw

Plot on a map using tmap:

tm_shape(rast_precip_idw4) + tm_raster("Precipitation",

col.scale = tm_scale_continuous(values = "-scico.vik")) +

tm_layout(frame = FALSE,

legend.text.size = 0.7,

legend.title.size = 0.7,

legend.position = c("right", "bottom"),

legend.bg.color = "white") +

tm_graticules(col = "white", lwd = 0.5, n.x = 5, n.y = 4)

As shown by the above plots and map, increasing the idp

parameter to 4 produces a better-fitting surface for the precipitation

values in training_sf.

Kriging

Kriging is a geostatistical interpolation technique used to predict values at unmeasured locations based on spatially correlated data. Named after South African mining engineer Danie Krige, this method was formalised by Georges Matheron in the 1960s and has since been widely applied in fields such as geology, environmental science, meteorology, and geographic information systems (GIS).

Kriging differs from simpler interpolation methods (e.g., inverse distance weighting or spline interpolation) by incorporating spatial autocorrelation — the statistical relationship between measured points — to make more reliable predictions. It is based on the theory of regionalised variables, assuming that spatially distributed data exhibit a degree of continuity and structure.

At its core, Kriging uses a weighted linear combination of known data points to estimate unknown values, where the weights are determined through a variogram model. The variogram quantifies how data values change with distance, allowing Kriging to provide not only the best linear unbiased estimate but also an estimation of the associated uncertainty.

Several variants of Kriging exist, including:

- Ordinary Kriging – Assumes a constant but unknown mean and relies on the variogram to model spatial relationships.

- Simple Kriging – Assumes a known, constant mean across the study area.

- Universal Kriging – Accounts for deterministic trends in the data by incorporating external explanatory variables.

- Indicator Kriging – Used for categorical data by transforming it into binary indicators.

- Co-Kriging – Incorporates multiple correlated variables to improve estimation accuracy.

Kriging is particularly useful when dealing with sparse or irregularly spaced data, providing both interpolated values and a measure of prediction confidence. However, it requires careful variogram modelling and assumptions about spatial stationarity, making it more complex than deterministic interpolation methods.

Calculating the sample semivariogram

The semivariogram is a function that quantifies how similar or dissimilar data points are based on the distance between them. It is defined as:

\[\gamma(h) = \frac{1}{2}\mathbb{E}\{[Z(x)-Z(x+h)]^2\}\]

where:

- \(\gamma(h)\) is the semivariance for a lag distance \(h\),

- \(Z(x)\) is the value of the variable at location \(x\),

- \(Z(x+h)\) is the value at a location separated by a vector \(h\).

In kriging, the semivariogram serves to model the spatial autocorrelation of the data. The key steps of the process, include:

Empirical estimation: The semivariogram is first computed empirically by evaluating the half average squared differences between pairs of data points separated by various distances (lag bins).

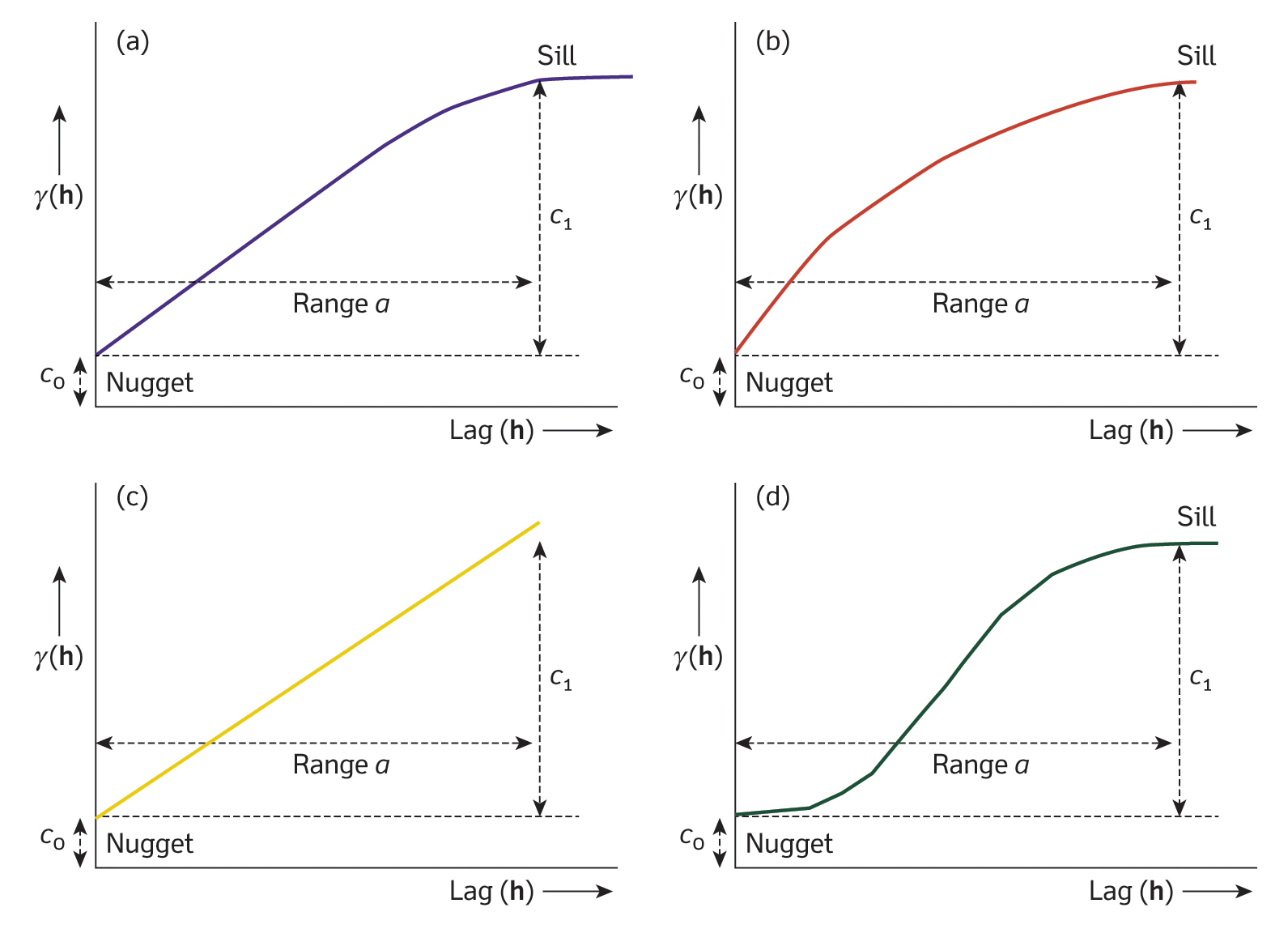

Modelling the spatial structure: A theoretical model (e.g. spherical, exponential, linear, Gaussian) is then fitted to the empirical semivariogram. This model characterises how the data’s variance changes with distance.

Key parameters:

Nugget: represents the semivariance at zero distance and captures both measurement error and microscale variability that are not explained by larger-scale spatial trends. A high nugget value suggests that even very closely located samples can exhibit significant differences, indicating local variability or errors.

Sill: is the plateau that the semivariogram reaches, marking the maximum variance beyond which increases in separation distance do not add further variability. This indicates that spatial correlation has become negligible, and the data variability is fully accounted for at this level.

Range: is defined as the distance at which the semivariogram reaches the sill, beyond which data points are considered spatially independent. It provides an estimate of the spatial extent over which autocorrelation exists, meaning that observations further apart than this distance do not influence each other significantly.

Determining kriging weights:

The fitted semivariogram model is used to derive the weights for kriging. These weights determine how much influence each neighbouring sample point will have on the estimated value at an unsampled location, ensuring that the spatial dependence captured by the semivariogram is respected.

Fig. 2: Examples of the most commonly used variogram models: (a) spherical, (b) exponential, (c) linear, (d) Gaussian. Reproduced from: Burrough et al (2015), Principles of Geographical Information Systems (3rd ed.), Oxford University Press.

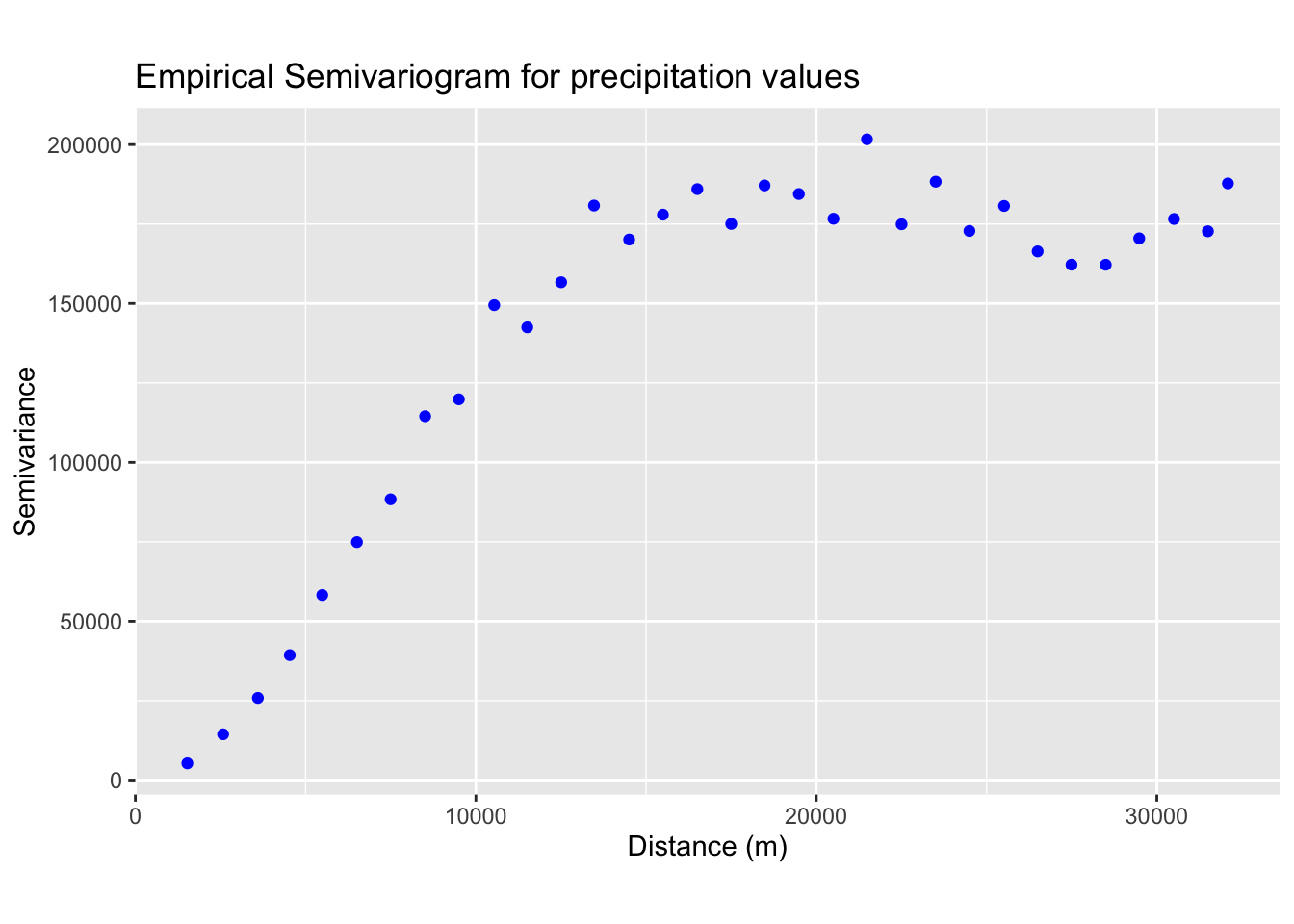

In the next block of R code we compute and plot the empirical

semivariogram for the precipitation data from

training_sf:

# Create a gstat object using the sf point data directly

# The formula precip ~ 1 indicates that no auxiliary predictors are used

# and the mean is assumed constant over the study area.

gstat_obj <- gstat(formula = Precipitation ~ 1, data = training_sf)

# Compute the empirical semivariogram

# The width parameter defines the lag distance binning

# We use 1 km for width; this should be adjusted as needed

emp_variogram <- variogram(gstat_obj, width = 1000)

# Plot empirical semivariogram

ggplot(emp_variogram, aes(x = dist, y = gamma)) +

geom_point(colour = "blue") +

labs(title = "Empirical Semivariogram for precipitation values",

x = "Distance (m)", y = "Semivariance") +

theme(aspect.ratio = 0.6)

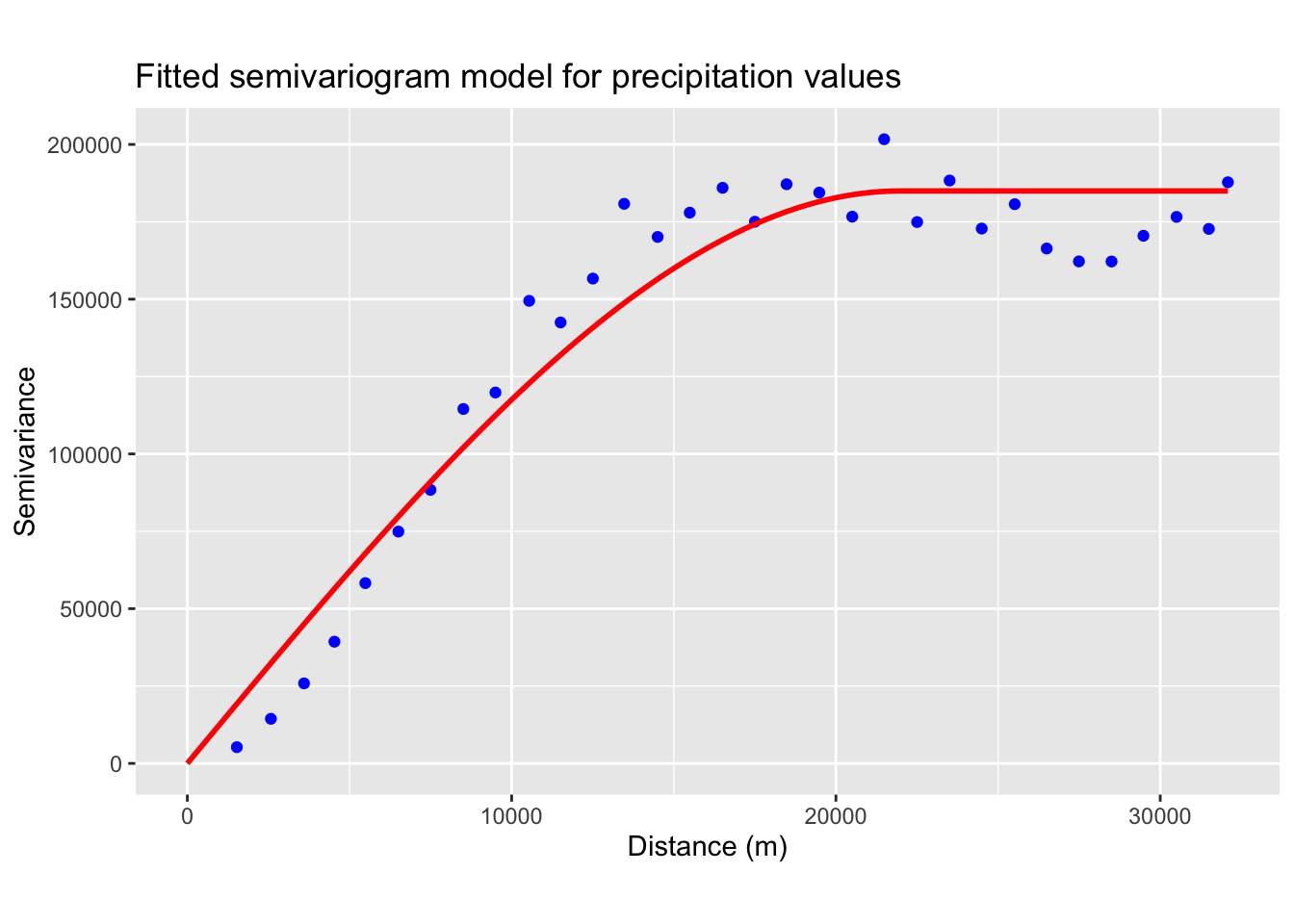

Next, we fit a theoretical model to the empirical semivariogram. The plot above suggests that the data may be best represented by a spherical model (see Fig. 2a). From the plot, we estimate the values for the nugget, sill, and range.

# Fit a spherical variogram model; adjust psill, range, and nugget as appropriate

model_variogram <-

fit.variogram(emp_variogram,

model = vgm(psill = 150000, model = "Sph", range = 15000, nugget = 0))

# Plot empirical semivariogram

vgm_line <- variogramLine(model_variogram, maxdist = max(emp_variogram$dist), n = 100)

ggplot() +

geom_point(data = emp_variogram, aes(x = dist, y = gamma), colour = "blue") +

geom_line(data = vgm_line, aes(x = dist, y = gamma), color = "red", size = 1) +

labs(title = "Fitted semivariogram model for precipitation values",

x = "Distance (m)", y = "Semivariance") +

theme(aspect.ratio = 0.6)

Ordinary Kriging

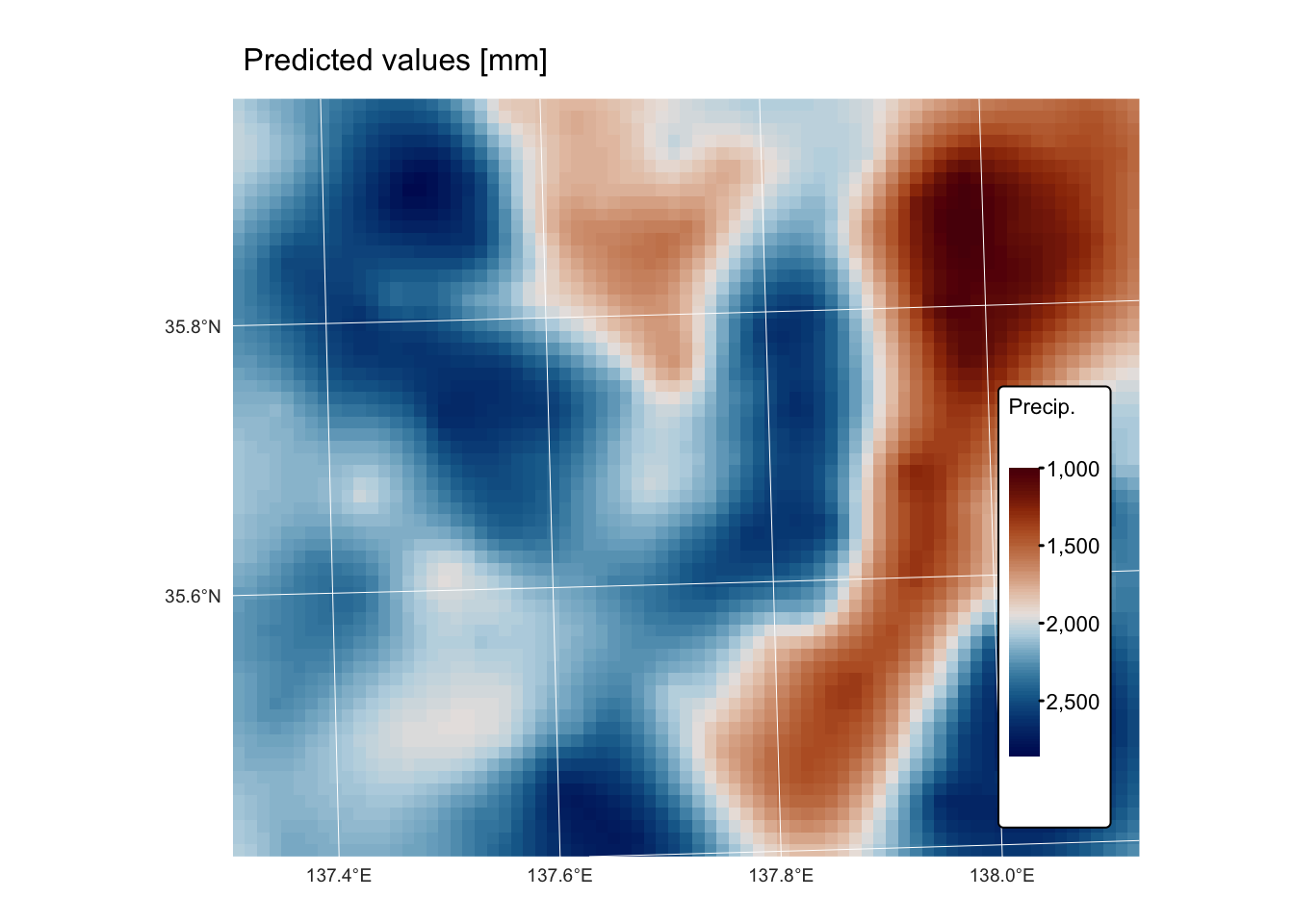

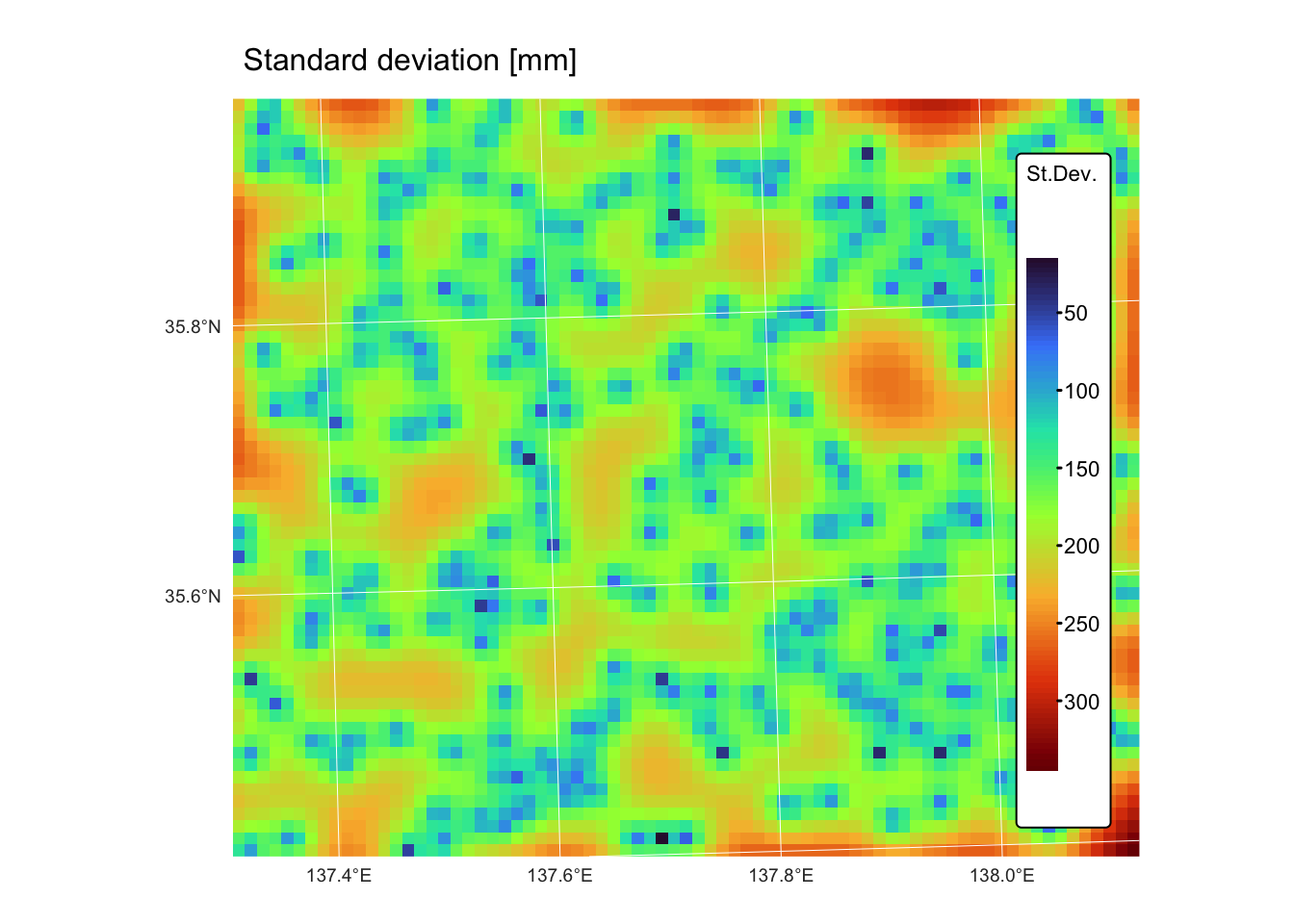

In the next block of R code, we apply the fitted variogram model to interpolate precipitation values over the previously generated grid (see Fig. 1).

Ordinary kriging is one of the most commonly used forms of kriging because it assumes that the mean is constant but unknown across the neighbourhood of interest. In that sense, it is often regarded as a “basic” or “default” kriging method. However, if one considers mathematical simplicity, simple kriging is actually simpler since it assumes that the mean is known a priori. In practice, ordinary kriging is favoured for its flexibility when the true mean is not known, even though it involves estimating that constant mean from the data.

# Extract coordinates from training points and prepare data for interpolation

points_coords <- st_coordinates(training_sf)

precip_df <- data.frame(x = points_coords[,1], y = points_coords[,2],

value_precip = training_sf$Precipitation)

# Convert to sf spatial object

precip_sf <- st_as_sf(precip_df, coords = c("x", "y"), crs = st_crs(training_sf))

# Perform ordinary kriging over the grid;

# The output will include prediction (var1.pred) and variance (var1.var)

kriging_result <- krige(formula = value_precip ~ 1,

locations = precip_sf,

newdata = grid_sf_cropped,

model = model_variogram)## [using ordinary kriging]# Convert the kriging results (sf object) to a terra SpatVector

kriging_vect <- vect(kriging_result)

# Create a raster template based on the bounding polygon (bnd) with desired resolution

# Here we set resolution to 1km; adjust resolution as needed

raster_template <- rast(bnd, resolution = c(1000, 1000))

# Rasterize the predictions using the fields 'var1.pred' and 'var1.var'

kriging_raster_pred <- rasterize(kriging_vect, raster_template, field = "var1.pred")

kriging_raster_var <- rasterize(kriging_vect, raster_template, field = "var1.var")

# Convert variance to standard deviation

kriging_raster_var <- sqrt(kriging_raster_var)

# Assign a CRS and set variable names

crs(kriging_raster_pred) <- st_crs(bnd)$wkt

crs(kriging_raster_var) <- st_crs(bnd)$wkt

names(kriging_raster_pred) <- "Prediction"

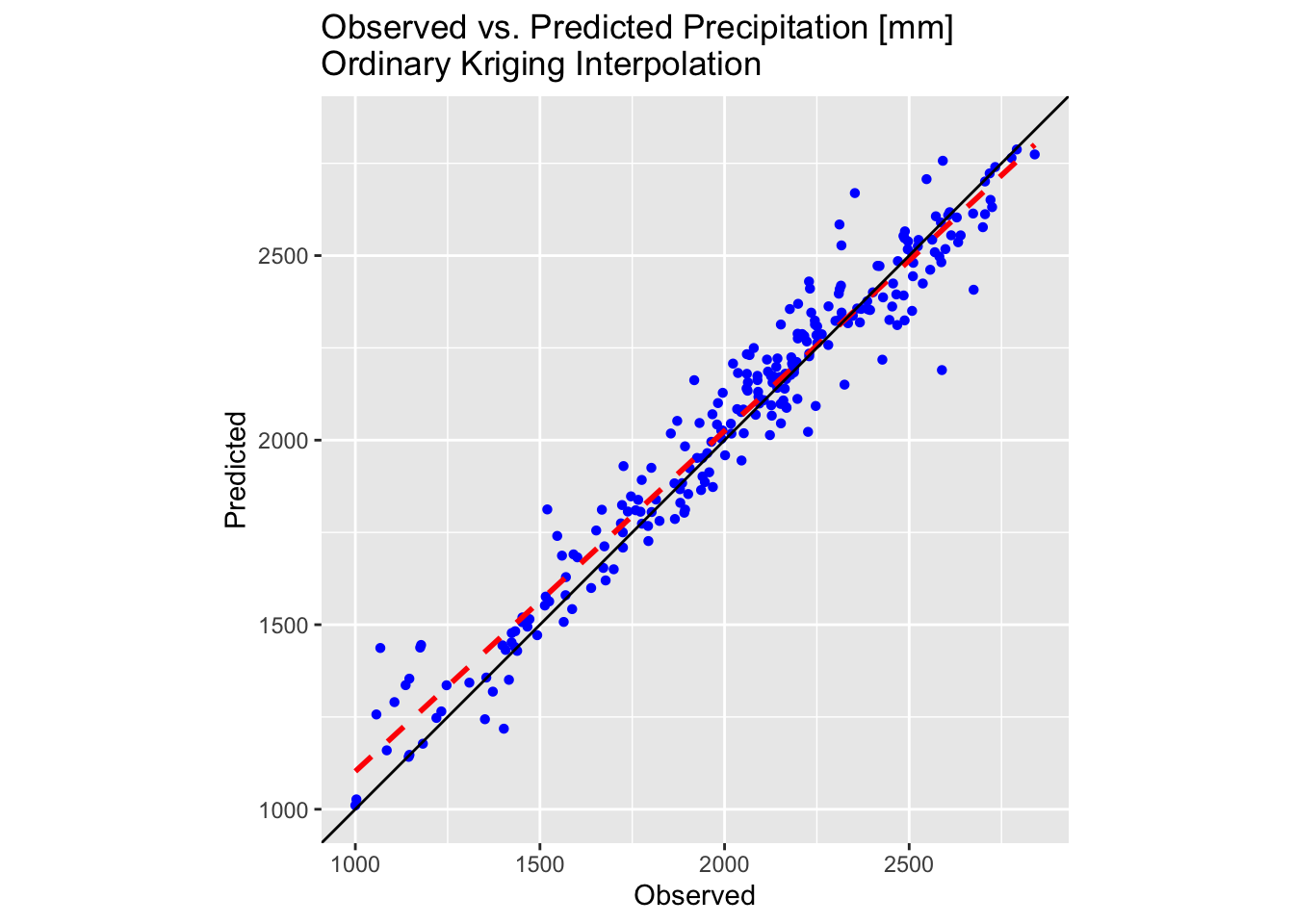

names(kriging_raster_var) <- "Variance"Next, we will create 3D plots to visualize the results, compare the predicted values with the testing dataset, and map both the predictions and their associated variance using tmap.

# Data for plotting

r <- kriging_raster_pred

# Convert the raster to a matrix for plotly.

r_mat <- terra::as.matrix(r, wide = TRUE)

# Flip the matrix vertically so that row order matches the y sequence (ymin to ymax).

r_mat <- r_mat[nrow(r_mat):1, ]

# Create an Interactive 3D Plot with plotly

# Initialize a plotly surface plot for the raster.

p_krige <- plot_ly(x = ~x_seq_r, y = ~y_seq_r, z = ~r_mat) %>%

add_surface(showscale = TRUE, colorscale = "Portland", opacity = 0.8)

# Add the sf object to plot.

p_krige <- p_krige %>% add_trace(x = sf_df_r$X, y = sf_df_r$Y, z = sf_df_r$Z,

type = 'scatter3d', mode = 'markers',

marker = list(size = 3, color = 'blue'))

# Modify the layout to control the z-axis range and adjust the vertical exaggeration.

p_krige <- p_krige %>% layout(scene = list(

zaxis = list(title = "Precipitation [mm]",

# Alternatively, specify a custom range, e.g., range = c(0, 20)

range = c(min(sf_df_r$Z, na.rm = TRUE), max(sf_df_r$Z, na.rm = TRUE))),

aspectmode = "manual", aspectratio = list(x = 1, y = 1, z = 0.5)

))

# Display the precipitation plot

p_krige# Create a copy of training set so we do not overwrite the original

testing_sf_krige <- testing_sf

# Convert testing_sf_idw to a SpatVector

points_vect <- vect(testing_sf_krige)

# Extract raster values at point locations

extracted_vals <- terra::extract(kriging_raster_pred, points_vect)

# Remove the ID column produced by extract()

extracted_vals <- extracted_vals[, -1, drop = FALSE]

# Rename extracted column

colnames(extracted_vals) <- "Precip_krige"

# Convert extracted values to an sf-compatible format

extracted_vals_sf <- as.data.frame(extracted_vals)

# Ensure row counts match for binding

testing_sf_krige <- cbind(testing_sf_krige, extracted_vals_sf)

# Compute the common limits for both axes from the data

lims_precip <- range(c(testing_sf_krige$Precipitation, testing_sf_krige$Precip_krige))

# First plot: Observed vs. Predicted for Precipitation

plot_krige <- ggplot(testing_sf_krige, aes(x = Precipitation, y = Precip_krige)) +

geom_point(shape = 16, colour = "blue") +

geom_smooth(method = "lm", se = FALSE, linetype = "dashed", colour = "red", size = 1) +

geom_abline(intercept = 0, slope = 1, colour = "black") +

labs(title = "Observed vs. Predicted Precipitation [mm] \nOrdinary Kriging Interpolation",

x = "Observed", y = "Predicted") +

coord_fixed(ratio = 1) +

theme(aspect.ratio = 1) +

scale_x_continuous(limits = lims_precip) +

scale_y_continuous(limits = lims_precip)

# Make plot

plot_krige

map_predict <-

tm_shape(kriging_raster_pred) + tm_raster("Prediction",

col.scale = tm_scale_continuous(values = "-scico.vik"),

tm_legend(title = "Precip.")) +

tm_layout(frame = FALSE,

legend.text.size = 0.7,

legend.title.size = 0.7,

legend.position = c("right", "bottom"),

legend.bg.color = "white") +

tm_graticules(col = "white", lwd = 0.5, n.x = 5, n.y = 4) +

tm_title("Predicted values [mm]", size = 1)

map_var <-

tm_shape(kriging_raster_var) + tm_raster("Variance",

col.scale = tm_scale_continuous(values = "turbo"),

tm_legend(title = "St.Dev.")) +

tm_layout(frame = FALSE,

legend.text.size = 0.7,

legend.title.size = 0.7,

legend.position = c("right", "bottom"),

legend.bg.color = "white") +

tm_graticules(col = "white", lwd = 0.5, n.x = 5, n.y = 4) +

tm_title("Standard deviation [mm]", size = 1)

map_predict

Operations on continuous fields

Spatial analysis of continuous fields involves a range of operations that allow us to understand, predict, and visualise phenomena that vary continuously over space.

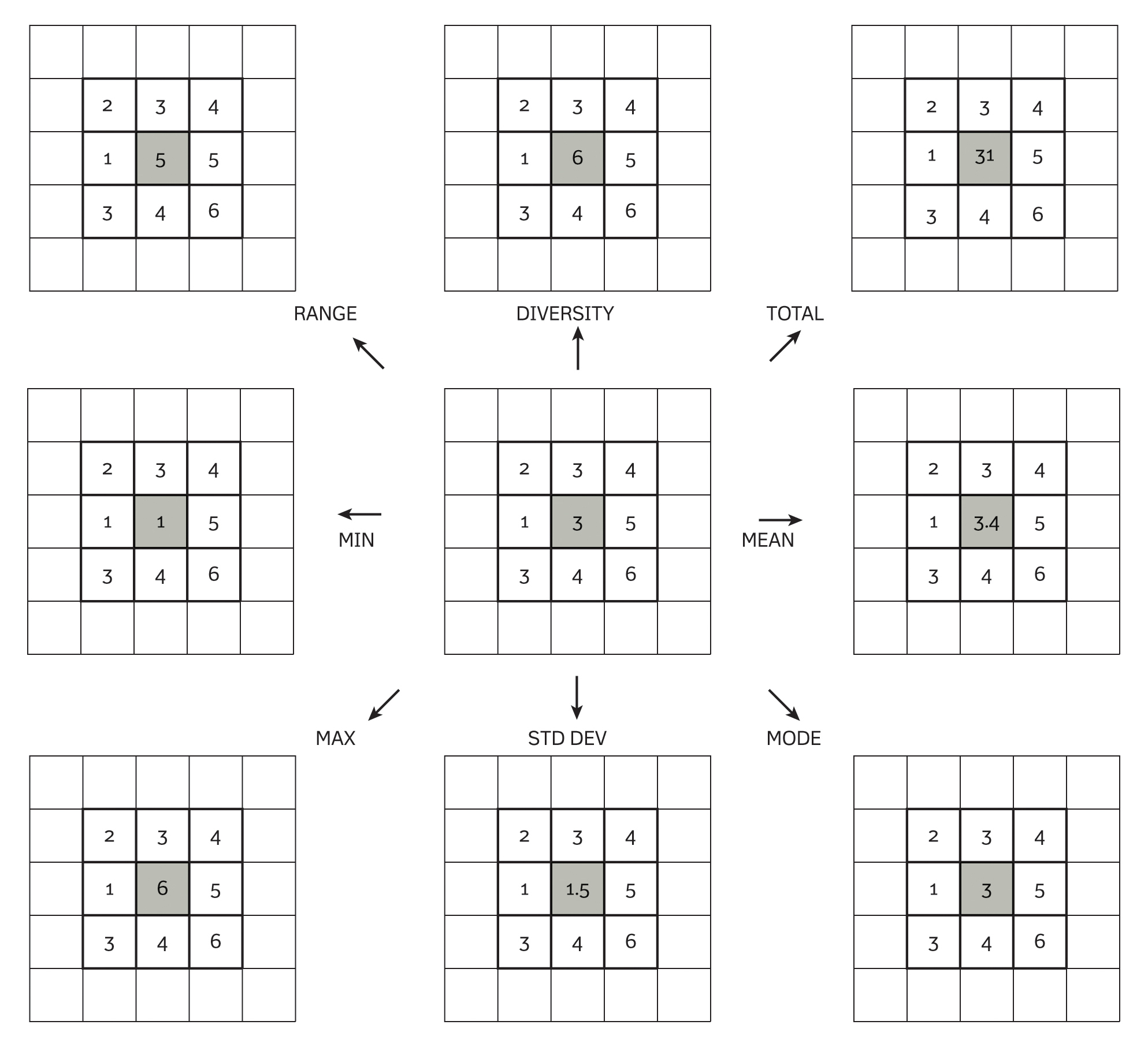

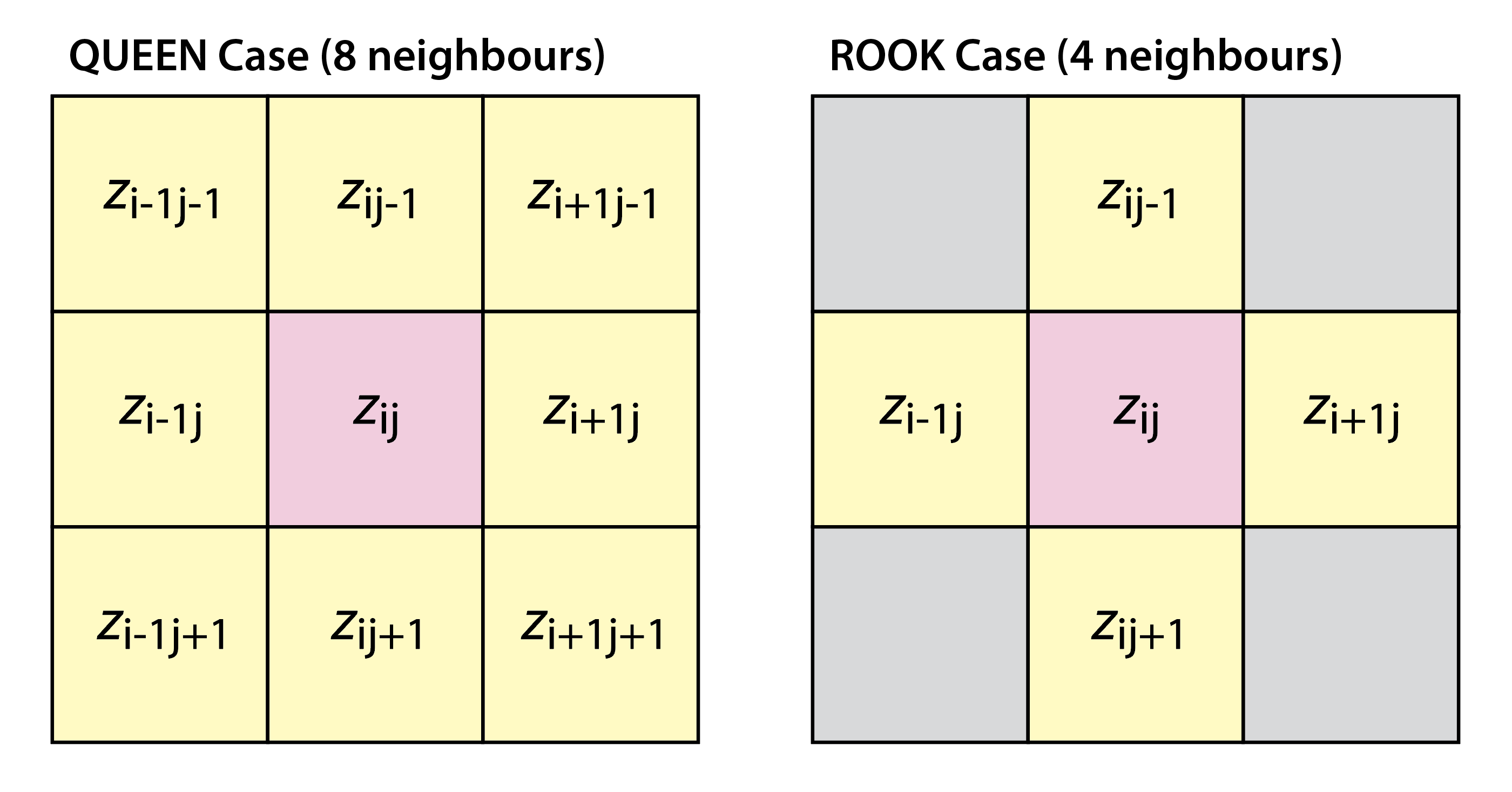

Convolution and filtering

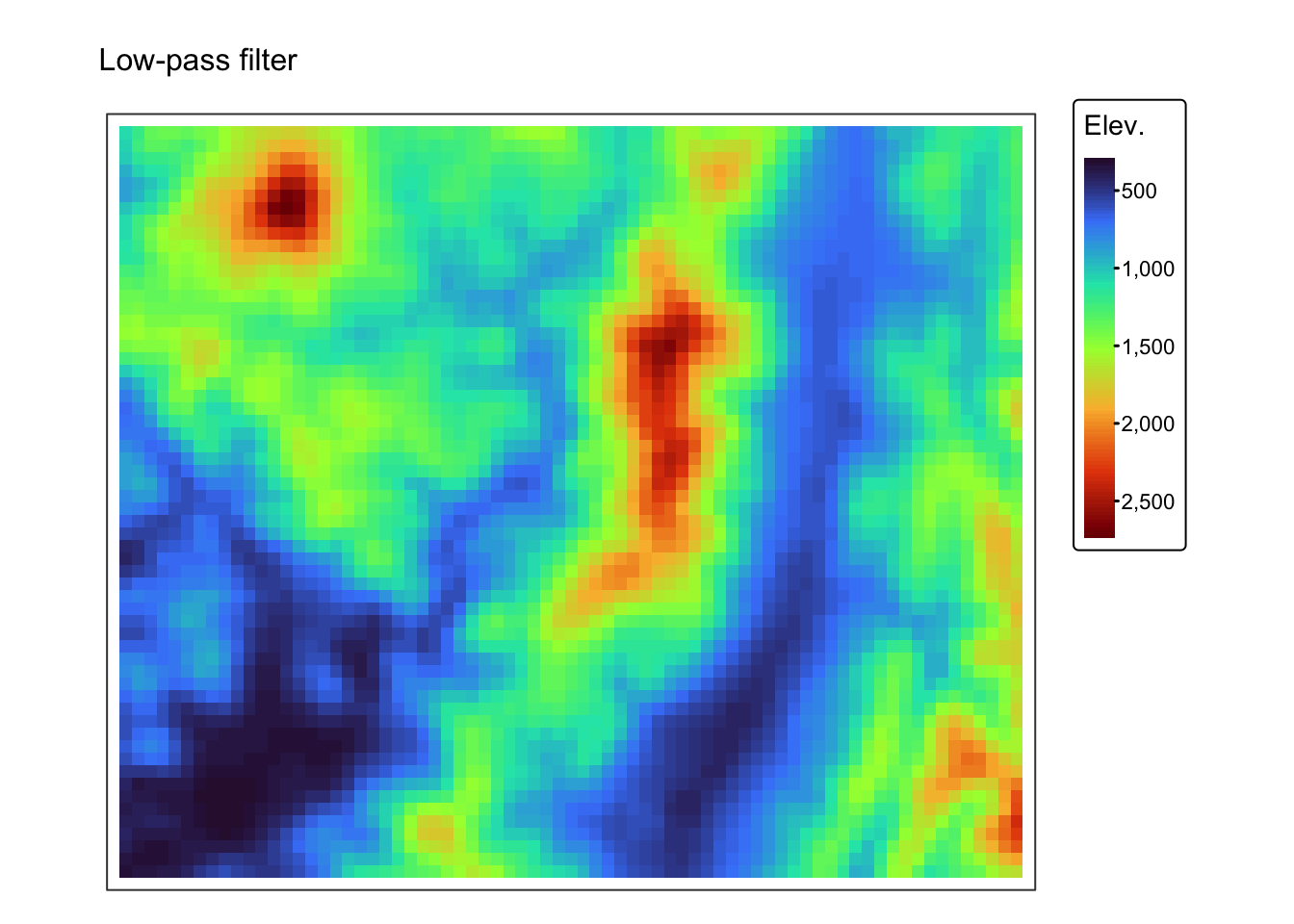

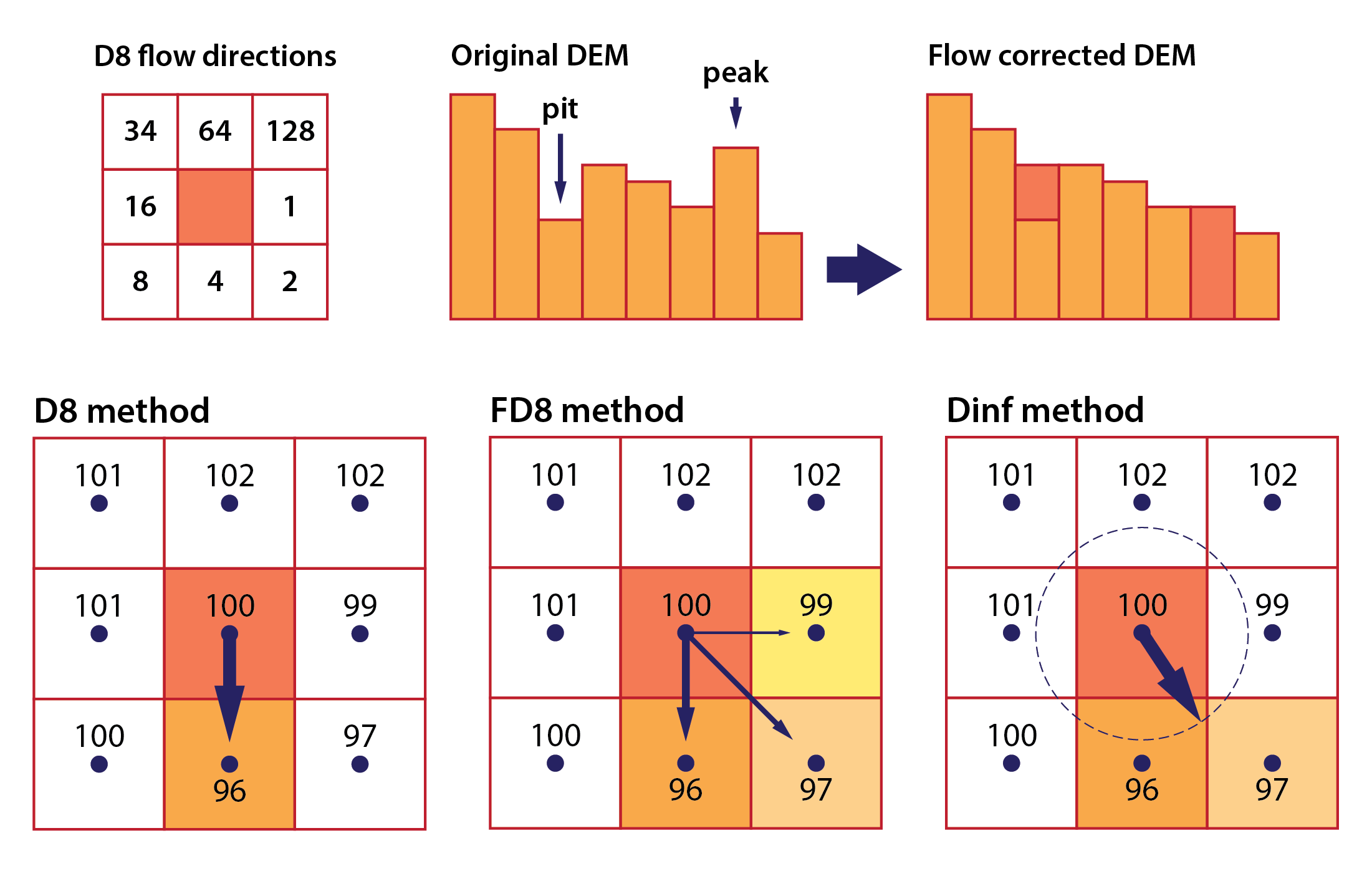

Convolution is a fundamental operation in spatial analysis that involves applying a filter (or kernel) to a continuous field to transform or enhance its features. The process works by moving a small matrix (the kernel) over each cell of the raster. At each position, the values under the kernel are multiplied by the corresponding kernel weights, and then a statistic of these products is computed to generate a new value for the central cell.

The kernel is frequently of size 3 × 3 raster cells, but any other kind of square window (e.g., 5 × 5, 7 × 7, or a distance measurement) is possible. The statistic is usually the mean or the sum of all products, but others are also possible (see Fig. 3, below).

Fig. 2: Kernel operations for spatial filtering. Reproduced from: Burrough et al (2015), Principles of Geographical Information Systems (3rd ed.), Oxford University Press.

The kernel’s coefficients determine the nature of the operation:

Smoothing (low-pass filters): these kernels average the values of neighbouring cells to reduce noise. A common example is a uniform kernel (e.g. every element is 1/9 in a 3 × 3 matrix) or a Gaussian kernel where the centre has a higher weight.

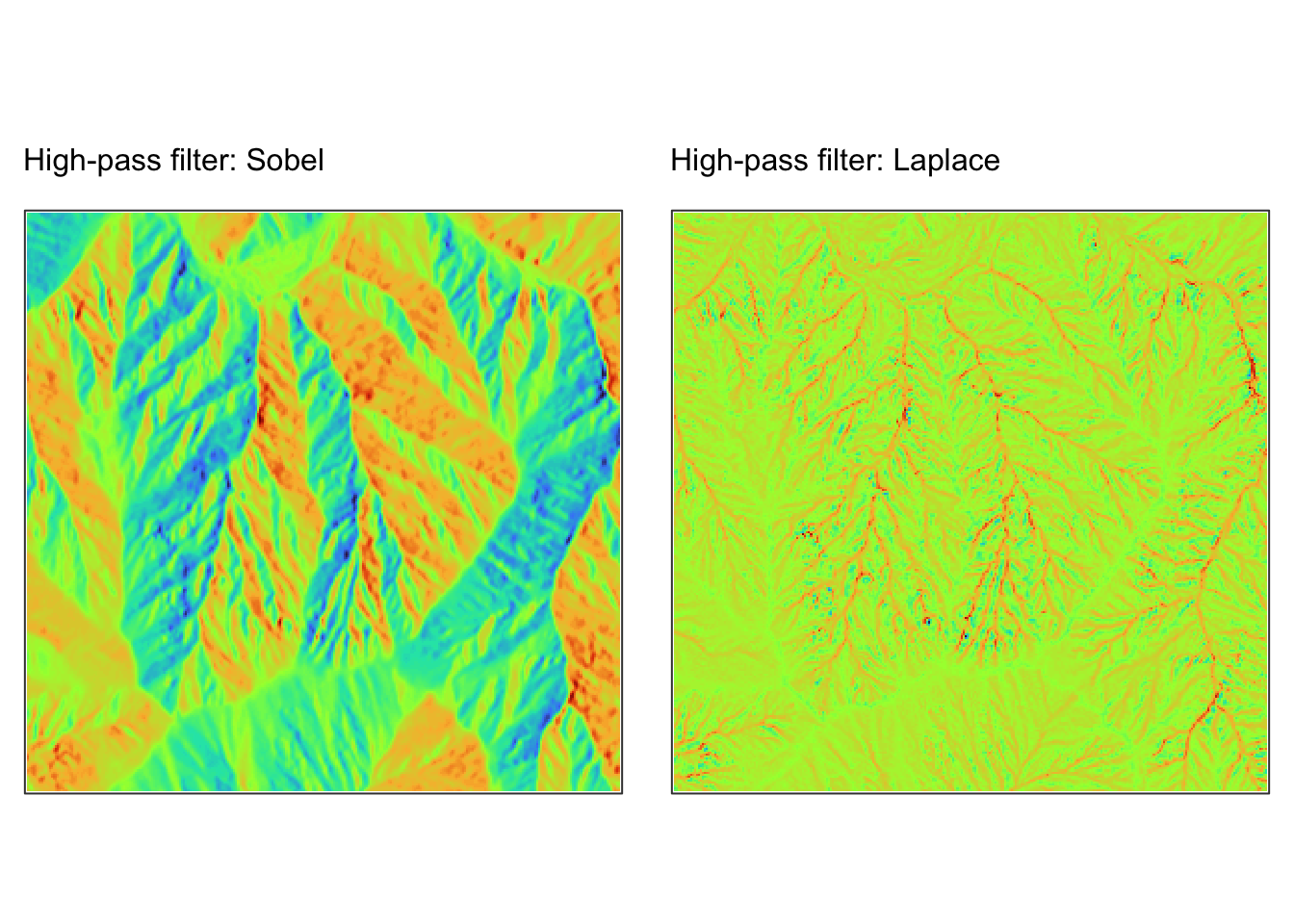

Edge detection (high-pass filters): These kernels emphasise differences in adjacent cell values to highlight boundaries. Examples include the Sobel operator (which can detect horizontal or vertical edges) and the Laplacian filter.

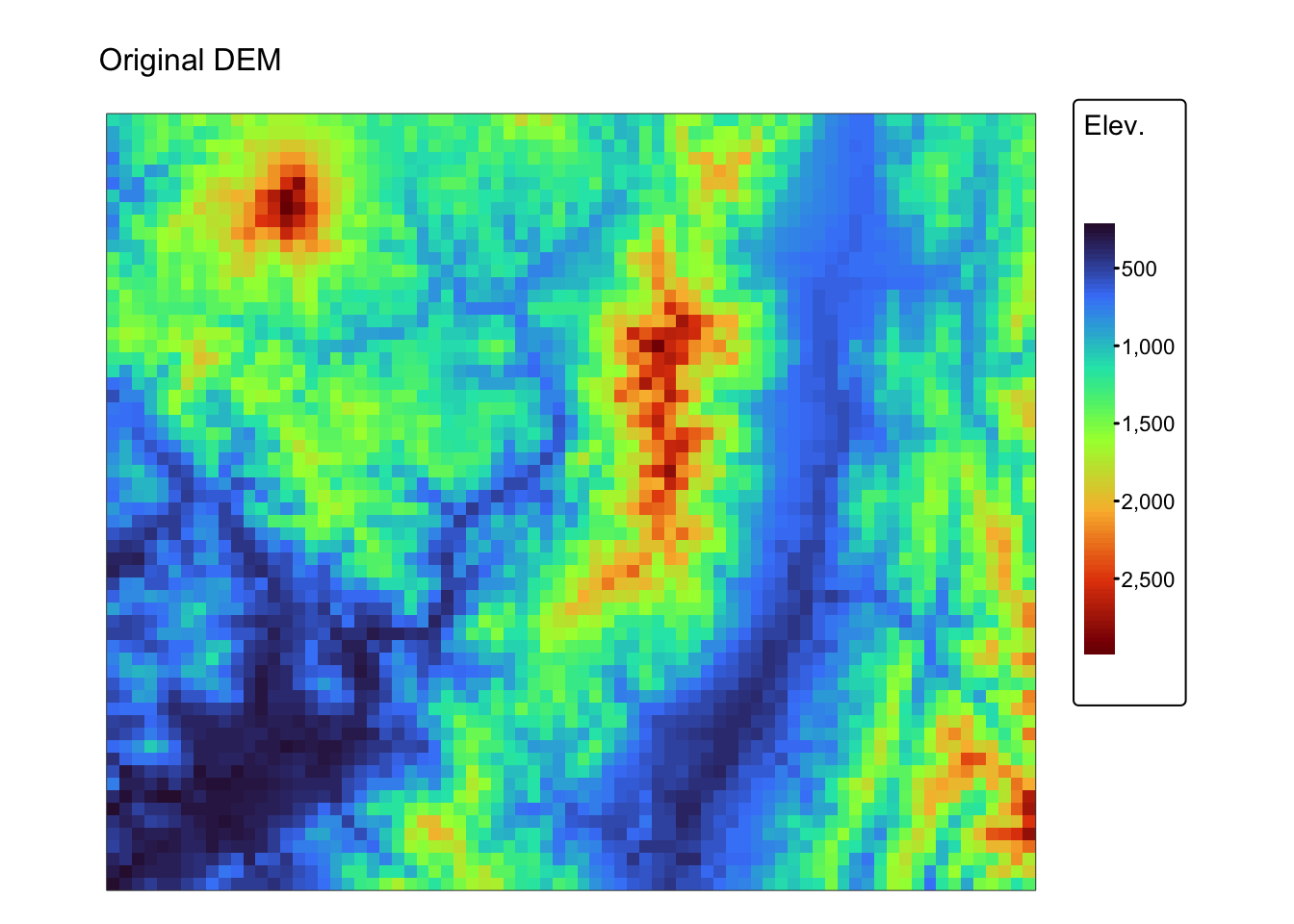

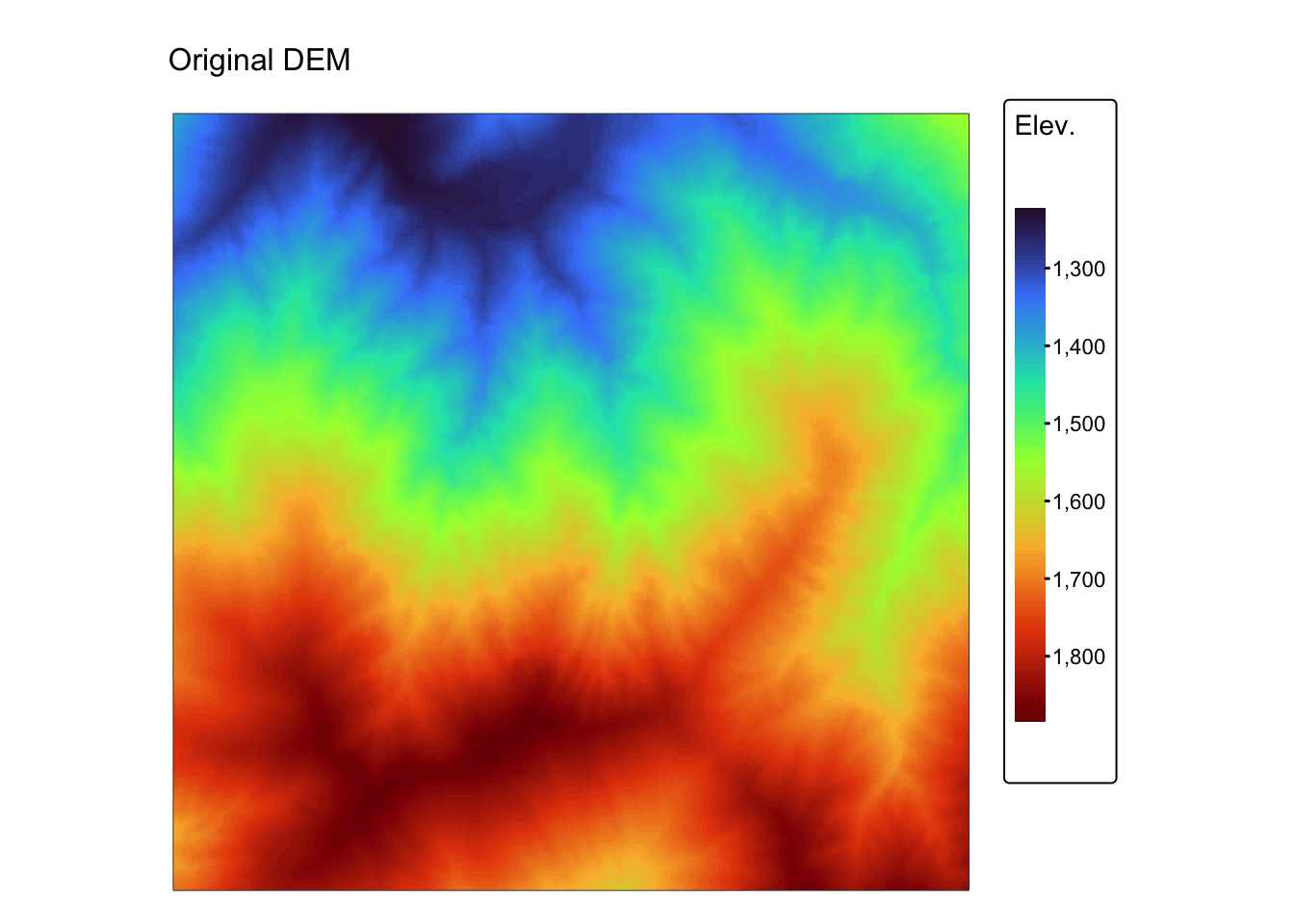

Below are two examples of how to perform convolution operations on a

raster using the terra package in R. The first example

demonstrates the smoothing filter using the coarse (1km) resolution

digital elevation model r_dem, whereas the second example

demonstrates edge detection using the fine (5m) resolution digital

elevation model jp_dem.

r1km <- r_dem # coarse DEM

r5m <- jp_dem # fine DEM

# Define a 3 x 3 smoothing kernel (simple average filter)

smoothing_kernel <- matrix(1/9, nrow = 3, ncol = 3)

# Apply convolution for smoothing using the focal function

r_smoothed <- focal(r1km, w = smoothing_kernel, fun = sum, na.policy = "omit")

# Define a Sobel kernel for edge detection (horizontal edges)

sobel_x <- matrix(c(-1, 0, 1,

-2, 0, 2,

-1, 0, 1), nrow = 3, byrow = TRUE)

# Apply convolution for edge detection

r_edges <- focal(r5m, w = sobel_x, fun = sum, na.policy = "omit")

# Define a Laplace kernel for edge detection

laplace <- matrix(c(0, 1, 0,

1, -4, 1,

0, 1, 0), nrow = 3, byrow = TRUE)

# Apply convolution for edge detection

r_edges2 <- focal(r5m, w = laplace, fun = sum, na.policy = "omit")

# Rename SpatRaster names

names(r1km) <- "Elev."

names(r5m) <- "Elev."

names(r_smoothed) <- "Elev."

names(r_edges) <- "Elev."

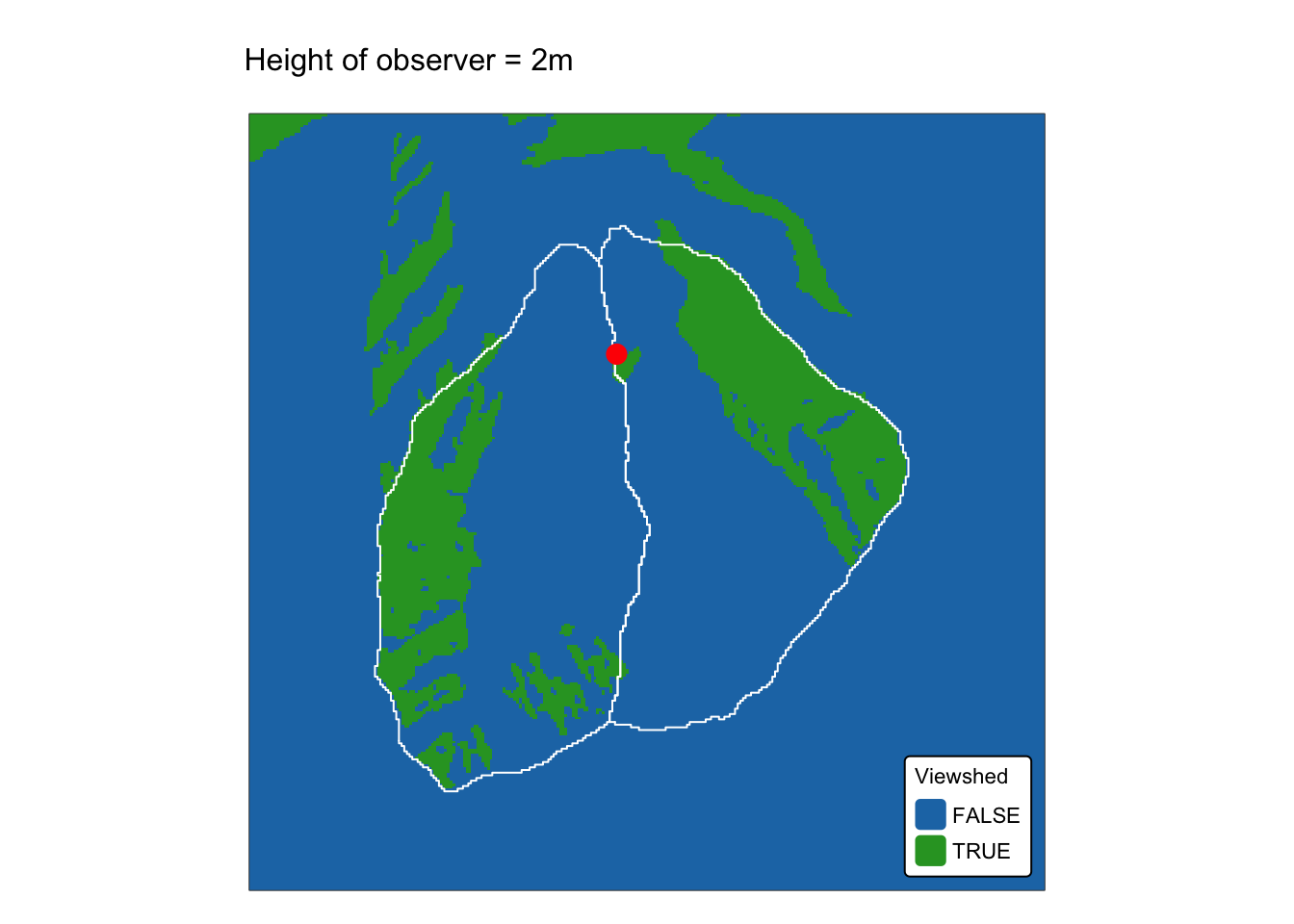

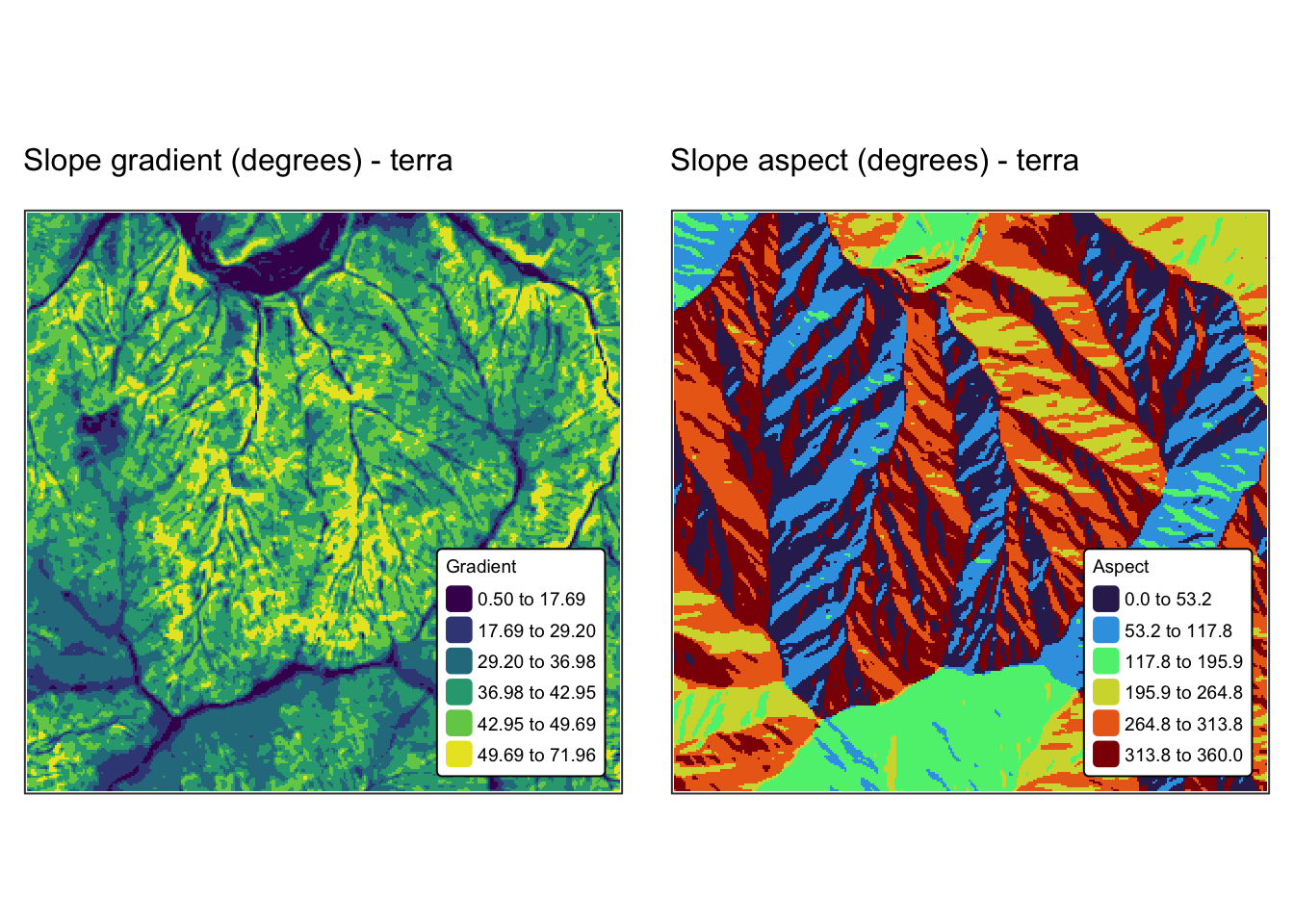

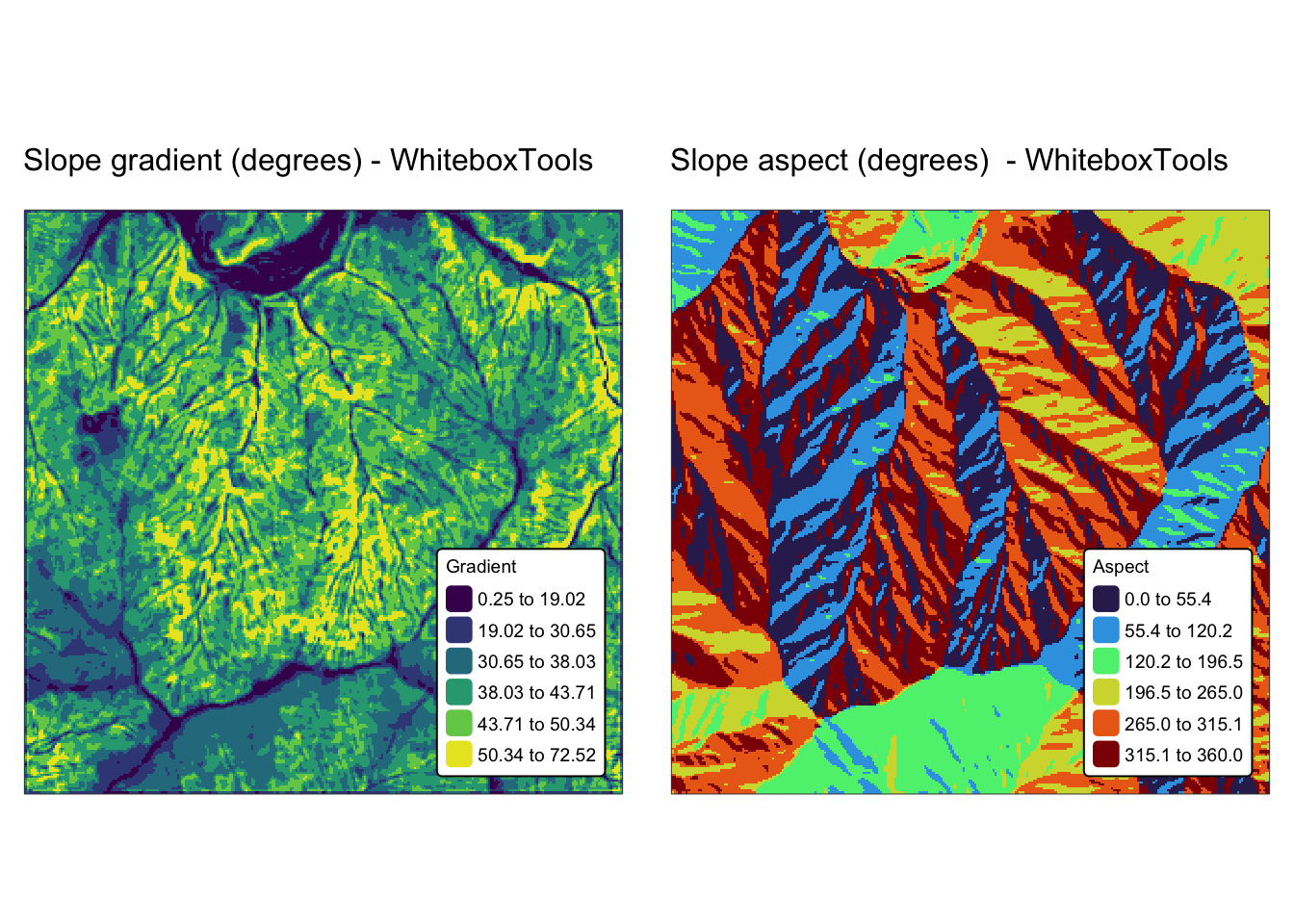

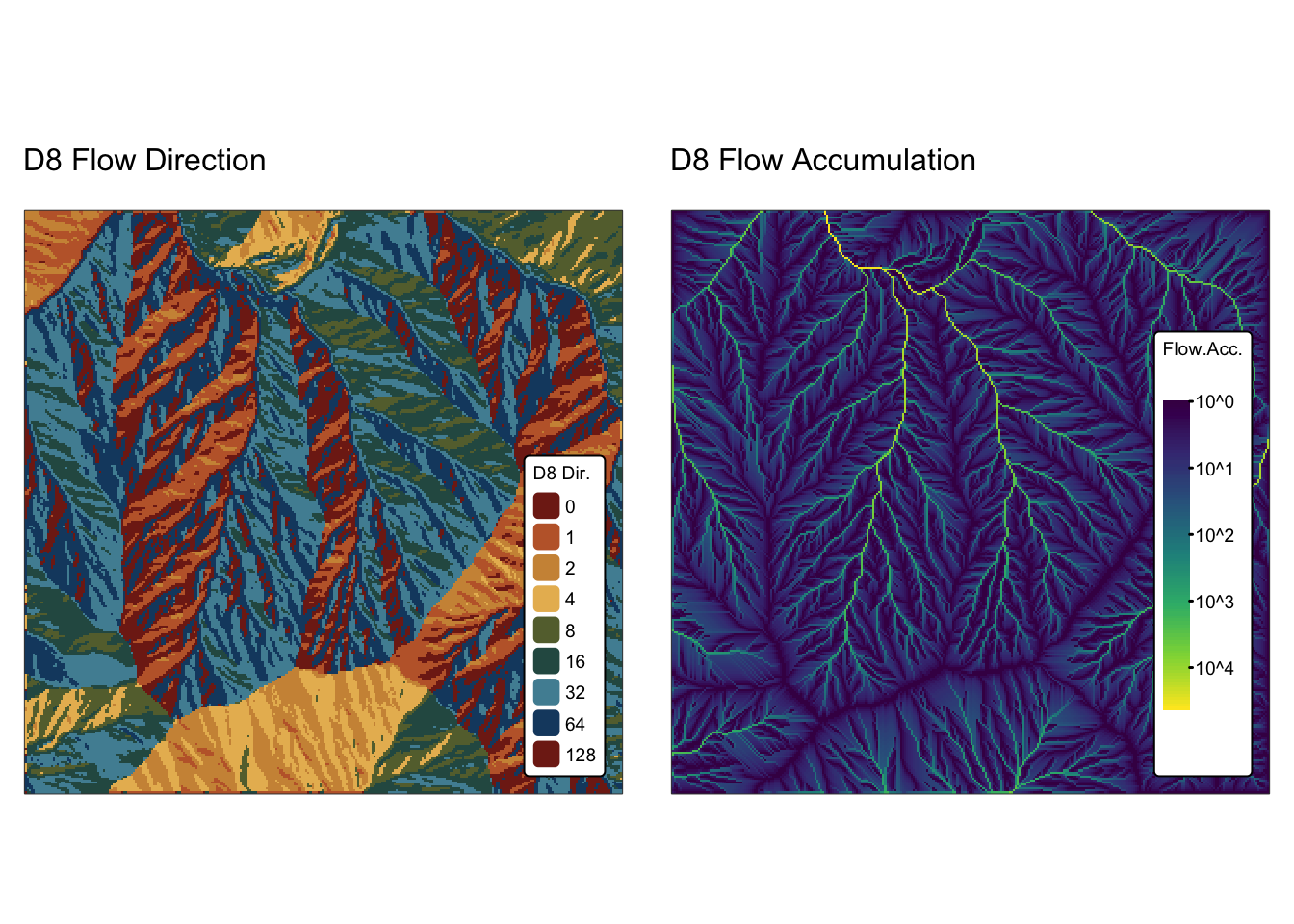

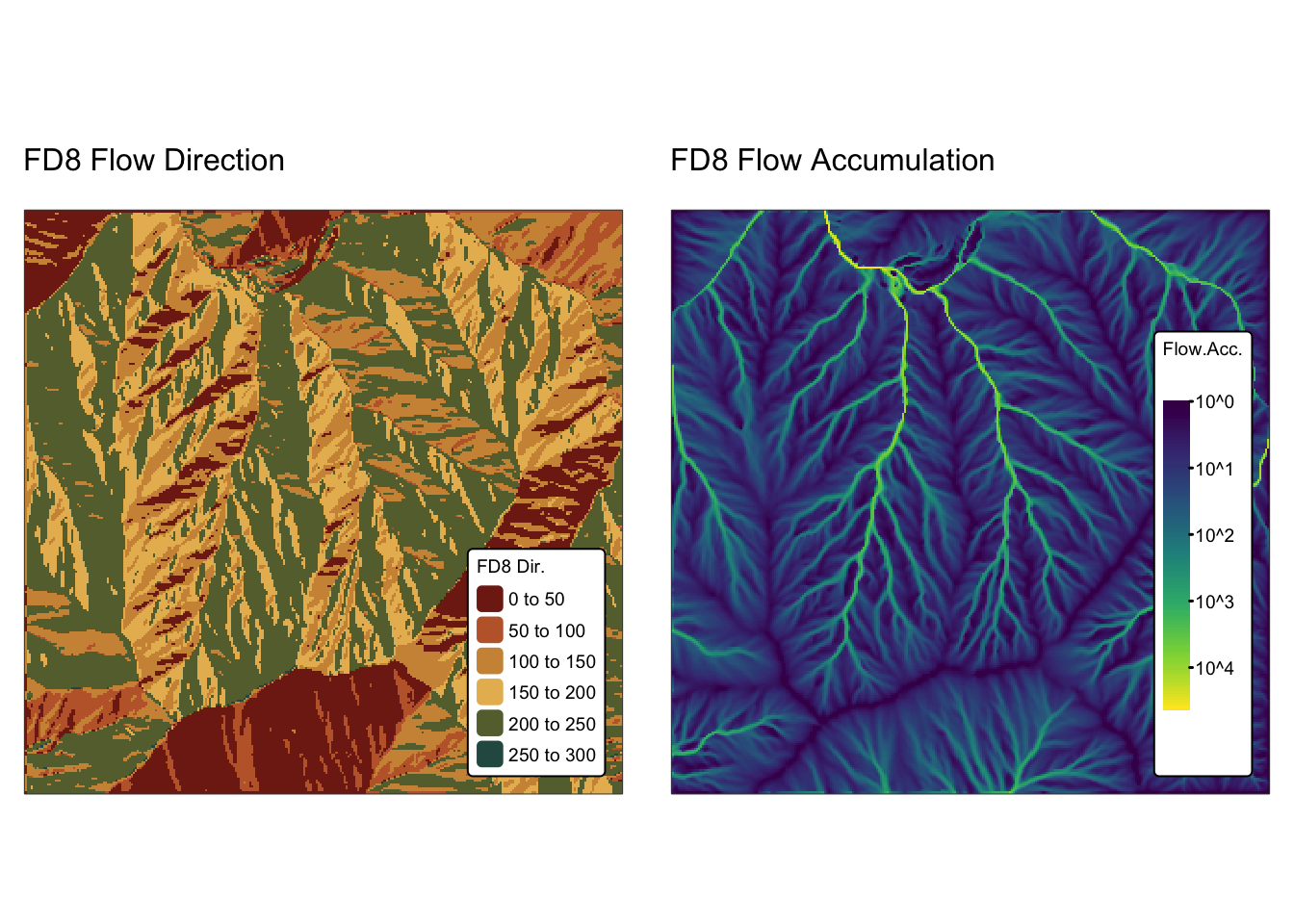

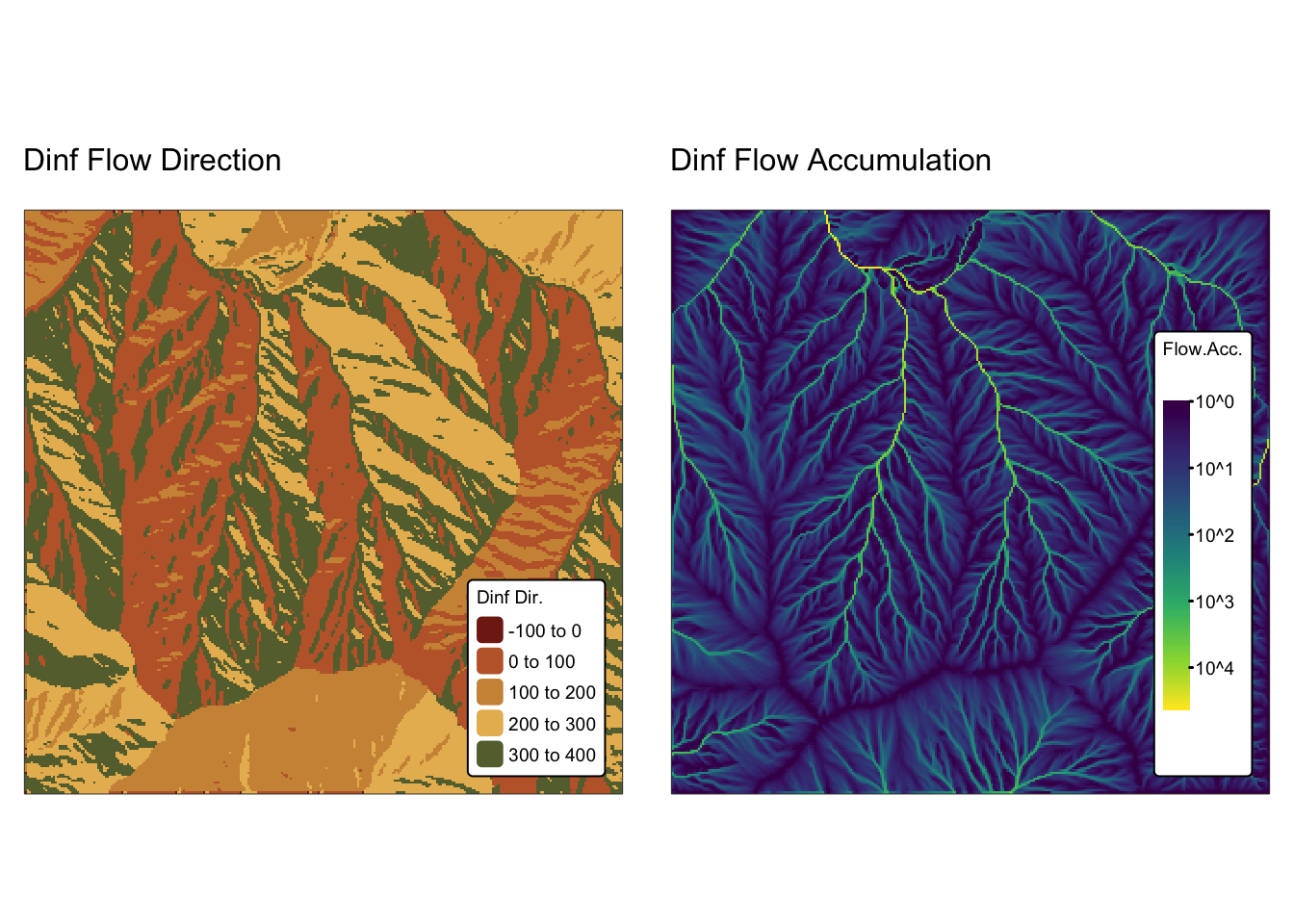

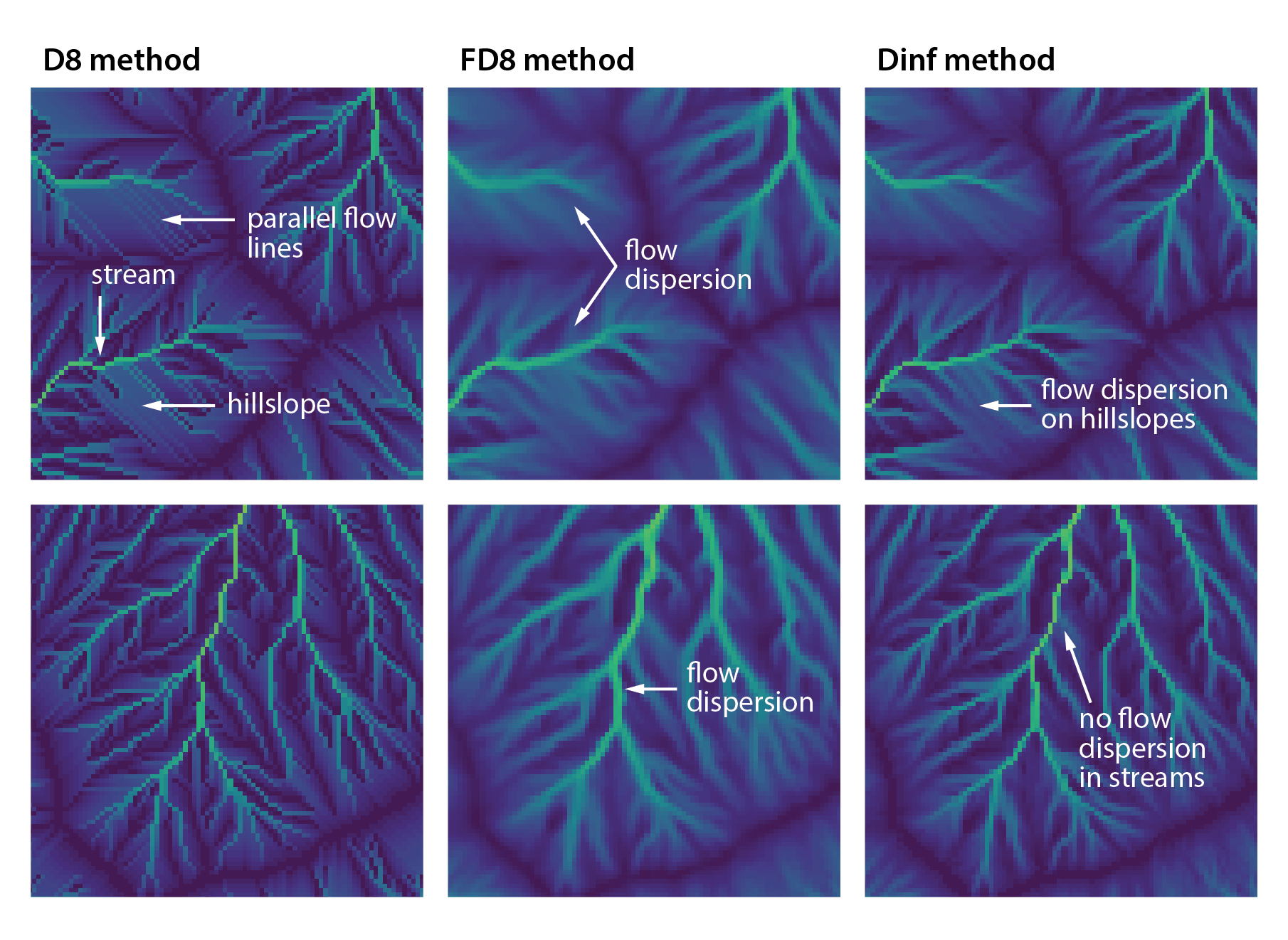

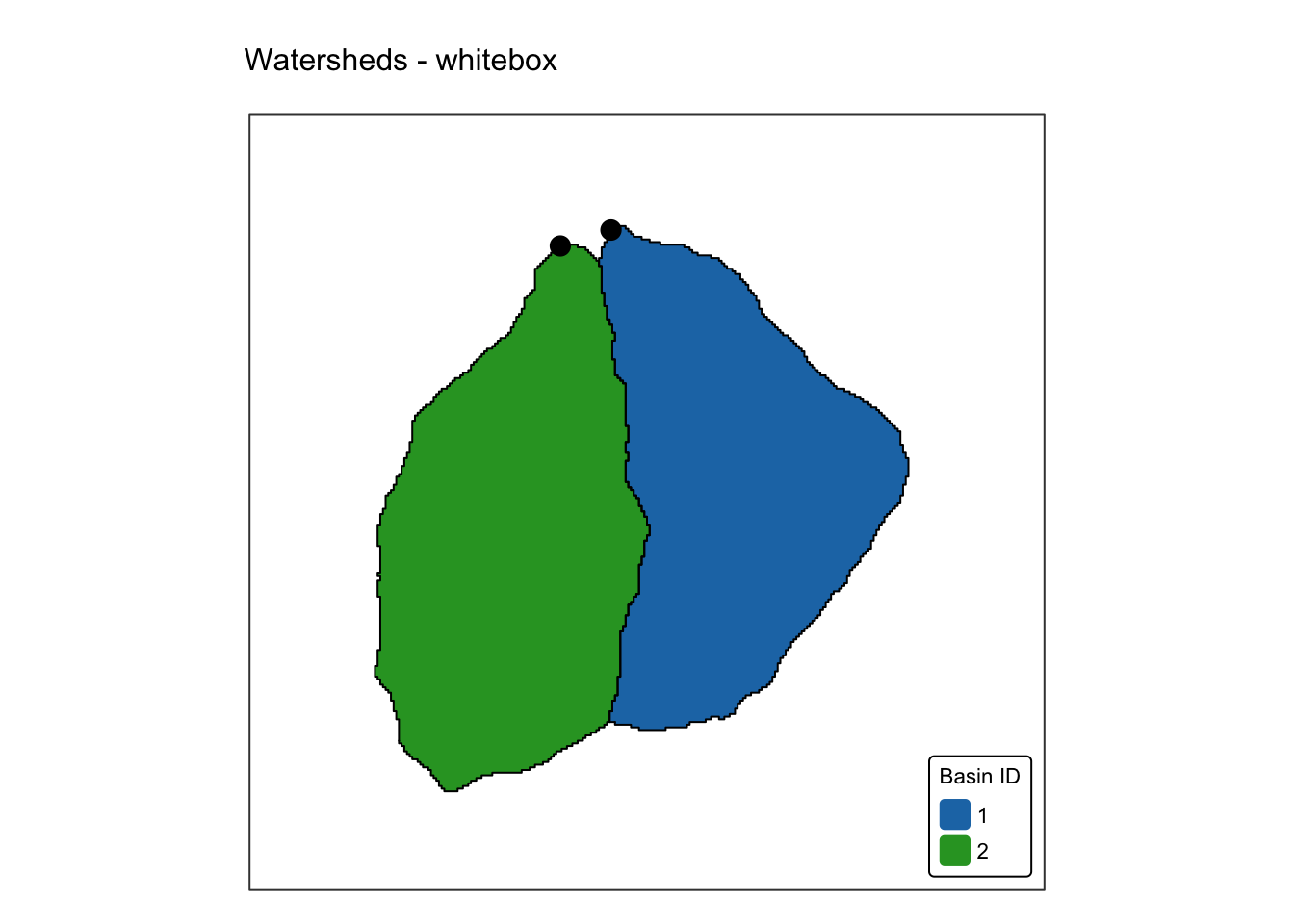

names(r_edges2) <- "Elev."Explanation: